mirror of

https://github.com/ClickHouse/ClickHouse.git

synced 2024-11-22 23:52:03 +00:00

Merge branch 'master' into async-loader-integration

This commit is contained in:

commit

432d359a2b

@ -8,6 +8,7 @@

|

||||

#include <functional>

|

||||

#include <iosfwd>

|

||||

|

||||

#include <base/defines.h>

|

||||

#include <base/types.h>

|

||||

#include <base/unaligned.h>

|

||||

|

||||

@ -274,6 +275,8 @@ struct CRC32Hash

|

||||

if (size == 0)

|

||||

return 0;

|

||||

|

||||

chassert(pos);

|

||||

|

||||

if (size < 8)

|

||||

{

|

||||

return static_cast<unsigned>(hashLessThan8(x.data, x.size));

|

||||

|

||||

@ -115,8 +115,15 @@

|

||||

/// because SIGABRT is easier to debug than SIGTRAP (the second one makes gdb crazy)

|

||||

#if !defined(chassert)

|

||||

#if defined(ABORT_ON_LOGICAL_ERROR)

|

||||

// clang-format off

|

||||

#include <base/types.h>

|

||||

namespace DB

|

||||

{

|

||||

void abortOnFailedAssertion(const String & description);

|

||||

}

|

||||

#define chassert(x) static_cast<bool>(x) ? void(0) : ::DB::abortOnFailedAssertion(#x)

|

||||

#define UNREACHABLE() abort()

|

||||

// clang-format off

|

||||

#else

|

||||

/// Here sizeof() trick is used to suppress unused warning for result,

|

||||

/// since simple "(void)x" will evaluate the expression, while

|

||||

|

||||

@ -57,7 +57,7 @@ public:

|

||||

URI();

|

||||

/// Creates an empty URI.

|

||||

|

||||

explicit URI(const std::string & uri, bool disable_url_encoding = false);

|

||||

explicit URI(const std::string & uri, bool enable_url_encoding = true);

|

||||

/// Parses an URI from the given string. Throws a

|

||||

/// SyntaxException if the uri is not valid.

|

||||

|

||||

@ -362,7 +362,7 @@ private:

|

||||

std::string _query;

|

||||

std::string _fragment;

|

||||

|

||||

bool _disable_url_encoding = false;

|

||||

bool _enable_url_encoding = true;

|

||||

};

|

||||

|

||||

|

||||

|

||||

@ -36,8 +36,8 @@ URI::URI():

|

||||

}

|

||||

|

||||

|

||||

URI::URI(const std::string& uri, bool decode_and_encode_path):

|

||||

_port(0), _disable_url_encoding(decode_and_encode_path)

|

||||

URI::URI(const std::string& uri, bool enable_url_encoding):

|

||||

_port(0), _enable_url_encoding(enable_url_encoding)

|

||||

{

|

||||

parse(uri);

|

||||

}

|

||||

@ -108,7 +108,7 @@ URI::URI(const URI& uri):

|

||||

_path(uri._path),

|

||||

_query(uri._query),

|

||||

_fragment(uri._fragment),

|

||||

_disable_url_encoding(uri._disable_url_encoding)

|

||||

_enable_url_encoding(uri._enable_url_encoding)

|

||||

{

|

||||

}

|

||||

|

||||

@ -121,7 +121,7 @@ URI::URI(const URI& baseURI, const std::string& relativeURI):

|

||||

_path(baseURI._path),

|

||||

_query(baseURI._query),

|

||||

_fragment(baseURI._fragment),

|

||||

_disable_url_encoding(baseURI._disable_url_encoding)

|

||||

_enable_url_encoding(baseURI._enable_url_encoding)

|

||||

{

|

||||

resolve(relativeURI);

|

||||

}

|

||||

@ -153,7 +153,7 @@ URI& URI::operator = (const URI& uri)

|

||||

_path = uri._path;

|

||||

_query = uri._query;

|

||||

_fragment = uri._fragment;

|

||||

_disable_url_encoding = uri._disable_url_encoding;

|

||||

_enable_url_encoding = uri._enable_url_encoding;

|

||||

}

|

||||

return *this;

|

||||

}

|

||||

@ -184,7 +184,7 @@ void URI::swap(URI& uri)

|

||||

std::swap(_path, uri._path);

|

||||

std::swap(_query, uri._query);

|

||||

std::swap(_fragment, uri._fragment);

|

||||

std::swap(_disable_url_encoding, uri._disable_url_encoding);

|

||||

std::swap(_enable_url_encoding, uri._enable_url_encoding);

|

||||

}

|

||||

|

||||

|

||||

@ -687,18 +687,18 @@ void URI::decode(const std::string& str, std::string& decodedStr, bool plusAsSpa

|

||||

|

||||

void URI::encodePath(std::string & encodedStr) const

|

||||

{

|

||||

if (_disable_url_encoding)

|

||||

encodedStr = _path;

|

||||

else

|

||||

if (_enable_url_encoding)

|

||||

encode(_path, RESERVED_PATH, encodedStr);

|

||||

else

|

||||

encodedStr = _path;

|

||||

}

|

||||

|

||||

void URI::decodePath(const std::string & encodedStr)

|

||||

{

|

||||

if (_disable_url_encoding)

|

||||

_path = encodedStr;

|

||||

else

|

||||

if (_enable_url_encoding)

|

||||

decode(encodedStr, _path);

|

||||

else

|

||||

_path = encodedStr;

|

||||

}

|

||||

|

||||

bool URI::isWellKnownPort() const

|

||||

|

||||

@ -17,7 +17,8 @@

|

||||

#ifndef METROHASH_PLATFORM_H

|

||||

#define METROHASH_PLATFORM_H

|

||||

|

||||

#include <stdint.h>

|

||||

#include <bit>

|

||||

#include <cstdint>

|

||||

#include <cstring>

|

||||

|

||||

// rotate right idiom recognized by most compilers

|

||||

@ -33,6 +34,11 @@ inline static uint64_t read_u64(const void * const ptr)

|

||||

// so we use memcpy() which is the most portable. clang & gcc usually translates `memcpy()` into a single `load` instruction

|

||||

// when hardware supports it, so using memcpy() is efficient too.

|

||||

memcpy(&result, ptr, sizeof(result));

|

||||

|

||||

#if __BYTE_ORDER__ == __ORDER_BIG_ENDIAN__

|

||||

result = std::byteswap(result);

|

||||

#endif

|

||||

|

||||

return result;

|

||||

}

|

||||

|

||||

@ -40,6 +46,11 @@ inline static uint64_t read_u32(const void * const ptr)

|

||||

{

|

||||

uint32_t result;

|

||||

memcpy(&result, ptr, sizeof(result));

|

||||

|

||||

#if __BYTE_ORDER__ == __ORDER_BIG_ENDIAN__

|

||||

result = std::byteswap(result);

|

||||

#endif

|

||||

|

||||

return result;

|

||||

}

|

||||

|

||||

@ -47,6 +58,11 @@ inline static uint64_t read_u16(const void * const ptr)

|

||||

{

|

||||

uint16_t result;

|

||||

memcpy(&result, ptr, sizeof(result));

|

||||

|

||||

#if __BYTE_ORDER__ == __ORDER_BIG_ENDIAN__

|

||||

result = std::byteswap(result);

|

||||

#endif

|

||||

|

||||

return result;

|

||||

}

|

||||

|

||||

|

||||

@ -32,7 +32,7 @@ RUN arch=${TARGETARCH:-amd64} \

|

||||

esac

|

||||

|

||||

ARG REPOSITORY="https://s3.amazonaws.com/clickhouse-builds/22.4/31c367d3cd3aefd316778601ff6565119fe36682/package_release"

|

||||

ARG VERSION="23.7.1.2470"

|

||||

ARG VERSION="23.7.2.25"

|

||||

ARG PACKAGES="clickhouse-keeper"

|

||||

|

||||

# user/group precreated explicitly with fixed uid/gid on purpose.

|

||||

|

||||

@ -6,7 +6,7 @@ Usage:

|

||||

Build deb package with `clang-14` in `debug` mode:

|

||||

```

|

||||

$ mkdir deb/test_output

|

||||

$ ./packager --output-dir deb/test_output/ --package-type deb --compiler=clang-14 --build-type=debug

|

||||

$ ./packager --output-dir deb/test_output/ --package-type deb --compiler=clang-14 --debug-build

|

||||

$ ls -l deb/test_output

|

||||

-rw-r--r-- 1 root root 3730 clickhouse-client_22.2.2+debug_all.deb

|

||||

-rw-r--r-- 1 root root 84221888 clickhouse-common-static_22.2.2+debug_amd64.deb

|

||||

|

||||

@ -112,12 +112,12 @@ def run_docker_image_with_env(

|

||||

subprocess.check_call(cmd, shell=True)

|

||||

|

||||

|

||||

def is_release_build(build_type: str, package_type: str, sanitizer: str) -> bool:

|

||||

return build_type == "" and package_type == "deb" and sanitizer == ""

|

||||

def is_release_build(debug_build: bool, package_type: str, sanitizer: str) -> bool:

|

||||

return not debug_build and package_type == "deb" and sanitizer == ""

|

||||

|

||||

|

||||

def parse_env_variables(

|

||||

build_type: str,

|

||||

debug_build: bool,

|

||||

compiler: str,

|

||||

sanitizer: str,

|

||||

package_type: str,

|

||||

@ -240,7 +240,7 @@ def parse_env_variables(

|

||||

build_target = (

|

||||

f"{build_target} clickhouse-odbc-bridge clickhouse-library-bridge"

|

||||

)

|

||||

if is_release_build(build_type, package_type, sanitizer):

|

||||

if is_release_build(debug_build, package_type, sanitizer):

|

||||

cmake_flags.append("-DSPLIT_DEBUG_SYMBOLS=ON")

|

||||

result.append("WITH_PERFORMANCE=1")

|

||||

if is_cross_arm:

|

||||

@ -255,8 +255,8 @@ def parse_env_variables(

|

||||

|

||||

if sanitizer:

|

||||

result.append(f"SANITIZER={sanitizer}")

|

||||

if build_type:

|

||||

result.append(f"BUILD_TYPE={build_type.capitalize()}")

|

||||

if debug_build:

|

||||

result.append("BUILD_TYPE=Debug")

|

||||

else:

|

||||

result.append("BUILD_TYPE=None")

|

||||

|

||||

@ -361,7 +361,7 @@ def parse_args() -> argparse.Namespace:

|

||||

help="ClickHouse git repository",

|

||||

)

|

||||

parser.add_argument("--output-dir", type=dir_name, required=True)

|

||||

parser.add_argument("--build-type", choices=("debug", ""), default="")

|

||||

parser.add_argument("--debug-build", action="store_true")

|

||||

|

||||

parser.add_argument(

|

||||

"--compiler",

|

||||

@ -467,7 +467,7 @@ def main():

|

||||

build_image(image_with_version, dockerfile)

|

||||

|

||||

env_prepared = parse_env_variables(

|

||||

args.build_type,

|

||||

args.debug_build,

|

||||

args.compiler,

|

||||

args.sanitizer,

|

||||

args.package_type,

|

||||

|

||||

@ -33,7 +33,7 @@ RUN arch=${TARGETARCH:-amd64} \

|

||||

# lts / testing / prestable / etc

|

||||

ARG REPO_CHANNEL="stable"

|

||||

ARG REPOSITORY="https://packages.clickhouse.com/tgz/${REPO_CHANNEL}"

|

||||

ARG VERSION="23.7.1.2470"

|

||||

ARG VERSION="23.7.2.25"

|

||||

ARG PACKAGES="clickhouse-client clickhouse-server clickhouse-common-static"

|

||||

|

||||

# user/group precreated explicitly with fixed uid/gid on purpose.

|

||||

|

||||

@ -23,7 +23,7 @@ RUN sed -i "s|http://archive.ubuntu.com|${apt_archive}|g" /etc/apt/sources.list

|

||||

|

||||

ARG REPO_CHANNEL="stable"

|

||||

ARG REPOSITORY="deb [signed-by=/usr/share/keyrings/clickhouse-keyring.gpg] https://packages.clickhouse.com/deb ${REPO_CHANNEL} main"

|

||||

ARG VERSION="23.7.1.2470"

|

||||

ARG VERSION="23.7.2.25"

|

||||

ARG PACKAGES="clickhouse-client clickhouse-server clickhouse-common-static"

|

||||

|

||||

# set non-empty deb_location_url url to create a docker image

|

||||

|

||||

@ -95,6 +95,7 @@ RUN python3 -m pip install --no-cache-dir \

|

||||

pytest-timeout \

|

||||

pytest-xdist \

|

||||

pytz \

|

||||

pyyaml==5.3.1 \

|

||||

redis \

|

||||

requests-kerberos \

|

||||

tzlocal==2.1 \

|

||||

|

||||

@ -200,8 +200,8 @@ Templates:

|

||||

- [Server Setting](_description_templates/template-server-setting.md)

|

||||

- [Database or Table engine](_description_templates/template-engine.md)

|

||||

- [System table](_description_templates/template-system-table.md)

|

||||

- [Data type](_description_templates/data-type.md)

|

||||

- [Statement](_description_templates/statement.md)

|

||||

- [Data type](_description_templates/template-data-type.md)

|

||||

- [Statement](_description_templates/template-statement.md)

|

||||

|

||||

|

||||

<a name="how-to-build-docs"/>

|

||||

|

||||

31

docs/changelogs/v23.7.2.25-stable.md

Normal file

31

docs/changelogs/v23.7.2.25-stable.md

Normal file

@ -0,0 +1,31 @@

|

||||

---

|

||||

sidebar_position: 1

|

||||

sidebar_label: 2023

|

||||

---

|

||||

|

||||

# 2023 Changelog

|

||||

|

||||

### ClickHouse release v23.7.2.25-stable (8dd1107b032) FIXME as compared to v23.7.1.2470-stable (a70127baecc)

|

||||

|

||||

#### Backward Incompatible Change

|

||||

* Backported in [#52850](https://github.com/ClickHouse/ClickHouse/issues/52850): If a dynamic disk contains a name, it should be specified as `disk = disk(name = 'disk_name'`, ...) in disk function arguments. In previous version it could be specified as `disk = disk_<disk_name>(...)`, which is no longer supported. [#52820](https://github.com/ClickHouse/ClickHouse/pull/52820) ([Kseniia Sumarokova](https://github.com/kssenii)).

|

||||

|

||||

#### Build/Testing/Packaging Improvement

|

||||

* Backported in [#52913](https://github.com/ClickHouse/ClickHouse/issues/52913): Add `clickhouse-keeper-client` symlink to the clickhouse-server package. [#51882](https://github.com/ClickHouse/ClickHouse/pull/51882) ([Mikhail f. Shiryaev](https://github.com/Felixoid)).

|

||||

|

||||

#### Bug Fix (user-visible misbehavior in an official stable release)

|

||||

|

||||

* Fix binary arithmetic for Nullable(IPv4) [#51642](https://github.com/ClickHouse/ClickHouse/pull/51642) ([Yakov Olkhovskiy](https://github.com/yakov-olkhovskiy)).

|

||||

* Support IPv4 and IPv6 as dictionary attributes [#51756](https://github.com/ClickHouse/ClickHouse/pull/51756) ([Yakov Olkhovskiy](https://github.com/yakov-olkhovskiy)).

|

||||

* init and destroy ares channel on demand.. [#52634](https://github.com/ClickHouse/ClickHouse/pull/52634) ([Arthur Passos](https://github.com/arthurpassos)).

|

||||

* Fix crash in function `tuple` with one sparse column argument [#52659](https://github.com/ClickHouse/ClickHouse/pull/52659) ([Anton Popov](https://github.com/CurtizJ)).

|

||||

* Fix data race in Keeper reconfiguration [#52804](https://github.com/ClickHouse/ClickHouse/pull/52804) ([Antonio Andelic](https://github.com/antonio2368)).

|

||||

* clickhouse-keeper: fix implementation of server with poll() [#52833](https://github.com/ClickHouse/ClickHouse/pull/52833) ([Andy Fiddaman](https://github.com/citrus-it)).

|

||||

|

||||

#### NOT FOR CHANGELOG / INSIGNIFICANT

|

||||

|

||||

* Rename setting disable_url_encoding to enable_url_encoding and add a test [#52656](https://github.com/ClickHouse/ClickHouse/pull/52656) ([Kruglov Pavel](https://github.com/Avogar)).

|

||||

* Fix bugs and better test for SYSTEM STOP LISTEN [#52680](https://github.com/ClickHouse/ClickHouse/pull/52680) ([Nikolay Degterinsky](https://github.com/evillique)).

|

||||

* Increase min protocol version for sparse serialization [#52835](https://github.com/ClickHouse/ClickHouse/pull/52835) ([Anton Popov](https://github.com/CurtizJ)).

|

||||

* Docker improvements [#52869](https://github.com/ClickHouse/ClickHouse/pull/52869) ([Mikhail f. Shiryaev](https://github.com/Felixoid)).

|

||||

|

||||

@ -14,6 +14,20 @@ Supported platforms:

|

||||

- PowerPC 64 LE (experimental)

|

||||

- RISC-V 64 (experimental)

|

||||

|

||||

## Building in docker

|

||||

We use the docker image `clickhouse/binary-builder` for our CI builds. It contains everything necessary to build the binary and packages. There is a script `docker/packager/packager` to ease the image usage:

|

||||

|

||||

```bash

|

||||

# define a directory for the output artifacts

|

||||

output_dir="build_results"

|

||||

# a simplest build

|

||||

./docker/packager/packager --package-type=binary --output-dir "$output_dir"

|

||||

# build debian packages

|

||||

./docker/packager/packager --package-type=deb --output-dir "$output_dir"

|

||||

# by default, debian packages use thin LTO, so we can override it to speed up the build

|

||||

CMAKE_FLAGS='-DENABLE_THINLTO=' ./docker/packager/packager --package-type=deb --output-dir "./$(git rev-parse --show-cdup)/build_results"

|

||||

```

|

||||

|

||||

## Building on Ubuntu

|

||||

|

||||

The following tutorial is based on Ubuntu Linux.

|

||||

|

||||

@ -141,6 +141,10 @@ Runs [stateful functional tests](tests.md#functional-tests). Treat them in the s

|

||||

Runs [integration tests](tests.md#integration-tests).

|

||||

|

||||

|

||||

## Bugfix validate check

|

||||

Checks that either a new test (functional or integration) or there some changed tests that fail with the binary built on master branch. This check is triggered when pull request has "pr-bugfix" label.

|

||||

|

||||

|

||||

## Stress Test

|

||||

Runs stateless functional tests concurrently from several clients to detect

|

||||

concurrency-related errors. If it fails:

|

||||

|

||||

@ -35,7 +35,7 @@ The [system.clusters](../../operations/system-tables/clusters.md) system table c

|

||||

|

||||

When creating a new replica of the database, this replica creates tables by itself. If the replica has been unavailable for a long time and has lagged behind the replication log — it checks its local metadata with the current metadata in ZooKeeper, moves the extra tables with data to a separate non-replicated database (so as not to accidentally delete anything superfluous), creates the missing tables, updates the table names if they have been renamed. The data is replicated at the `ReplicatedMergeTree` level, i.e. if the table is not replicated, the data will not be replicated (the database is responsible only for metadata).

|

||||

|

||||

[`ALTER TABLE ATTACH|FETCH|DROP|DROP DETACHED|DETACH PARTITION|PART`](../../sql-reference/statements/alter/partition.md) queries are allowed but not replicated. The database engine will only add/fetch/remove the partition/part to the current replica. However, if the table itself uses a Replicated table engine, then the data will be replicated after using `ATTACH`.

|

||||

[`ALTER TABLE FREEZE|ATTACH|FETCH|DROP|DROP DETACHED|DETACH PARTITION|PART`](../../sql-reference/statements/alter/partition.md) queries are allowed but not replicated. The database engine will only add/fetch/remove the partition/part to the current replica. However, if the table itself uses a Replicated table engine, then the data will be replicated after using `ATTACH`.

|

||||

|

||||

## Usage Example {#usage-example}

|

||||

|

||||

|

||||

@ -60,6 +60,7 @@ Engines in the family:

|

||||

- [EmbeddedRocksDB](../../engines/table-engines/integrations/embedded-rocksdb.md)

|

||||

- [RabbitMQ](../../engines/table-engines/integrations/rabbitmq.md)

|

||||

- [PostgreSQL](../../engines/table-engines/integrations/postgresql.md)

|

||||

- [S3Queue](../../engines/table-engines/integrations/s3queue.md)

|

||||

|

||||

### Special Engines {#special-engines}

|

||||

|

||||

|

||||

@ -22,7 +22,7 @@ CREATE TABLE deltalake

|

||||

- `url` — Bucket url with path to the existing Delta Lake table.

|

||||

- `aws_access_key_id`, `aws_secret_access_key` - Long-term credentials for the [AWS](https://aws.amazon.com/) account user. You can use these to authenticate your requests. Parameter is optional. If credentials are not specified, they are used from the configuration file.

|

||||

|

||||

Engine parameters can be specified using [Named Collections](../../../operations/named-collections.md)

|

||||

Engine parameters can be specified using [Named Collections](/docs/en/operations/named-collections.md).

|

||||

|

||||

**Example**

|

||||

|

||||

|

||||

@ -22,7 +22,7 @@ CREATE TABLE hudi_table

|

||||

- `url` — Bucket url with the path to an existing Hudi table.

|

||||

- `aws_access_key_id`, `aws_secret_access_key` - Long-term credentials for the [AWS](https://aws.amazon.com/) account user. You can use these to authenticate your requests. Parameter is optional. If credentials are not specified, they are used from the configuration file.

|

||||

|

||||

Engine parameters can be specified using [Named Collections](../../../operations/named-collections.md)

|

||||

Engine parameters can be specified using [Named Collections](/docs/en/operations/named-collections.md).

|

||||

|

||||

**Example**

|

||||

|

||||

|

||||

@ -237,7 +237,7 @@ The following settings can be set before query execution or placed into configur

|

||||

- `s3_max_get_rps` — Maximum GET requests per second rate before throttling. Default value is `0` (unlimited).

|

||||

- `s3_max_get_burst` — Max number of requests that can be issued simultaneously before hitting request per second limit. By default (`0` value) equals to `s3_max_get_rps`.

|

||||

- `s3_upload_part_size_multiply_factor` - Multiply `s3_min_upload_part_size` by this factor each time `s3_multiply_parts_count_threshold` parts were uploaded from a single write to S3. Default values is `2`.

|

||||

- `s3_upload_part_size_multiply_parts_count_threshold` - Each time this number of parts was uploaded to S3 `s3_min_upload_part_size multiplied` by `s3_upload_part_size_multiply_factor`. Default value us `500`.

|

||||

- `s3_upload_part_size_multiply_parts_count_threshold` - Each time this number of parts was uploaded to S3, `s3_min_upload_part_size` is multiplied by `s3_upload_part_size_multiply_factor`. Default value is `500`.

|

||||

- `s3_max_inflight_parts_for_one_file` - Limits the number of put requests that can be run concurrently for one object. Its number should be limited. The value `0` means unlimited. Default value is `20`. Each in-flight part has a buffer with size `s3_min_upload_part_size` for the first `s3_upload_part_size_multiply_factor` parts and more when file is big enough, see `upload_part_size_multiply_factor`. With default settings one uploaded file consumes not more than `320Mb` for a file which is less than `8G`. The consumption is greater for a larger file.

|

||||

|

||||

Security consideration: if malicious user can specify arbitrary S3 URLs, `s3_max_redirects` must be set to zero to avoid [SSRF](https://en.wikipedia.org/wiki/Server-side_request_forgery) attacks; or alternatively, `remote_host_filter` must be specified in server configuration.

|

||||

|

||||

224

docs/en/engines/table-engines/integrations/s3queue.md

Normal file

224

docs/en/engines/table-engines/integrations/s3queue.md

Normal file

@ -0,0 +1,224 @@

|

||||

---

|

||||

slug: /en/engines/table-engines/integrations/s3queue

|

||||

sidebar_position: 7

|

||||

sidebar_label: S3Queue

|

||||

---

|

||||

|

||||

# S3Queue Table Engine

|

||||

This engine provides integration with [Amazon S3](https://aws.amazon.com/s3/) ecosystem and allows streaming import. This engine is similar to the [Kafka](../../../engines/table-engines/integrations/kafka.md), [RabbitMQ](../../../engines/table-engines/integrations/rabbitmq.md) engines, but provides S3-specific features.

|

||||

|

||||

## Create Table {#creating-a-table}

|

||||

|

||||

``` sql

|

||||

CREATE TABLE s3_queue_engine_table (name String, value UInt32)

|

||||

ENGINE = S3Queue(path [, NOSIGN | aws_access_key_id, aws_secret_access_key,] format, [compression])

|

||||

[SETTINGS]

|

||||

[mode = 'unordered',]

|

||||

[after_processing = 'keep',]

|

||||

[keeper_path = '',]

|

||||

[s3queue_loading_retries = 0,]

|

||||

[s3queue_polling_min_timeout_ms = 1000,]

|

||||

[s3queue_polling_max_timeout_ms = 10000,]

|

||||

[s3queue_polling_backoff_ms = 0,]

|

||||

[s3queue_tracked_files_limit = 1000,]

|

||||

[s3queue_tracked_file_ttl_sec = 0,]

|

||||

[s3queue_polling_size = 50,]

|

||||

```

|

||||

|

||||

**Engine parameters**

|

||||

|

||||

- `path` — Bucket url with path to file. Supports following wildcards in readonly mode: `*`, `?`, `{abc,def}` and `{N..M}` where `N`, `M` — numbers, `'abc'`, `'def'` — strings. For more information see [below](#wildcards-in-path).

|

||||

- `NOSIGN` - If this keyword is provided in place of credentials, all the requests will not be signed.

|

||||

- `format` — The [format](../../../interfaces/formats.md#formats) of the file.

|

||||

- `aws_access_key_id`, `aws_secret_access_key` - Long-term credentials for the [AWS](https://aws.amazon.com/) account user. You can use these to authenticate your requests. Parameter is optional. If credentials are not specified, they are used from the configuration file. For more information see [Using S3 for Data Storage](../mergetree-family/mergetree.md#table_engine-mergetree-s3).

|

||||

- `compression` — Compression type. Supported values: `none`, `gzip/gz`, `brotli/br`, `xz/LZMA`, `zstd/zst`. Parameter is optional. By default, it will autodetect compression by file extension.

|

||||

|

||||

**Example**

|

||||

|

||||

```sql

|

||||

CREATE TABLE s3queue_engine_table (name String, value UInt32)

|

||||

ENGINE=S3Queue('https://clickhouse-public-datasets.s3.amazonaws.com/my-test-bucket-768/*', 'CSV', 'gzip')

|

||||

SETTINGS

|

||||

mode = 'ordred';

|

||||

```

|

||||

|

||||

Using named collections:

|

||||

|

||||

``` xml

|

||||

<clickhouse>

|

||||

<named_collections>

|

||||

<s3queue_conf>

|

||||

<url>'https://clickhouse-public-datasets.s3.amazonaws.com/my-test-bucket-768/*</url>

|

||||

<access_key_id>test<access_key_id>

|

||||

<secret_access_key>test</secret_access_key>

|

||||

</s3queue_conf>

|

||||

</named_collections>

|

||||

</clickhouse>

|

||||

```

|

||||

|

||||

```sql

|

||||

CREATE TABLE s3queue_engine_table (name String, value UInt32)

|

||||

ENGINE=S3Queue(s3queue_conf, format = 'CSV', compression_method = 'gzip')

|

||||

SETTINGS

|

||||

mode = 'ordred';

|

||||

```

|

||||

|

||||

## Settings {#s3queue-settings}

|

||||

|

||||

### mode {#mode}

|

||||

|

||||

Possible values:

|

||||

|

||||

- unordered — With unordered mode, the set of all already processed files is tracked with persistent nodes in ZooKeeper.

|

||||

- ordered — With ordered mode, only the max name of the successfully consumed file, and the names of files that will be retried after unsuccessful loading attempt are being stored in ZooKeeper.

|

||||

|

||||

Default value: `unordered`.

|

||||

|

||||

### after_processing {#after_processing}

|

||||

|

||||

Delete or keep file after successful processing.

|

||||

Possible values:

|

||||

|

||||

- keep.

|

||||

- delete.

|

||||

|

||||

Default value: `keep`.

|

||||

|

||||

### keeper_path {#keeper_path}

|

||||

|

||||

The path in ZooKeeper can be specified as a table engine setting or default path can be formed from the global configuration-provided path and table UUID.

|

||||

Possible values:

|

||||

|

||||

- String.

|

||||

|

||||

Default value: `/`.

|

||||

|

||||

### s3queue_loading_retries {#s3queue_loading_retries}

|

||||

|

||||

Retry file loading up to specified number of times. By default, there are no retries.

|

||||

Possible values:

|

||||

|

||||

- Positive integer.

|

||||

|

||||

Default value: `0`.

|

||||

|

||||

### s3queue_polling_min_timeout_ms {#s3queue_polling_min_timeout_ms}

|

||||

|

||||

Minimal timeout before next polling (in milliseconds).

|

||||

|

||||

Possible values:

|

||||

|

||||

- Positive integer.

|

||||

|

||||

Default value: `1000`.

|

||||

|

||||

### s3queue_polling_max_timeout_ms {#s3queue_polling_max_timeout_ms}

|

||||

|

||||

Maximum timeout before next polling (in milliseconds).

|

||||

|

||||

Possible values:

|

||||

|

||||

- Positive integer.

|

||||

|

||||

Default value: `10000`.

|

||||

|

||||

### s3queue_polling_backoff_ms {#s3queue_polling_backoff_ms}

|

||||

|

||||

Polling backoff (in milliseconds).

|

||||

|

||||

Possible values:

|

||||

|

||||

- Positive integer.

|

||||

|

||||

Default value: `0`.

|

||||

|

||||

### s3queue_tracked_files_limit {#s3queue_tracked_files_limit}

|

||||

|

||||

Allows to limit the number of Zookeeper nodes if the 'unordered' mode is used, does nothing for 'ordered' mode.

|

||||

If limit reached the oldest processed files will be deleted from ZooKeeper node and processed again.

|

||||

|

||||

Possible values:

|

||||

|

||||

- Positive integer.

|

||||

|

||||

Default value: `1000`.

|

||||

|

||||

### s3queue_tracked_file_ttl_sec {#s3queue_tracked_file_ttl_sec}

|

||||

|

||||

Maximum number of seconds to store processed files in ZooKeeper node (store forever by default) for 'unordered' mode, does nothing for 'ordered' mode.

|

||||

After the specified number of seconds, the file will be re-imported.

|

||||

|

||||

Possible values:

|

||||

|

||||

- Positive integer.

|

||||

|

||||

Default value: `0`.

|

||||

|

||||

### s3queue_polling_size {#s3queue_polling_size}

|

||||

|

||||

Maximum files to fetch from S3 with SELECT or in background task.

|

||||

Engine takes files for processing from S3 in batches.

|

||||

We limit the batch size to increase concurrency if multiple table engines with the same `keeper_path` consume files from the same path.

|

||||

|

||||

Possible values:

|

||||

|

||||

- Positive integer.

|

||||

|

||||

Default value: `50`.

|

||||

|

||||

|

||||

## S3-related Settings {#s3-settings}

|

||||

|

||||

Engine supports all s3 related settings. For more information about S3 settings see [here](../../../engines/table-engines/integrations/s3.md).

|

||||

|

||||

|

||||

## Description {#description}

|

||||

|

||||

`SELECT` is not particularly useful for streaming import (except for debugging), because each file can be imported only once. It is more practical to create real-time threads using [materialized views](../../../sql-reference/statements/create/view.md). To do this:

|

||||

|

||||

1. Use the engine to create a table for consuming from specified path in S3 and consider it a data stream.

|

||||

2. Create a table with the desired structure.

|

||||

3. Create a materialized view that converts data from the engine and puts it into a previously created table.

|

||||

|

||||

When the `MATERIALIZED VIEW` joins the engine, it starts collecting data in the background.

|

||||

|

||||

Example:

|

||||

|

||||

``` sql

|

||||

CREATE TABLE s3queue_engine_table (name String, value UInt32)

|

||||

ENGINE=S3Queue('https://clickhouse-public-datasets.s3.amazonaws.com/my-test-bucket-768/*', 'CSV', 'gzip')

|

||||

SETTINGS

|

||||

mode = 'unordred',

|

||||

keeper_path = '/clickhouse/s3queue/';

|

||||

|

||||

CREATE TABLE stats (name String, value UInt32)

|

||||

ENGINE = MergeTree() ORDER BY name;

|

||||

|

||||

CREATE MATERIALIZED VIEW consumer TO stats

|

||||

AS SELECT name, value FROM s3queue_engine_table;

|

||||

|

||||

SELECT * FROM stats ORDER BY name;

|

||||

```

|

||||

|

||||

## Virtual columns {#virtual-columns}

|

||||

|

||||

- `_path` — Path to the file.

|

||||

- `_file` — Name of the file.

|

||||

|

||||

For more information about virtual columns see [here](../../../engines/table-engines/index.md#table_engines-virtual_columns).

|

||||

|

||||

|

||||

## Wildcards In Path {#wildcards-in-path}

|

||||

|

||||

`path` argument can specify multiple files using bash-like wildcards. For being processed file should exist and match to the whole path pattern. Listing of files is determined during `SELECT` (not at `CREATE` moment).

|

||||

|

||||

- `*` — Substitutes any number of any characters except `/` including empty string.

|

||||

- `?` — Substitutes any single character.

|

||||

- `{some_string,another_string,yet_another_one}` — Substitutes any of strings `'some_string', 'another_string', 'yet_another_one'`.

|

||||

- `{N..M}` — Substitutes any number in range from N to M including both borders. N and M can have leading zeroes e.g. `000..078`.

|

||||

|

||||

Constructions with `{}` are similar to the [remote](../../../sql-reference/table-functions/remote.md) table function.

|

||||

|

||||

:::note

|

||||

If the listing of files contains number ranges with leading zeros, use the construction with braces for each digit separately or use `?`.

|

||||

:::

|

||||

@ -193,6 +193,19 @@ index creation, `L2Distance` is used as default. Parameter `NumTrees` is the num

|

||||

specified: 100). Higher values of `NumTree` mean more accurate search results but slower index creation / query times (approximately

|

||||

linearly) as well as larger index sizes.

|

||||

|

||||

`L2Distance` is also called Euclidean distance, the Euclidean distance between two points in Euclidean space is the length of a line segment between the two points.

|

||||

For example: If we have point P(p1,p2), Q(q1,q2), their distance will be d(p,q)

|

||||

|

||||

|

||||

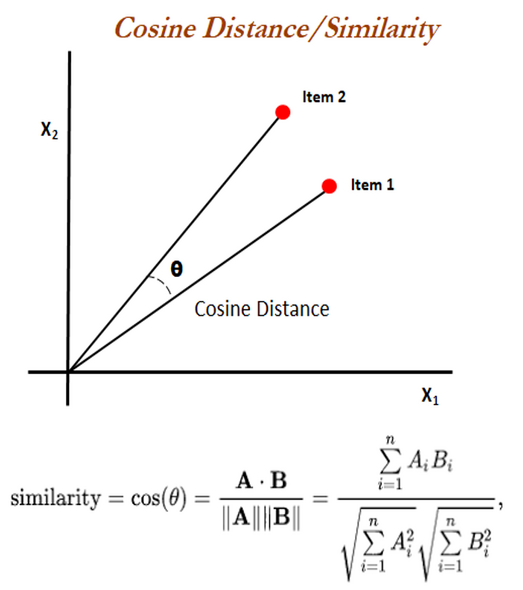

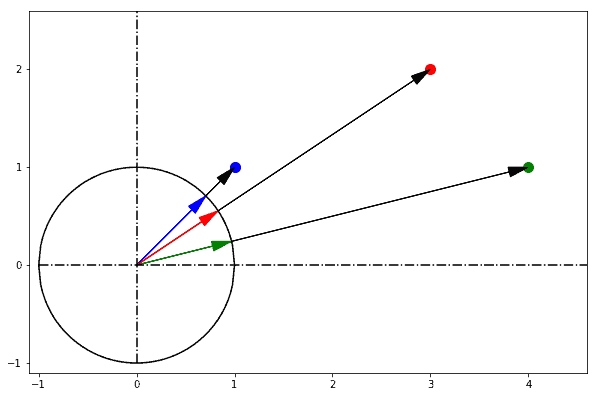

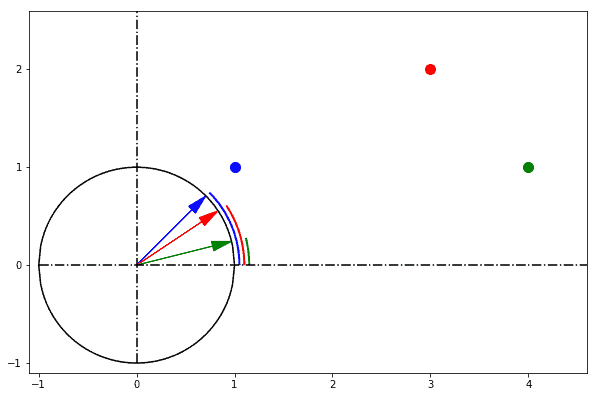

`cosineDistance` also called cosine similarity is a measure of similarity between two non-zero vectors defined in an inner product space. Cosine similarity is the cosine of the angle between the vectors; that is, it is the dot product of the vectors divided by the product of their lengths.

|

||||

|

||||

|

||||

The Euclidean distance corresponds to the L2-norm of a difference between vectors. The cosine similarity is proportional to the dot product of two vectors and inversely proportional to the product of their magnitudes.

|

||||

|

||||

In one sentence: cosine similarity care only about the angle between them, but do not care about the "distance" we normally think.

|

||||

|

||||

|

||||

|

||||

:::note

|

||||

Indexes over columns of type `Array` will generally work faster than indexes on `Tuple` columns. All arrays **must** have same length. Use

|

||||

[CONSTRAINT](/docs/en/sql-reference/statements/create/table.md#constraints) to avoid errors. For example, `CONSTRAINT constraint_name_1

|

||||

|

||||

@ -106,4 +106,4 @@ For partitioning by month, use the `toYYYYMM(date_column)` expression, where `da

|

||||

## Storage Settings {#storage-settings}

|

||||

|

||||

- [engine_url_skip_empty_files](/docs/en/operations/settings/settings.md#engine_url_skip_empty_files) - allows to skip empty files while reading. Disabled by default.

|

||||

- [disable_url_encoding](/docs/en/operations/settings/settings.md#disable_url_encoding) -allows to disable decoding/encoding path in uri. Disabled by default.

|

||||

- [enable_url_encoding](/docs/en/operations/settings/settings.md#enable_url_encoding) - allows to enable/disable decoding/encoding path in uri. Enabled by default.

|

||||

|

||||

@ -1723,6 +1723,34 @@ You can select data from a ClickHouse table and save them into some file in the

|

||||

``` bash

|

||||

$ clickhouse-client --query = "SELECT * FROM test.hits FORMAT CapnProto SETTINGS format_schema = 'schema:Message'"

|

||||

```

|

||||

|

||||

### Using autogenerated schema {#using-autogenerated-capn-proto-schema}

|

||||

|

||||

If you don't have an external CapnProto schema for your data, you can still output/input data in CapnProto format using autogenerated schema.

|

||||

For example:

|

||||

|

||||

```sql

|

||||

SELECT * FROM test.hits format CapnProto SETTINGS format_capn_proto_use_autogenerated_schema=1

|

||||

```

|

||||

|

||||

In this case ClickHouse will autogenerate CapnProto schema according to the table structure using function [structureToCapnProtoSchema](../sql-reference/functions/other-functions.md#structure_to_capn_proto_schema) and will use this schema to serialize data in CapnProto format.

|

||||

|

||||

You can also read CapnProto file with autogenerated schema (in this case the file must be created using the same schema):

|

||||

|

||||

```bash

|

||||

$ cat hits.bin | clickhouse-client --query "INSERT INTO test.hits SETTINGS format_capn_proto_use_autogenerated_schema=1 FORMAT CapnProto"

|

||||

```

|

||||

|

||||

The setting [format_capn_proto_use_autogenerated_schema](../operations/settings/settings-formats.md#format_capn_proto_use_autogenerated_schema) is enabled by default and applies if [format_schema](../operations/settings/settings-formats.md#formatschema-format-schema) is not set.

|

||||

|

||||

You can also save autogenerated schema in the file during input/output using setting [output_format_schema](../operations/settings/settings-formats.md#outputformatschema-output-format-schema). For example:

|

||||

|

||||

```sql

|

||||

SELECT * FROM test.hits format CapnProto SETTINGS format_capn_proto_use_autogenerated_schema=1, output_format_schema='path/to/schema/schema.capnp'

|

||||

```

|

||||

|

||||

In this case autogenerated CapnProto schema will be saved in file `path/to/schema/schema.capnp`.

|

||||

|

||||

## Prometheus {#prometheus}

|

||||

|

||||

Expose metrics in [Prometheus text-based exposition format](https://prometheus.io/docs/instrumenting/exposition_formats/#text-based-format).

|

||||

@ -1861,6 +1889,33 @@ ClickHouse inputs and outputs protobuf messages in the `length-delimited` format

|

||||

It means before every message should be written its length as a [varint](https://developers.google.com/protocol-buffers/docs/encoding#varints).

|

||||

See also [how to read/write length-delimited protobuf messages in popular languages](https://cwiki.apache.org/confluence/display/GEODE/Delimiting+Protobuf+Messages).

|

||||

|

||||

### Using autogenerated schema {#using-autogenerated-protobuf-schema}

|

||||

|

||||

If you don't have an external Protobuf schema for your data, you can still output/input data in Protobuf format using autogenerated schema.

|

||||

For example:

|

||||

|

||||

```sql

|

||||

SELECT * FROM test.hits format Protobuf SETTINGS format_protobuf_use_autogenerated_schema=1

|

||||

```

|

||||

|

||||

In this case ClickHouse will autogenerate Protobuf schema according to the table structure using function [structureToProtobufSchema](../sql-reference/functions/other-functions.md#structure_to_protobuf_schema) and will use this schema to serialize data in Protobuf format.

|

||||

|

||||

You can also read Protobuf file with autogenerated schema (in this case the file must be created using the same schema):

|

||||

|

||||

```bash

|

||||

$ cat hits.bin | clickhouse-client --query "INSERT INTO test.hits SETTINGS format_protobuf_use_autogenerated_schema=1 FORMAT Protobuf"

|

||||

```

|

||||

|

||||

The setting [format_protobuf_use_autogenerated_schema](../operations/settings/settings-formats.md#format_protobuf_use_autogenerated_schema) is enabled by default and applies if [format_schema](../operations/settings/settings-formats.md#formatschema-format-schema) is not set.

|

||||

|

||||

You can also save autogenerated schema in the file during input/output using setting [output_format_schema](../operations/settings/settings-formats.md#outputformatschema-output-format-schema). For example:

|

||||

|

||||

```sql

|

||||

SELECT * FROM test.hits format Protobuf SETTINGS format_protobuf_use_autogenerated_schema=1, output_format_schema='path/to/schema/schema.proto'

|

||||

```

|

||||

|

||||

In this case autogenerated Protobuf schema will be saved in file `path/to/schema/schema.capnp`.

|

||||

|

||||

## ProtobufSingle {#protobufsingle}

|

||||

|

||||

Same as [Protobuf](#protobuf) but for storing/parsing single Protobuf message without length delimiters.

|

||||

@ -2076,7 +2131,6 @@ To exchange data with Hadoop, you can use [HDFS table engine](/docs/en/engines/t

|

||||

|

||||

- [output_format_parquet_row_group_size](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_row_group_size) - row group size in rows while data output. Default value - `1000000`.

|

||||

- [output_format_parquet_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_string_as_string) - use Parquet String type instead of Binary for String columns. Default value - `false`.

|

||||

- [input_format_parquet_import_nested](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_import_nested) - allow inserting array of structs into [Nested](/docs/en/sql-reference/data-types/nested-data-structures/index.md) table in Parquet input format. Default value - `false`.

|

||||

- [input_format_parquet_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_case_insensitive_column_matching) - ignore case when matching Parquet columns with ClickHouse columns. Default value - `false`.

|

||||

- [input_format_parquet_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_allow_missing_columns) - allow missing columns while reading Parquet data. Default value - `false`.

|

||||

- [input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Parquet format. Default value - `false`.

|

||||

@ -2281,7 +2335,6 @@ $ clickhouse-client --query="SELECT * FROM {some_table} FORMAT Arrow" > {filenam

|

||||

|

||||

- [output_format_arrow_low_cardinality_as_dictionary](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_low_cardinality_as_dictionary) - enable output ClickHouse LowCardinality type as Dictionary Arrow type. Default value - `false`.

|

||||

- [output_format_arrow_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_string_as_string) - use Arrow String type instead of Binary for String columns. Default value - `false`.

|

||||

- [input_format_arrow_import_nested](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_import_nested) - allow inserting array of structs into Nested table in Arrow input format. Default value - `false`.

|

||||

- [input_format_arrow_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_case_insensitive_column_matching) - ignore case when matching Arrow columns with ClickHouse columns. Default value - `false`.

|

||||

- [input_format_arrow_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_allow_missing_columns) - allow missing columns while reading Arrow data. Default value - `false`.

|

||||

- [input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Arrow format. Default value - `false`.

|

||||

@ -2347,7 +2400,6 @@ $ clickhouse-client --query="SELECT * FROM {some_table} FORMAT ORC" > {filename.

|

||||

|

||||

- [output_format_arrow_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_string_as_string) - use Arrow String type instead of Binary for String columns. Default value - `false`.

|

||||

- [output_format_orc_compression_method](/docs/en/operations/settings/settings-formats.md/#output_format_orc_compression_method) - compression method used in output ORC format. Default value - `none`.

|

||||

- [input_format_arrow_import_nested](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_import_nested) - allow inserting array of structs into Nested table in Arrow input format. Default value - `false`.

|

||||

- [input_format_arrow_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_case_insensitive_column_matching) - ignore case when matching Arrow columns with ClickHouse columns. Default value - `false`.

|

||||

- [input_format_arrow_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_allow_missing_columns) - allow missing columns while reading Arrow data. Default value - `false`.

|

||||

- [input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Arrow format. Default value - `false`.

|

||||

|

||||

@ -0,0 +1,26 @@

|

||||

---

|

||||

slug: /en/operations/optimizing-performance/profile-guided-optimization

|

||||

sidebar_position: 54

|

||||

sidebar_label: Profile Guided Optimization (PGO)

|

||||

---

|

||||

import SelfManaged from '@site/docs/en/_snippets/_self_managed_only_no_roadmap.md';

|

||||

|

||||

# Profile Guided Optimization

|

||||

|

||||

Profile-Guided Optimization (PGO) is a compiler optimization technique where a program is optimized based on the runtime profile.

|

||||

|

||||

According to the tests, PGO helps with achieving better performance for ClickHouse. According to the tests, we see improvements up to 15% in QPS on the ClickBench test suite. The more detailed results are available [here](https://pastebin.com/xbue3HMU). The performance benefits depend on your typical workload - you can get better or worse results.

|

||||

|

||||

More information about PGO in ClickHouse you can read in the corresponding GitHub [issue](https://github.com/ClickHouse/ClickHouse/issues/44567).

|

||||

|

||||

## How to build ClickHouse with PGO?

|

||||

|

||||

There are two major kinds of PGO: [Instrumentation](https://clang.llvm.org/docs/UsersManual.html#using-sampling-profilers) and [Sampling](https://clang.llvm.org/docs/UsersManual.html#using-sampling-profilers) (also known as AutoFDO). In this guide is described the Instrumentation PGO with ClickHouse.

|

||||

|

||||

1. Build ClickHouse in Instrumented mode. In Clang it can be done via passing `-fprofile-instr-generate` option to `CXXFLAGS`.

|

||||

2. Run instrumented ClickHouse on a sample workload. Here you need to use your usual workload. One of the approaches could be using [ClickBench](https://github.com/ClickHouse/ClickBench) as a sample workload. ClickHouse in the instrumentation mode could work slowly so be ready for that and do not run instrumented ClickHouse in performance-critical environments.

|

||||

3. Recompile ClickHouse once again with `-fprofile-instr-use` compiler flags and profiles that are collected from the previous step.

|

||||

|

||||

A more detailed guide on how to apply PGO is in the Clang [documentation](https://clang.llvm.org/docs/UsersManual.html#profile-guided-optimization).

|

||||

|

||||

If you are going to collect a sample workload directly from a production environment, we recommend trying to use Sampling PGO.

|

||||

@ -321,6 +321,10 @@ If both `input_format_allow_errors_num` and `input_format_allow_errors_ratio` ar

|

||||

|

||||

This parameter is useful when you are using formats that require a schema definition, such as [Cap’n Proto](https://capnproto.org/) or [Protobuf](https://developers.google.com/protocol-buffers/). The value depends on the format.

|

||||

|

||||

## output_format_schema {#output-format-schema}

|

||||

|

||||

The path to the file where the automatically generated schema will be saved in [Cap’n Proto](../../interfaces/formats.md#capnproto-capnproto) or [Protobuf](../../interfaces/formats.md#protobuf-protobuf) formats.

|

||||

|

||||

## output_format_enable_streaming {#output_format_enable_streaming}

|

||||

|

||||

Enable streaming in output formats that support it.

|

||||

@ -1108,17 +1112,6 @@ Default value: 1.

|

||||

|

||||

## Arrow format settings {#arrow-format-settings}

|

||||

|

||||

### input_format_arrow_import_nested {#input_format_arrow_import_nested}

|

||||

|

||||

Enables or disables the ability to insert the data into [Nested](../../sql-reference/data-types/nested-data-structures/index.md) columns as an array of structs in [Arrow](../../interfaces/formats.md/#data_types-matching-arrow) input format.

|

||||

|

||||

Possible values:

|

||||

|

||||

- 0 — Data can not be inserted into `Nested` columns as an array of structs.

|

||||

- 1 — Data can be inserted into `Nested` columns as an array of structs.

|

||||

|

||||

Default value: `0`.

|

||||

|

||||

### input_format_arrow_case_insensitive_column_matching {#input_format_arrow_case_insensitive_column_matching}

|

||||

|

||||

Ignore case when matching Arrow column names with ClickHouse column names.

|

||||

@ -1168,17 +1161,6 @@ Default value: `lz4_frame`.

|

||||

|

||||

## ORC format settings {#orc-format-settings}

|

||||

|

||||

### input_format_orc_import_nested {#input_format_orc_import_nested}

|

||||

|

||||

Enables or disables the ability to insert the data into [Nested](../../sql-reference/data-types/nested-data-structures/index.md) columns as an array of structs in [ORC](../../interfaces/formats.md/#data-format-orc) input format.

|

||||

|

||||

Possible values:

|

||||

|

||||

- 0 — Data can not be inserted into `Nested` columns as an array of structs.

|

||||

- 1 — Data can be inserted into `Nested` columns as an array of structs.

|

||||

|

||||

Default value: `0`.

|

||||

|

||||

### input_format_orc_row_batch_size {#input_format_orc_row_batch_size}

|

||||

|

||||

Batch size when reading ORC stripes.

|

||||

@ -1217,17 +1199,6 @@ Default value: `none`.

|

||||

|

||||

## Parquet format settings {#parquet-format-settings}

|

||||

|

||||

### input_format_parquet_import_nested {#input_format_parquet_import_nested}

|

||||

|

||||

Enables or disables the ability to insert the data into [Nested](../../sql-reference/data-types/nested-data-structures/index.md) columns as an array of structs in [Parquet](../../interfaces/formats.md/#data-format-parquet) input format.

|

||||

|

||||

Possible values:

|

||||

|

||||

- 0 — Data can not be inserted into `Nested` columns as an array of structs.

|

||||

- 1 — Data can be inserted into `Nested` columns as an array of structs.

|

||||

|

||||

Default value: `0`.

|

||||

|

||||

### input_format_parquet_case_insensitive_column_matching {#input_format_parquet_case_insensitive_column_matching}

|

||||

|

||||

Ignore case when matching Parquet column names with ClickHouse column names.

|

||||

@ -1330,6 +1301,11 @@ When serializing Nullable columns with Google wrappers, serialize default values

|

||||

|

||||

Disabled by default.

|

||||

|

||||

### format_protobuf_use_autogenerated_schema {#format_capn_proto_use_autogenerated_schema}

|

||||

|

||||

Use autogenerated Protobuf schema when [format_schema](#formatschema-format-schema) is not set.

|

||||

The schema is generated from ClickHouse table structure using function [structureToProtobufSchema](../../sql-reference/functions/other-functions.md#structure_to_protobuf_schema)

|

||||

|

||||

## Avro format settings {#avro-format-settings}

|

||||

|

||||

### input_format_avro_allow_missing_fields {#input_format_avro_allow_missing_fields}

|

||||

@ -1626,6 +1602,11 @@ Possible values:

|

||||

|

||||

Default value: `'by_values'`.

|

||||

|

||||

### format_capn_proto_use_autogenerated_schema {#format_capn_proto_use_autogenerated_schema}

|

||||

|

||||

Use autogenerated CapnProto schema when [format_schema](#formatschema-format-schema) is not set.

|

||||

The schema is generated from ClickHouse table structure using function [structureToCapnProtoSchema](../../sql-reference/functions/other-functions.md#structure_to_capnproto_schema)

|

||||

|

||||

## MySQLDump format settings {#musqldump-format-settings}

|

||||

|

||||

### input_format_mysql_dump_table_name (#input_format_mysql_dump_table_name)

|

||||

|

||||

@ -3468,11 +3468,11 @@ Possible values:

|

||||

|

||||

Default value: `0`.

|

||||

|

||||

## disable_url_encoding {#disable_url_encoding}

|

||||

## enable_url_encoding {#enable_url_encoding}

|

||||

|

||||

Allows to disable decoding/encoding path in uri in [URL](../../engines/table-engines/special/url.md) engine tables.

|

||||

Allows to enable/disable decoding/encoding path in uri in [URL](../../engines/table-engines/special/url.md) engine tables.

|

||||

|

||||

Disabled by default.

|

||||

Enabled by default.

|

||||

|

||||

## database_atomic_wait_for_drop_and_detach_synchronously {#database_atomic_wait_for_drop_and_detach_synchronously}

|

||||

|

||||

@ -4578,3 +4578,28 @@ Type: Int64

|

||||

|

||||

Default: 0

|

||||

|

||||

## precise_float_parsing {#precise_float_parsing}

|

||||

|

||||

Switches [Float32/Float64](../../sql-reference/data-types/float.md) parsing algorithms:

|

||||

* If the value is `1`, then precise method is used. It is slower than fast method, but it always returns a number that is the closest machine representable number to the input.

|

||||

* Otherwise, fast method is used (default). It usually returns the same value as precise, but in rare cases result may differ by one or two least significant digits.

|

||||

|

||||

Possible values: `0`, `1`.

|

||||

|

||||

Default value: `0`.

|

||||

|

||||

Example:

|

||||

|

||||

```sql

|

||||

SELECT toFloat64('1.7091'), toFloat64('1.5008753E7') SETTINGS precise_float_parsing = 0;

|

||||

|

||||

┌─toFloat64('1.7091')─┬─toFloat64('1.5008753E7')─┐

|

||||

│ 1.7090999999999998 │ 15008753.000000002 │

|

||||

└─────────────────────┴──────────────────────────┘

|

||||

|

||||

SELECT toFloat64('1.7091'), toFloat64('1.5008753E7') SETTINGS precise_float_parsing = 1;

|

||||

|

||||

┌─toFloat64('1.7091')─┬─toFloat64('1.5008753E7')─┐

|

||||

│ 1.7091 │ 15008753 │

|

||||

└─────────────────────┴──────────────────────────┘

|

||||

```

|

||||

|

||||

@ -48,7 +48,7 @@ Columns:

|

||||

- `read_rows` ([UInt64](../../sql-reference/data-types/int-uint.md#uint-ranges)) — Total number of rows read from all tables and table functions participated in query. It includes usual subqueries, subqueries for `IN` and `JOIN`. For distributed queries `read_rows` includes the total number of rows read at all replicas. Each replica sends it’s `read_rows` value, and the server-initiator of the query summarizes all received and local values. The cache volumes do not affect this value.

|

||||

- `read_bytes` ([UInt64](../../sql-reference/data-types/int-uint.md#uint-ranges)) — Total number of bytes read from all tables and table functions participated in query. It includes usual subqueries, subqueries for `IN` and `JOIN`. For distributed queries `read_bytes` includes the total number of rows read at all replicas. Each replica sends it’s `read_bytes` value, and the server-initiator of the query summarizes all received and local values. The cache volumes do not affect this value.

|

||||

- `written_rows` ([UInt64](../../sql-reference/data-types/int-uint.md#uint-ranges)) — For `INSERT` queries, the number of written rows. For other queries, the column value is 0.

|

||||

- `written_bytes` ([UInt64](../../sql-reference/data-types/int-uint.md#uint-ranges)) — For `INSERT` queries, the number of written bytes. For other queries, the column value is 0.

|

||||

- `written_bytes` ([UInt64](../../sql-reference/data-types/int-uint.md#uint-ranges)) — For `INSERT` queries, the number of written bytes (uncompressed). For other queries, the column value is 0.

|

||||

- `result_rows` ([UInt64](../../sql-reference/data-types/int-uint.md#uint-ranges)) — Number of rows in a result of the `SELECT` query, or a number of rows in the `INSERT` query.

|

||||

- `result_bytes` ([UInt64](../../sql-reference/data-types/int-uint.md#uint-ranges)) — RAM volume in bytes used to store a query result.

|

||||

- `memory_usage` ([UInt64](../../sql-reference/data-types/int-uint.md#uint-ranges)) — Memory consumption by the query.

|

||||

|

||||

@ -2552,3 +2552,187 @@ Result:

|

||||

|

||||

This function can be used together with [generateRandom](../../sql-reference/table-functions/generate.md) to generate completely random tables.

|

||||

|

||||

## structureToCapnProtoSchema {#structure_to_capn_proto_schema}

|

||||

|

||||

Converts ClickHouse table structure to CapnProto schema.

|

||||

|

||||

**Syntax**

|

||||

|

||||

``` sql

|

||||

structureToCapnProtoSchema(structure)

|

||||

```

|

||||

|

||||

**Arguments**

|

||||

|

||||

- `structure` — Table structure in a format `column1_name column1_type, column2_name column2_type, ...`.

|

||||

- `root_struct_name` — Name for root struct in CapnProto schema. Default value - `Message`;

|

||||

|

||||

**Returned value**

|

||||

|

||||

- CapnProto schema

|

||||

|

||||

Type: [String](../../sql-reference/data-types/string.md).

|

||||

|

||||

**Examples**

|

||||

|

||||

Query:

|

||||

|

||||

``` sql

|

||||

SELECT structureToCapnProtoSchema('column1 String, column2 UInt32, column3 Array(String)') FORMAT RawBLOB

|

||||

```

|

||||

|

||||

Result:

|

||||

|

||||

``` text

|

||||

@0xf96402dd754d0eb7;

|

||||

|

||||

struct Message

|

||||

{

|

||||

column1 @0 : Data;

|

||||

column2 @1 : UInt32;

|

||||

column3 @2 : List(Data);

|

||||

}

|

||||

```

|

||||

|

||||

Query:

|

||||

|

||||

``` sql

|

||||

SELECT structureToCapnProtoSchema('column1 Nullable(String), column2 Tuple(element1 UInt32, element2 Array(String)), column3 Map(String, String)') FORMAT RawBLOB

|

||||

```

|

||||

|

||||

Result:

|

||||

|

||||

``` text

|

||||

@0xd1c8320fecad2b7f;

|

||||

|

||||

struct Message

|

||||

{

|

||||

struct Column1

|

||||

{

|

||||

union

|

||||

{

|

||||

value @0 : Data;

|

||||

null @1 : Void;

|

||||

}

|

||||

}

|

||||

column1 @0 : Column1;

|

||||

struct Column2

|

||||

{

|

||||

element1 @0 : UInt32;

|

||||

element2 @1 : List(Data);

|

||||

}

|

||||

column2 @1 : Column2;

|

||||

struct Column3

|

||||

{

|

||||

struct Entry

|

||||

{

|

||||

key @0 : Data;

|

||||

value @1 : Data;

|

||||

}

|

||||

entries @0 : List(Entry);

|

||||

}

|

||||

column3 @2 : Column3;

|

||||

}

|

||||

```

|

||||

|

||||

Query:

|

||||

|

||||

``` sql

|

||||

SELECT structureToCapnProtoSchema('column1 String, column2 UInt32', 'Root') FORMAT RawBLOB

|

||||

```

|

||||

|

||||

Result:

|

||||

|

||||

``` text

|

||||

@0x96ab2d4ab133c6e1;

|

||||

|

||||

struct Root

|

||||

{

|

||||

column1 @0 : Data;

|

||||

column2 @1 : UInt32;

|

||||

}

|

||||

```

|

||||

|

||||

## structureToProtobufSchema {#structure_to_protobuf_schema}

|

||||

|

||||

Converts ClickHouse table structure to Protobuf schema.

|

||||

|

||||

**Syntax**

|

||||

|

||||

``` sql

|

||||

structureToProtobufSchema(structure)

|

||||

```

|

||||

|

||||

**Arguments**

|

||||

|

||||

- `structure` — Table structure in a format `column1_name column1_type, column2_name column2_type, ...`.

|

||||

- `root_message_name` — Name for root message in Protobuf schema. Default value - `Message`;

|

||||

|

||||

**Returned value**

|

||||

|

||||

- Protobuf schema

|

||||

|

||||

Type: [String](../../sql-reference/data-types/string.md).

|

||||

|

||||

**Examples**

|

||||

|

||||

Query:

|

||||

|

||||

``` sql

|

||||

SELECT structureToProtobufSchema('column1 String, column2 UInt32, column3 Array(String)') FORMAT RawBLOB

|

||||

```

|

||||

|

||||

Result:

|

||||

|

||||

``` text

|

||||

syntax = "proto3";

|

||||

|

||||

message Message

|

||||

{

|

||||

bytes column1 = 1;

|

||||

uint32 column2 = 2;

|

||||

repeated bytes column3 = 3;

|

||||

}

|

||||

```

|

||||

|

||||

Query:

|

||||

|

||||

``` sql

|

||||

SELECT structureToProtobufSchema('column1 Nullable(String), column2 Tuple(element1 UInt32, element2 Array(String)), column3 Map(String, String)') FORMAT RawBLOB

|

||||

```

|

||||

|

||||

Result:

|

||||

|

||||

``` text

|

||||

syntax = "proto3";

|

||||

|

||||

message Message

|

||||

{

|

||||

bytes column1 = 1;

|

||||

message Column2

|

||||

{

|

||||

uint32 element1 = 1;

|

||||

repeated bytes element2 = 2;

|

||||

}

|

||||

Column2 column2 = 2;

|

||||

map<string, bytes> column3 = 3;

|

||||

}

|

||||

```

|

||||

|

||||

Query:

|

||||

|

||||

``` sql

|

||||

SELECT structureToProtobufSchema('column1 String, column2 UInt32', 'Root') FORMAT RawBLOB

|

||||

```

|

||||

|

||||

Result:

|

||||

|

||||

``` text

|

||||

syntax = "proto3";

|

||||

|

||||

message Root

|

||||

{

|

||||

bytes column1 = 1;

|

||||

uint32 column2 = 2;

|

||||

}

|

||||

```

|

||||

|

||||

@ -36,6 +36,8 @@ These `ALTER` statements modify entities related to role-based access control:

|

||||

|

||||

[ALTER TABLE ... MODIFY COMMENT](/docs/en/sql-reference/statements/alter/comment.md) statement adds, modifies, or removes comments to the table, regardless if it was set before or not.

|

||||

|

||||

[ALTER NAMED COLLECTION](/docs/en/sql-reference/statements/alter/named-collection.md) statement modifies [Named Collections](/docs/en/operations/named-collections.md).

|

||||

|

||||

## Mutations

|

||||

|

||||

`ALTER` queries that are intended to manipulate table data are implemented with a mechanism called “mutations”, most notably [ALTER TABLE … DELETE](/docs/en/sql-reference/statements/alter/delete.md) and [ALTER TABLE … UPDATE](/docs/en/sql-reference/statements/alter/update.md). They are asynchronous background processes similar to merges in [MergeTree](/docs/en/engines/table-engines/mergetree-family/index.md) tables that to produce new “mutated” versions of parts.

|

||||

|

||||

30

docs/en/sql-reference/statements/alter/named-collection.md

Normal file

30

docs/en/sql-reference/statements/alter/named-collection.md

Normal file

@ -0,0 +1,30 @@

|

||||

---

|

||||

slug: /en/sql-reference/statements/alter/named-collection

|

||||

sidebar_label: NAMED COLLECTION

|

||||

---

|

||||

|

||||

# ALTER NAMED COLLECTION

|

||||

|

||||

This query intends to modify already existing named collections.

|

||||

|

||||

**Syntax**

|

||||

|

||||

```sql

|

||||

ALTER NAMED COLLECTION [IF EXISTS] name [ON CLUSTER cluster]

|

||||

[ SET

|

||||

key_name1 = 'some value',

|

||||

key_name2 = 'some value',

|

||||

key_name3 = 'some value',

|

||||

... ] |

|

||||

[ DELETE key_name4, key_name5, ... ]

|

||||

```

|

||||

|

||||

**Example**

|

||||

|

||||

```sql

|

||||

CREATE NAMED COLLECTION foobar AS a = '1', b = '2';

|

||||

|

||||

ALTER NAMED COLLECTION foobar SET a = '2', c = '3';

|

||||

|

||||

ALTER NAMED COLLECTION foobar DELETE b;

|

||||

```

|

||||

@ -8,13 +8,14 @@ sidebar_label: CREATE

|

||||

|

||||

Create queries make a new entity of one of the following kinds:

|

||||

|

||||

- [DATABASE](../../../sql-reference/statements/create/database.md)