mirror of

https://github.com/ClickHouse/ClickHouse.git

synced 2024-11-30 19:42:00 +00:00

Merged with master.

This commit is contained in:

commit

937cb2db29

@ -1,24 +1,21 @@

|

||||

---

|

||||

BasedOnStyle: WebKit

|

||||

Language: Cpp

|

||||

BasedOnStyle: WebKit

|

||||

Language: Cpp

|

||||

AlignAfterOpenBracket: false

|

||||

BreakBeforeBraces: Custom

|

||||

BraceWrapping: {

|

||||

AfterClass: 'true'

|

||||

AfterControlStatement: 'true'

|

||||

AfterEnum : 'true'

|

||||

AfterFunction : 'true'

|

||||

AfterNamespace : 'true'

|

||||

AfterStruct : 'true'

|

||||

AfterUnion : 'true'

|

||||

BeforeCatch : 'true'

|

||||

BeforeElse : 'true'

|

||||

IndentBraces : 'false'

|

||||

}

|

||||

|

||||

BraceWrapping:

|

||||

AfterClass: true

|

||||

AfterControlStatement: true

|

||||

AfterEnum: true

|

||||

AfterFunction: true

|

||||

AfterNamespace: true

|

||||

AfterStruct: true

|

||||

AfterUnion: true

|

||||

BeforeCatch: true

|

||||

BeforeElse: true

|

||||

IndentBraces: false

|

||||

BreakConstructorInitializersBeforeComma: false

|

||||

Cpp11BracedListStyle: true

|

||||

ColumnLimit: 140

|

||||

ColumnLimit: 140

|

||||

ConstructorInitializerAllOnOneLineOrOnePerLine: true

|

||||

ExperimentalAutoDetectBinPacking: true

|

||||

UseTab: Never

|

||||

@ -28,36 +25,35 @@ Standard: Cpp11

|

||||

PointerAlignment: Middle

|

||||

MaxEmptyLinesToKeep: 2

|

||||

KeepEmptyLinesAtTheStartOfBlocks: false

|

||||

#AllowShortFunctionsOnASingleLine: Inline

|

||||

AllowShortFunctionsOnASingleLine: Empty

|

||||

AlwaysBreakTemplateDeclarations: true

|

||||

IndentCaseLabels: true

|

||||

#SpaceAfterTemplateKeyword: true

|

||||

SpaceAfterTemplateKeyword: true

|

||||

SortIncludes: true

|

||||

IncludeCategories:

|

||||

- Regex: '^<[a-z_]+>'

|

||||

Priority: 1

|

||||

- Regex: '^<[a-z_]+.h>'

|

||||

Priority: 2

|

||||

- Regex: '^["<](common|ext|mysqlxx|daemon|zkutil)/'

|

||||

Priority: 90

|

||||

- Regex: '^["<](DB)/'

|

||||

Priority: 100

|

||||

- Regex: '^["<](Poco)/'

|

||||

Priority: 50

|

||||

- Regex: '^"'

|

||||

Priority: 110

|

||||

- Regex: '/'

|

||||

Priority: 30

|

||||

- Regex: '.*'

|

||||

Priority: 40

|

||||

- Regex: '^<[a-z_]+>'

|

||||

Priority: 1

|

||||

- Regex: '^<[a-z_]+.h>'

|

||||

Priority: 2

|

||||

- Regex: '^["<](common|ext|mysqlxx|daemon|zkutil)/'

|

||||

Priority: 90

|

||||

- Regex: '^["<](DB)/'

|

||||

Priority: 100

|

||||

- Regex: '^["<](Poco)/'

|

||||

Priority: 50

|

||||

- Regex: '^"'

|

||||

Priority: 110

|

||||

- Regex: '/'

|

||||

Priority: 30

|

||||

- Regex: '.*'

|

||||

Priority: 40

|

||||

ReflowComments: false

|

||||

AlignEscapedNewlinesLeft: true

|

||||

|

||||

# Not changed:

|

||||

AccessModifierOffset: -4

|

||||

AlignConsecutiveAssignments: false

|

||||

AlignOperands: false

|

||||

AlignOperands: false

|

||||

AlignTrailingComments: false

|

||||

AllowAllParametersOfDeclarationOnNextLine: true

|

||||

AllowShortBlocksOnASingleLine: false

|

||||

@ -70,16 +66,15 @@ BinPackArguments: false

|

||||

BinPackParameters: false

|

||||

BreakBeforeBinaryOperators: All

|

||||

BreakBeforeTernaryOperators: true

|

||||

CommentPragmas: '^ IWYU pragma:'

|

||||

CommentPragmas: '^ IWYU pragma:'

|

||||

ConstructorInitializerIndentWidth: 4

|

||||

ContinuationIndentWidth: 4

|

||||

DerivePointerAlignment: false

|

||||

DisableFormat: false

|

||||

ForEachMacros: [ foreach, Q_FOREACH, BOOST_FOREACH ]

|

||||

IndentWidth: 4

|

||||

DisableFormat: false

|

||||

IndentWidth: 4

|

||||

IndentWrappedFunctionNames: false

|

||||

MacroBlockBegin: ''

|

||||

MacroBlockEnd: ''

|

||||

MacroBlockEnd: ''

|

||||

NamespaceIndentation: Inner

|

||||

ObjCBlockIndentWidth: 4

|

||||

ObjCSpaceAfterProperty: true

|

||||

@ -99,5 +94,3 @@ SpacesInContainerLiterals: true

|

||||

SpacesInCStyleCastParentheses: false

|

||||

SpacesInParentheses: false

|

||||

SpacesInSquareBrackets: false

|

||||

...

|

||||

|

||||

|

||||

3

.gitignore

vendored

@ -9,7 +9,7 @@

|

||||

# auto generated files

|

||||

*.logrt

|

||||

|

||||

build

|

||||

/build

|

||||

/docs/en_single_page/

|

||||

/docs/ru_single_page/

|

||||

/docs/venv/

|

||||

@ -43,6 +43,7 @@ cmake-build-*

|

||||

# Python cache

|

||||

*.pyc

|

||||

__pycache__

|

||||

*.pytest_cache

|

||||

|

||||

# ignore generated files

|

||||

*-metrika-yandex

|

||||

|

||||

3

.gitmodules

vendored

@ -34,3 +34,6 @@

|

||||

[submodule "contrib/boost"]

|

||||

path = contrib/boost

|

||||

url = https://github.com/ClickHouse-Extras/boost.git

|

||||

[submodule "contrib/llvm"]

|

||||

path = contrib/llvm

|

||||

url = https://github.com/ClickHouse-Extras/llvm

|

||||

|

||||

11

.travis.yml

@ -11,9 +11,10 @@ matrix:

|

||||

#

|

||||

# addons:

|

||||

# apt:

|

||||

# update: true

|

||||

# sources:

|

||||

# - ubuntu-toolchain-r-test

|

||||

# packages: [ g++-7, libicu-dev, libreadline-dev, libmysqlclient-dev, unixodbc-dev, libltdl-dev, libssl-dev, libboost-dev, zlib1g-dev, libdouble-conversion-dev, libzookeeper-mt-dev, libsparsehash-dev, librdkafka-dev, libcapnp-dev, libsparsehash-dev, libgoogle-perftools-dev, bash, expect, python, python-lxml, python-termcolor, curl, perl, sudo, openssl ]

|

||||

# packages: [ g++-7, libicu-dev, libreadline-dev, libmysqlclient-dev, unixodbc-dev, libltdl-dev, libssl-dev, libboost-dev, zlib1g-dev, libdouble-conversion-dev, libsparsehash-dev, librdkafka-dev, libcapnp-dev, libsparsehash-dev, libgoogle-perftools-dev, bash, expect, python, python-lxml, python-termcolor, curl, perl, sudo, openssl ]

|

||||

#

|

||||

# env:

|

||||

# - MATRIX_EVAL="export CC=gcc-7 && export CXX=g++-7"

|

||||

@ -33,10 +34,11 @@ matrix:

|

||||

|

||||

addons:

|

||||

apt:

|

||||

update: true

|

||||

sources:

|

||||

- ubuntu-toolchain-r-test

|

||||

- llvm-toolchain-trusty-5.0

|

||||

packages: [ g++-7, clang-5.0, lld-5.0, libicu-dev, libreadline-dev, libmysqlclient-dev, unixodbc-dev, libltdl-dev, libssl-dev, libboost-dev, zlib1g-dev, libdouble-conversion-dev, libzookeeper-mt-dev, libsparsehash-dev, librdkafka-dev, libcapnp-dev, libsparsehash-dev, libgoogle-perftools-dev, bash, expect, python, python-lxml, python-termcolor, curl, perl, sudo, openssl]

|

||||

packages: [ g++-7, clang-5.0, lld-5.0, libicu-dev, libreadline-dev, libmysqlclient-dev, unixodbc-dev, libltdl-dev, libssl-dev, libboost-dev, zlib1g-dev, libdouble-conversion-dev, libsparsehash-dev, librdkafka-dev, libcapnp-dev, libsparsehash-dev, libgoogle-perftools-dev, bash, expect, python, python-lxml, python-termcolor, curl, perl, sudo, openssl]

|

||||

|

||||

env:

|

||||

- MATRIX_EVAL="export CC=clang-5.0 && export CXX=clang++-5.0"

|

||||

@ -77,6 +79,7 @@ matrix:

|

||||

|

||||

addons:

|

||||

apt:

|

||||

update: true

|

||||

packages: [ pbuilder, fakeroot, debhelper ]

|

||||

|

||||

script:

|

||||

@ -94,6 +97,7 @@ matrix:

|

||||

#

|

||||

# addons:

|

||||

# apt:

|

||||

# update: true

|

||||

# packages: [ pbuilder, fakeroot, debhelper ]

|

||||

#

|

||||

# env:

|

||||

@ -115,6 +119,7 @@ matrix:

|

||||

#

|

||||

# addons:

|

||||

# apt:

|

||||

# update: true

|

||||

# packages: [ pbuilder, fakeroot, debhelper ]

|

||||

#

|

||||

# env:

|

||||

@ -137,7 +142,7 @@ matrix:

|

||||

# - brew link --overwrite gcc || true

|

||||

#

|

||||

# env:

|

||||

# - MATRIX_EVAL="export CC=gcc-7 && export CXX=g++-7"

|

||||

# - MATRIX_EVAL="export CC=gcc-8 && export CXX=g++-8"

|

||||

#

|

||||

# script:

|

||||

# - env CMAKE_FLAGS="-DUSE_INTERNAL_BOOST_LIBRARY=1" utils/travis/normal.sh

|

||||

|

||||

78

CHANGELOG.md

@ -1,3 +1,81 @@

|

||||

# ClickHouse release 1.1.54381, 2018-05-14

|

||||

|

||||

## Bug fixes:

|

||||

* Fixed a node leak in ZooKeeper that occurred when ClickHouse lost its connection to the ZooKeeper server.

|

||||

|

||||

# ClickHouse release 1.1.54380, 2018-04-21

|

||||

|

||||

## New features:

|

||||

* Added table function `file(path, format, structure)`. An example reading bytes from `/dev/urandom`: `ln -s /dev/urandom /var/lib/clickhouse/user_files/random` `clickhouse-client -q "SELECT * FROM file('random', 'RowBinary', 'd UInt8') LIMIT 10"`.

|

||||

|

||||

## Improvements:

|

||||

* Subqueries can be wrapped in parentheses `()` to improve query readability. For example, `(SELECT 1) UNION ALL (SELECT 1)`.

|

||||

* Simple `SELECT` queries from the `system.processes` table are not counted toward the `max_concurrent_queries` limit.

|

||||

|

||||

## Bug fixes:

|

||||

* Fixed incorrect behavior of the `IN` operator when selecting from a `MATERIALIZED VIEW`.

|

||||

* Fixed incorrect filtering by partition index in expressions like `WHERE partition_key_column IN (...)`

|

||||

* Fixed the inability to execute an `OPTIMIZE` query on a non-leader replica if the table was `RENAME`d.

|

||||

* Fixed an authorization error when executing `OPTIMIZE` or `ALTER` queries on a non-leader replica.

|

||||

* Fixed freezing of `KILL QUERY` queries.

|

||||

* Fixed an error in the ZooKeeper client library that led to lost watches, a frozen distributed DDL queue, and a slower replication queue when a non-empty `chroot` prefix was used in the ZooKeeper configuration.

|

||||

|

||||

## Backward incompatible changes:

|

||||

* Removed support for expressions like `(a, b) IN (SELECT (a, b))`; use the equivalent `(a, b) IN (SELECT a, b)` instead. In previous releases, these expressions led to nondeterministic data filtering or caused errors.

|

||||

|

||||

# ClickHouse release 1.1.54378, 2018-04-16

|

||||

## New features:

|

||||

|

||||

* Logging level can be changed without restarting the server.

|

||||

* Added the `SHOW CREATE DATABASE` query.

|

||||

* The `query_id` can be passed to `clickhouse-client` (elBroom).

|

||||

* New setting: `max_network_bandwidth_for_all_users`.

|

||||

* Added support for `ALTER TABLE ... PARTITION ... ` for `MATERIALIZED VIEW`.

|

||||

* Added information about the size of data parts in uncompressed form in the system table.

|

||||

* Server-to-server encryption support for distributed tables (`<secure>1</secure>` in the replica config in `<remote_servers>`).

|

||||

* Configuration of the table level for the `ReplicatedMergeTree` family in order to minimize the amount of data stored in zookeeper: `use_minimalistic_checksums_in_zookeeper = 1`

|

||||

* Configuration of the `clickhouse-client` prompt. By default, server names are now output to the prompt. The server's display name can be changed; it's also sent in the `X-ClickHouse-Display-Name` HTTP header (Kirill Shvakov).

|

||||

* Multiple comma-separated `topics` can be specified for the `Kafka` engine (Tobias Adamson).

|

||||

* When a query is stopped by `KILL QUERY` or `replace_running_query`, the client receives the `Query was cancelled` exception instead of an incomplete response.

|

||||

|

||||

## Improvements:

|

||||

|

||||

* `ALTER TABLE ... DROP/DETACH PARTITION` queries are run at the front of the replication queue.

|

||||

* `SELECT ... FINAL` and `OPTIMIZE ... FINAL` can be used even when the table has a single data part.

|

||||

* A `query_log` table is recreated on the fly if it was deleted manually (Kirill Shvakov).

|

||||

* The `lengthUTF8` function runs faster (zhang2014).

|

||||

* Improved performance of synchronous inserts in `Distributed` tables (`insert_distributed_sync = 1`) when there is a very large number of shards.

|

||||

* The server accepts the `send_timeout` and `receive_timeout` settings from the client and applies them when connecting to the client (they are applied in reverse order: the server socket's `send_timeout` is set to the `receive_timeout` value received from the client, and vice versa).

|

||||

* More robust crash recovery for asynchronous insertion into `Distributed` tables.

|

||||

* The return type of the `countEqual` function changed from `UInt32` to `UInt64` (谢磊).

|

||||

|

||||

## Bug fixes:

|

||||

|

||||

* Fixed an error with `IN` when the left side of the expression is `Nullable`.

|

||||

* Correct results are now returned when using tuples with `IN` when some of the tuple components are in the table index.

|

||||

* The `max_execution_time` limit now works correctly with distributed queries.

|

||||

* Fixed errors when calculating the size of composite columns in the `system.columns` table.

|

||||

* Fixed an error when creating a temporary table `CREATE TEMPORARY TABLE IF NOT EXISTS`.

|

||||

* Fixed errors in `StorageKafka` (#2075)

|

||||

* Fixed server crashes from invalid arguments of certain aggregate functions.

|

||||

* Fixed the error that prevented the `DETACH DATABASE` query from stopping background tasks for `ReplicatedMergeTree` tables.

|

||||

* `Too many parts` state is less likely to happen when inserting into aggregated materialized views (#2084).

|

||||

* Corrected recursive handling of substitutions in the config if a substitution must be followed by another substitution on the same level.

|

||||

* Corrected the syntax in the metadata file when creating a `VIEW` that uses a query with `UNION ALL`.

|

||||

* `SummingMergeTree` now works correctly for summation of nested data structures with a composite key.

|

||||

* Fixed the possibility of a race condition when choosing the leader for `ReplicatedMergeTree` tables.

|

||||

|

||||

## Build changes:

|

||||

|

||||

* The build supports `ninja` instead of `make` and uses it by default for building releases.

|

||||

* Renamed packages: `clickhouse-server-base` is now `clickhouse-common-static`; `clickhouse-server-common` is now `clickhouse-server`; `clickhouse-common-dbg` is now `clickhouse-common-static-dbg`. To install, use `clickhouse-server clickhouse-client`. Packages with the old names will still be uploaded to the repositories for backward compatibility.

|

||||

|

||||

## Backward-incompatible changes:

|

||||

|

||||

* Removed the special interpretation of an IN expression if an array is specified on the left side. Previously, the expression `arr IN (set)` was interpreted as "at least one `arr` element belongs to the `set`". To get the same behavior in the new version, write `arrayExists(x -> x IN (set), arr)`.

|

||||

* Disabled the incorrect use of the socket option `SO_REUSEPORT`, which was incorrectly enabled by default in the Poco library. Note that on Linux there is no longer any reason to simultaneously specify the addresses `::` and `0.0.0.0` for listen – use just `::`, which allows listening to the connection both over IPv4 and IPv6 (with the default kernel config settings). You can also revert to the behavior from previous versions by specifying `<listen_reuse_port>1</listen_reuse_port>` in the config.

|

||||

|

||||

|

||||

# ClickHouse release 1.1.54370, 2018-03-16

|

||||

|

||||

## New features:

|

||||

|

||||

@ -1,3 +1,83 @@

|

||||

# ClickHouse release 1.1.54381, 2018-05-14

|

||||

|

||||

## Исправление ошибок:

|

||||

* Исправлена ошибка, приводящая к "утеканию" метаданных в ZooKeeper при потере соединения с сервером ZooKeeper.

|

||||

|

||||

# ClickHouse release 1.1.54380, 2018-04-21

|

||||

|

||||

## Новые возможности:

|

||||

* Добавлена табличная функция `file(path, format, structure)`. Пример, читающий байты из `/dev/urandom`: `ln -s /dev/urandom /var/lib/clickhouse/user_files/random` `clickhouse-client -q "SELECT * FROM file('random', 'RowBinary', 'd UInt8') LIMIT 10"`.

|

||||

|

||||

## Улучшения:

|

||||

* Добавлена возможность оборачивать подзапросы скобками `()` для повышения читаемости запросов. Например: `(SELECT 1) UNION ALL (SELECT 1)`.

|

||||

* Простые запросы `SELECT` из таблицы `system.processes` не учитываются в ограничении `max_concurrent_queries`.

|

||||

|

||||

## Исправление ошибок:

|

||||

* Исправлена неправильная работа оператора `IN` в `MATERIALIZED VIEW`.

|

||||

* Исправлена неправильная работа индекса по ключу партиционирования в выражениях типа `partition_key_column IN (...)`.

|

||||

* Исправлена невозможность выполнить `OPTIMIZE` запрос на лидирующей реплике после выполнения `RENAME` таблицы.

|

||||

* Исправлены ошибки авторизации возникающие при выполнении запросов `OPTIMIZE` и `ALTER` на нелидирующей реплике.

|

||||

* Исправлены зависания запросов `KILL QUERY`.

|

||||

* Исправлена ошибка в клиентской библиотеке ZooKeeper, которая при использовании непустого префикса `chroot` в конфигурации приводила к потере watch'ей, остановке очереди distributed DDL запросов и замедлению репликации.

|

||||

|

||||

## Обратно несовместимые изменения:

|

||||

* Убрана поддержка выражений типа `(a, b) IN (SELECT (a, b))` (можно использовать эквивалентные выражение `(a, b) IN (SELECT a, b)`). Раньше такие запросы могли приводить к недетерминированной фильтрации в `WHERE`.

|

||||

|

||||

|

||||

# ClickHouse release 1.1.54378, 2018-04-16

|

||||

|

||||

## Новые возможности:

|

||||

|

||||

* Возможность изменения уровня логгирования без перезагрузки сервера.

|

||||

* Добавлен запрос `SHOW CREATE DATABASE`.

|

||||

* Возможность передать `query_id` в `clickhouse-client` (elBroom).

|

||||

* Добавлена настройка `max_network_bandwidth_for_all_users`.

|

||||

* Добавлена поддержка `ALTER TABLE ... PARTITION ... ` для `MATERIALIZED VIEW`.

|

||||

* Добавлена информация о размере кусков данных в несжатом виде в системные таблицы.

|

||||

* Поддержка межсерверного шифрования для distributed таблиц (`<secure>1</secure>` в конфигурации реплики в `<remote_servers>`).

|

||||

* Добавлена настройка уровня таблицы семейства `ReplicatedMergeTree` для уменьшения объема данных, хранимых в zookeeper: `use_minimalistic_checksums_in_zookeeper = 1`

|

||||

* Возможность настройки приглашения `clickhouse-client`. По-умолчанию добавлен вывод имени сервера в приглашение. Возможность изменить отображаемое имя сервера. Отправка его в HTTP заголовке `X-ClickHouse-Display-Name` (Kirill Shvakov).

|

||||

* Возможность указания нескольких `topics` через запятую для движка `Kafka` (Tobias Adamson)

|

||||

* При остановке запроса по причине `KILL QUERY` или `replace_running_query`, клиент получает исключение `Query was cancelled` вместо неполного результата.

|

||||

|

||||

## Улучшения:

|

||||

|

||||

* Запросы вида `ALTER TABLE ... DROP/DETACH PARTITION` выполняются впереди очереди репликации.

|

||||

* Возможность использовать `SELECT ... FINAL` и `OPTIMIZE ... FINAL` даже в случае, если данные в таблице представлены одним куском.

|

||||

* Пересоздание таблицы `query_log` налету в случае если было произведено её удаление вручную (Kirill Shvakov).

|

||||

* Ускорение функции `lengthUTF8` (zhang2014).

|

||||

* Улучшена производительность синхронной вставки в `Distributed` таблицы (`insert_distributed_sync = 1`) в случае очень большого количества шардов.

|

||||

* Сервер принимает настройки `send_timeout` и `receive_timeout` от клиента и применяет их на своей стороне для соединения с клиентом (в переставленном порядке: `send_timeout` у сокета на стороне сервера выставляется в значение `receive_timeout` принятое от клиента, и наоборот).

|

||||

* Более надёжное восстановление после сбоев при асинхронной вставке в `Distributed` таблицы.

|

||||

* Возвращаемый тип функции `countEqual` изменён с `UInt32` на `UInt64` (谢磊)

|

||||

|

||||

## Исправление ошибок:

|

||||

|

||||

* Исправлена ошибка c `IN` где левая часть выражения `Nullable`.

|

||||

* Исправлен неправильный результат при использовании кортежей с `IN` в случае, если часть компоненнтов кортежа есть в индексе таблицы.

|

||||

* Исправлена работа ограничения `max_execution_time` с распределенными запросами.

|

||||

* Исправлены ошибки при вычислении размеров составных столбцов в таблице `system.columns`.

|

||||

* Исправлена ошибка при создании временной таблицы `CREATE TEMPORARY TABLE IF NOT EXISTS`

|

||||

* Исправлены ошибки в `StorageKafka` (#2075)

|

||||

* Исправлены падения сервера от некорректных аргументов некоторых аггрегатных функций.

|

||||

* Исправлена ошибка, из-за которой запрос `DETACH DATABASE` мог не приводить к остановке фоновых задач таблицы типа `ReplicatedMergeTree`.

|

||||

* Исправлена проблема с появлением `Too many parts` в агрегирующих материализованных представлениях (#2084).

|

||||

* Исправлена рекурсивная обработка подстановок в конфиге, если после одной подстановки, требуется другая подстановка на том же уровне.

|

||||

* Исправлена ошибка с неправильным синтаксисом в файле с метаданными при создании `VIEW`, использующих запрос с `UNION ALL`.

|

||||

* Исправлена работа `SummingMergeTree` в случае суммирования вложенных структур данных с составным ключом.

|

||||

* Исправлена возможность возникновения race condition при выборе лидера таблиц `ReplicatedMergeTree`.

|

||||

|

||||

## Изменения сборки:

|

||||

|

||||

* Поддержка `ninja` вместо `make` при сборке. `ninja` используется по-умолчанию при сборке релизов.

|

||||

* Переименованы пакеты `clickhouse-server-base` в `clickhouse-common-static`; `clickhouse-server-common` в `clickhouse-server`; `clickhouse-common-dbg` в `clickhouse-common-static-dbg`. Для установки используйте `clickhouse-server clickhouse-client`. Для совместимости, пакеты со старыми именами продолжают загружаться в репозиторий.

|

||||

|

||||

## Обратно несовместимые изменения:

|

||||

|

||||

* Удалена специальная интерпретация выражения IN, если слева указан массив. Ранее выражение вида `arr IN (set)` воспринималось как "хотя бы один элемент `arr` принадлежит множеству `set`". Для получения такого же поведения в новой версии, напишите `arrayExists(x -> x IN (set), arr)`.

|

||||

* Отключено ошибочное использование опции сокета `SO_REUSEPORT` (которая по ошибке включена по-умолчанию в библиотеке Poco). Стоит обратить внимание, что на Linux системах теперь не имеет смысла указывать одновременно адреса `::` и `0.0.0.0` для listen - следует использовать лишь адрес `::`, который (с настройками ядра по-умолчанию) позволяет слушать соединения как по IPv4 так и по IPv6. Также вы можете вернуть поведение старых версий, указав в конфиге `<listen_reuse_port>1</listen_reuse_port>`.

|

||||

|

||||

|

||||

# ClickHouse release 1.1.54370, 2018-03-16

|

||||

|

||||

## Новые возможности:

|

||||

|

||||

@ -17,7 +17,6 @@ else ()

|

||||

message (WARNING "You are using an unsupported compiler! Compilation has only been tested with Clang 5+ and GCC 7+.")

|

||||

endif ()

|

||||

|

||||

|

||||

# Write compile_commands.json

|

||||

set(CMAKE_EXPORT_COMPILE_COMMANDS 1)

|

||||

|

||||

@ -247,6 +246,7 @@ include (cmake/find_boost.cmake)

|

||||

include (cmake/find_zlib.cmake)

|

||||

include (cmake/find_zstd.cmake)

|

||||

include (cmake/find_ltdl.cmake) # for odbc

|

||||

include (cmake/find_termcap.cmake)

|

||||

if (EXISTS ${CMAKE_CURRENT_SOURCE_DIR}/contrib/poco/cmake/FindODBC.cmake)

|

||||

include (${CMAKE_CURRENT_SOURCE_DIR}/contrib/poco/cmake/FindODBC.cmake) # for poco

|

||||

else ()

|

||||

|

||||

@ -1,8 +1,6 @@

|

||||

# ClickHouse

|

||||

ClickHouse is an open-source column-oriented database management system that allows generating analytical data reports in real time.

|

||||

|

||||

🎤🥂 **ClickHouse Meetup in [Sunnyvale](https://www.meetup.com/San-Francisco-Bay-Area-ClickHouse-Meetup/events/248898966/) & [San Francisco](https://www.meetup.com/San-Francisco-Bay-Area-ClickHouse-Meetup/events/249162518/), April 23-27** 🍰🔥🐻

|

||||

|

||||

Learn more about ClickHouse at [https://clickhouse.yandex/](https://clickhouse.yandex/)

|

||||

|

||||

[](https://travis-ci.org/yandex/ClickHouse)

|

||||

|

||||

142

ci/README.md

Normal file

@ -0,0 +1,142 @@

|

||||

## Build and test ClickHouse on various platforms

|

||||

|

||||

Quick and dirty scripts.

|

||||

|

||||

Usage example:

|

||||

```

|

||||

./run-with-docker.sh ubuntu:bionic jobs/quick-build/run.sh

|

||||

```

|

||||

|

||||

Another example, check build on ARM 64:

|

||||

```

|

||||

./prepare-docker-image-ubuntu.sh

|

||||

./run-with-docker.sh multiarch/ubuntu-core:arm64-bionic jobs/quick-build/run.sh

|

||||

```

|

||||

|

||||

Another example, check build on FreeBSD:

|

||||

```

|

||||

./prepare-vagrant-image-freebsd.sh

|

||||

./run-with-vagrant.sh freebsd jobs/quick-build/run.sh

|

||||

```

|

||||

|

||||

Look at `default-config` and `jobs/quick-build/run.sh`

|

||||

|

||||

Various possible options. We are not going to automate testing all of them.

|

||||

|

||||

#### CPU architectures:

|

||||

- x86_64;

|

||||

- AArch64.

|

||||

|

||||

x86_64 is the main CPU architecture. We also have minimal support for AArch64.

|

||||

|

||||

#### Operating systems:

|

||||

- Linux;

|

||||

- FreeBSD.

|

||||

|

||||

We also target Mac OS X, but it's more difficult to test.
Linux is the main OS. FreeBSD is also supported as a production OS.
Mac OS is intended only for development and has minimal support: the client should work, and the server should at least start.

|

||||

|

||||

#### Linux distributions:

|

||||

For build:

|

||||

- Ubuntu Bionic;

|

||||

- Ubuntu Trusty.

|

||||

|

||||

For run:

|

||||

- Ubuntu Hardy;

|

||||

- CentOS 5

|

||||

|

||||

We should support running ClickHouse on almost any Linux. That's why we also test on old distributions.

|

||||

|

||||

#### How to obtain sources:

|

||||

- use sources from local working copy;

|

||||

- clone sources from github;

|

||||

- download source tarball.

|

||||

|

||||

#### Compilers:

|

||||

- gcc-7;

|

||||

- gcc-8;

|

||||

- clang-6;

|

||||

- clang-svn.

|

||||

|

||||

#### Compiler installation:

|

||||

- from OS packages;

|

||||

- build from sources.

|

||||

|

||||

#### C++ standard library implementation:

|

||||

- libc++;

|

||||

- libstdc++ with C++11 ABI;

|

||||

- libstdc++ with old ABI.

|

||||

|

||||

When building with clang, libc++ is used. When building with gcc, we choose libstdc++ with C++11 ABI.

|

||||

|

||||

#### Linkers:
- lld;
- gold;

When building with clang on x86_64, lld is used. Otherwise we use gold.
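The linker rule above can be sketched as a small shell helper. This is only an illustration, assuming the linker meant for clang builds is LLVM's `lld`: the function name `choose_linker` and its two arguments are hypothetical and do not come from the CI scripts. `-fuse-ld=` is the usual GCC/Clang driver flag for selecting a linker.

```shell
# Hypothetical helper (not part of the ci/ scripts): pick a linker name
# from the compiler name and the CPU architecture.
# Rule sketched here: clang on x86_64 -> lld, everything else -> gold.
choose_linker() {
    compiler="$1"
    arch="$2"
    case "$compiler" in
        clang*)
            if [ "$arch" = "x86_64" ]; then
                echo "lld"
                return
            fi
            ;;
    esac
    echo "gold"
}

# Build the driver flag for the current machine; CC defaults to gcc here.
LINKER_FLAG="-fuse-ld=$(choose_linker "${CC:-gcc}" "$(uname -m)")"
echo "$LINKER_FLAG"
```

The helper only matches compiler names that start with `clang`, so a full path such as `/usr/bin/clang` would need `basename` applied first.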

|

||||

|

||||

#### Build types:

|

||||

- RelWithDebInfo;

|

||||

- Debug;

|

||||

- ASan;

|

||||

- TSan.

|

||||

|

||||

#### Build types, extra:

|

||||

- -g0 for quick build;

|

||||

- enable test coverage;

|

||||

- debug tcmalloc.

|

||||

|

||||

#### What to build:

|

||||

- only `clickhouse` target;

|

||||

- all targets;

|

||||

- debian packages;

|

||||

|

||||

We also intend to build RPM and simple tgz packages.

|

||||

|

||||

#### Where to get third-party libraries:

|

||||

- from contrib directory (submodules);

|

||||

- from OS packages.

|

||||

|

||||

The only production option is to use libraries from contrib directory.

|

||||

Using libraries from OS packages is discouraged, but we also support this option.

|

||||

|

||||

#### Linkage types:

|

||||

- static;

|

||||

- shared;

|

||||

|

||||

Static linking is the only option for production usage.
We also support shared linking, but it is intended only for developers.

|

||||

|

||||

#### Make tools:

|

||||

- make;

|

||||

- ninja.
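As a rough sketch of how a job could switch between the two make tools, the helper below maps a tool name to a CMake generator name. The function `cmake_generator` is hypothetical and not part of the CI scripts; `Ninja` and `Unix Makefiles` are standard CMake generator names.

```shell
# Hypothetical helper: translate a make tool name into the name of the
# corresponding CMake generator (for use with `cmake -G`).
cmake_generator() {
    case "$1" in
        ninja) echo "Ninja" ;;
        make)  echo "Unix Makefiles" ;;
        *)
            echo "unknown make tool: $1" >&2
            return 1
            ;;
    esac
}

# Usage (assuming sources live in ../sources, as in build-normal.sh):
#   cmake -G "$(cmake_generator ninja)" ../sources
```

Because `build-normal.sh` drives the build through `cmake --build .`, the chosen generator would only need to be set once at configure time.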

|

||||

|

||||

#### Installation options:

|

||||

- run built `clickhouse` binary directly;

|

||||

- install from packages.

|

||||

|

||||

#### How to obtain packages:

|

||||

- build them;

|

||||

- download from repository.

|

||||

|

||||

#### Sanity checks:

|

||||

- check that the `clickhouse` binary has no dependencies on unexpected shared libraries;
- check that the source code has no style violations.
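The first check could look roughly like the sketch below, which filters `ldd` output against an allowlist. Both the function name `unexpected_libs` and the allowlist itself are assumptions made for illustration; the real set of acceptable libraries is not given here.

```shell
# Hypothetical filter: read `ldd` output on stdin and print the names of
# libraries that are NOT on the allowlist. The allowlist below is an
# assumption (basic glibc libraries, the vDSO, and the dynamic loader).
unexpected_libs() {
    allowed='^(linux-vdso\.so|libc\.so|libm\.so|libdl\.so|libpthread\.so|librt\.so|/lib.*/ld-linux)'
    # The first field of each ldd line is the library name (or loader path).
    # `|| true` keeps the pipeline from failing when nothing is left to print.
    awk '{ print $1 }' | grep -v -E "$allowed" || true
}

# Usage (path to the built binary is an assumption):
#   ldd ./clickhouse | unexpected_libs
# Empty output means no unexpected dependencies were found.
```

A CI job could fail the build whenever this prints anything, for example by testing the captured output with `[ -z "$out" ]`.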

|

||||

|

||||

#### Tests:

|

||||

- Functional tests;

|

||||

- Integration tests;

|

||||

- Unit tests;

|

||||

- Simple sh/reference tests;

|

||||

- Performance tests (note that they require predictable computing power);

|

||||

- Tests for external dictionaries (should be moved to integration tests);

|

||||

- Jepsen-like tests for quorum inserts (not yet available as open source).

|

||||

|

||||

#### Tests extra:

|

||||

- Run functional tests with Valgrind.

|

||||

|

||||

#### Static analyzers:

|

||||

- CppCheck;

|

||||

- clang-tidy;

|

||||

- Coverity.

|

||||

38

ci/build-clang-from-sources.sh

Executable file

@ -0,0 +1,38 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

# TODO Non debian systems

|

||||

./install-os-packages.sh svn

|

||||

./install-os-packages.sh cmake

|

||||

|

||||

mkdir "${WORKSPACE}/llvm"

|

||||

|

||||

svn co "http://llvm.org/svn/llvm-project/llvm/${CLANG_SOURCES_BRANCH}" "${WORKSPACE}/llvm/llvm"

|

||||

svn co "http://llvm.org/svn/llvm-project/cfe/${CLANG_SOURCES_BRANCH}" "${WORKSPACE}/llvm/llvm/tools/clang"

|

||||

svn co "http://llvm.org/svn/llvm-project/lld/${CLANG_SOURCES_BRANCH}" "${WORKSPACE}/llvm/llvm/tools/lld"

|

||||

svn co "http://llvm.org/svn/llvm-project/polly/${CLANG_SOURCES_BRANCH}" "${WORKSPACE}/llvm/llvm/tools/polly"

|

||||

svn co "http://llvm.org/svn/llvm-project/clang-tools-extra/${CLANG_SOURCES_BRANCH}" "${WORKSPACE}/llvm/llvm/tools/clang/tools/extra"

|

||||

svn co "http://llvm.org/svn/llvm-project/compiler-rt/${CLANG_SOURCES_BRANCH}" "${WORKSPACE}/llvm/llvm/projects/compiler-rt"

|

||||

svn co "http://llvm.org/svn/llvm-project/libcxx/${CLANG_SOURCES_BRANCH}" "${WORKSPACE}/llvm/llvm/projects/libcxx"

|

||||

svn co "http://llvm.org/svn/llvm-project/libcxxabi/${CLANG_SOURCES_BRANCH}" "${WORKSPACE}/llvm/llvm/projects/libcxxabi"

|

||||

|

||||

mkdir "${WORKSPACE}/llvm/build"

|

||||

cd "${WORKSPACE}/llvm/build"

|

||||

|

||||

# NOTE You must build LLVM with the same ABI as ClickHouse.

|

||||

# For example, if you compile ClickHouse with libc++, you must add

|

||||

# -DLLVM_ENABLE_LIBCXX=1

|

||||

# to the line below.

|

||||

|

||||

cmake -DCMAKE_BUILD_TYPE:STRING=Release ../llvm

|

||||

|

||||

make -j $THREADS

|

||||

$SUDO make install

|

||||

hash clang

|

||||

|

||||

cd ../../..

|

||||

|

||||

export CC=clang

|

||||

export CXX=clang++

|

||||

8

ci/build-debian-packages.sh

Executable file

@ -0,0 +1,8 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

[[ -d "${WORKSPACE}/sources" ]] || die "Run get-sources.sh first"

|

||||

|

||||

./sources/release

|

||||

47

ci/build-gcc-from-sources.sh

Executable file

@ -0,0 +1,47 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

./install-os-packages.sh curl

|

||||

|

||||

if [[ "${GCC_SOURCES_VERSION}" == "latest" ]]; then

|

||||

GCC_SOURCES_VERSION=$(curl -sSL https://ftpmirror.gnu.org/gcc/ | grep -oE 'gcc-[0-9]+(\.[0-9]+)+' | sort -Vr | head -n1)

|

||||

fi

|

||||

|

||||

GCC_VERSION_SHORT=$(echo "$GCC_SOURCES_VERSION" | grep -oE '[0-9]+' | head -n1)  # major version; '[0-9]+' keeps multi-digit majors such as 10 intact

|

||||

|

||||

echo "Will download ${GCC_SOURCES_VERSION} (short version: $GCC_VERSION_SHORT)."

|

||||

|

||||

THREADS=$(grep -c ^processor /proc/cpuinfo)

|

||||

|

||||

mkdir "${WORKSPACE}/gcc"

|

||||

pushd "${WORKSPACE}/gcc"

|

||||

|

||||

wget https://ftpmirror.gnu.org/gcc/${GCC_SOURCES_VERSION}/${GCC_SOURCES_VERSION}.tar.xz

|

||||

tar xf ${GCC_SOURCES_VERSION}.tar.xz

|

||||

pushd ${GCC_SOURCES_VERSION}

|

||||

./contrib/download_prerequisites

|

||||

popd

|

||||

mkdir gcc-build

|

||||

pushd gcc-build

|

||||

../${GCC_SOURCES_VERSION}/configure --enable-languages=c,c++ --disable-multilib

|

||||

make -j $THREADS

|

||||

$SUDO make install

|

||||

|

||||

popd

|

||||

popd

|

||||

|

||||

$SUDO ln -sf /usr/local/bin/gcc /usr/local/bin/gcc-${GCC_VERSION_SHORT}
$SUDO ln -sf /usr/local/bin/g++ /usr/local/bin/g++-${GCC_VERSION_SHORT}

|

||||

$SUDO ln -sf /usr/local/bin/gcc /usr/local/bin/cc

|

||||

$SUDO ln -sf /usr/local/bin/g++ /usr/local/bin/c++

|

||||

|

||||

echo '/usr/local/lib64' | $SUDO tee /etc/ld.so.conf.d/10_local-lib64.conf

|

||||

$SUDO ldconfig

|

||||

|

||||

hash gcc g++

|

||||

gcc --version

|

||||

|

||||

export CC=gcc

|

||||

export CXX=g++

|

||||
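The script above derives a short compiler version from `GCC_SOURCES_VERSION` with `grep` and `head`. As a minimal sketch, the same major-version extraction can be written with plain parameter expansion. The function name `gcc_major_version` is hypothetical and is not part of this commit.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not in the commit): extract the major version
# from a release name such as "gcc-7.1.0".
gcc_major_version() {
    local version="${1#gcc-}"   # drop the "gcc-" prefix, e.g. "7.1.0"
    echo "${version%%.*}"       # drop everything from the first dot, e.g. "7"
}
```

Calling `gcc_major_version "$GCC_SOURCES_VERSION"` would give a value suitable for suffixes such as `gcc-7`, assuming the variable always has the `gcc-X.Y.Z` shape.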

22

ci/build-normal.sh

Executable file

@ -0,0 +1,22 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

[[ -d "${WORKSPACE}/sources" ]] || die "Run get-sources.sh first"

|

||||

|

||||

mkdir -p "${WORKSPACE}/build"

|

||||

pushd "${WORKSPACE}/build"

|

||||

|

||||

if [[ "${ENABLE_EMBEDDED_COMPILER}" == 1 ]]; then

|

||||

[[ "$USE_LLVM_LIBRARIES_FROM_SYSTEM" == 0 ]] && CMAKE_FLAGS="$CMAKE_FLAGS -DUSE_INTERNAL_LLVM_LIBRARY=1"

|

||||

[[ "$USE_LLVM_LIBRARIES_FROM_SYSTEM" != 0 ]] && CMAKE_FLAGS="$CMAKE_FLAGS -DUSE_INTERNAL_LLVM_LIBRARY=0"

|

||||

fi

|

||||

|

||||

cmake -DCMAKE_BUILD_TYPE=${BUILD_TYPE} -DENABLE_EMBEDDED_COMPILER=${ENABLE_EMBEDDED_COMPILER} $CMAKE_FLAGS ../sources

|

||||

|

||||

[[ "$BUILD_TARGETS" != 'all' ]] && BUILD_TARGETS_STRING="--target $BUILD_TARGETS"

|

||||

|

||||

cmake --build . $BUILD_TARGETS_STRING -- -j $THREADS

|

||||

|

||||

popd

|

||||
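The LLVM flag selection in `build-normal.sh` depends on two settings from the config. A minimal sketch of that decision as a standalone function is shown below; `llvm_cmake_flag` is an illustrative name, not something defined in this commit.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the LLVM-related CMake flag for a configuration.
# $1 = ENABLE_EMBEDDED_COMPILER (0 or 1)
# $2 = USE_LLVM_LIBRARIES_FROM_SYSTEM (0 or 1)
llvm_cmake_flag() {
    local enable_embedded="$1" use_system_llvm="$2"
    if [[ "$enable_embedded" != 1 ]]; then
        return 0    # embedded compiler disabled: no extra flag is printed
    fi
    if [[ "$use_system_llvm" == 0 ]]; then
        echo "-DUSE_INTERNAL_LLVM_LIBRARY=1"
    else
        echo "-DUSE_INTERNAL_LLVM_LIBRARY=0"
    fi
}
```

Keeping the decision in one function makes it easy to check each combination without running CMake.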

7

ci/check-docker.sh

Executable file

@ -0,0 +1,7 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

command -v docker > /dev/null || die "You need to install Docker"

|

||||

docker ps > /dev/null || die "You need to have access to Docker: run '$SUDO usermod -aG docker $USER' and relogin"

|

||||

20

ci/check-syntax.sh

Executable file

@ -0,0 +1,20 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

./install-os-packages.sh jq

|

||||

|

||||

[[ -d "${WORKSPACE}/sources" ]] || die "Run get-sources.sh first"

|

||||

|

||||

mkdir -p "${WORKSPACE}/build"

|

||||

pushd "${WORKSPACE}/build"

|

||||

|

||||

cmake -DCMAKE_BUILD_TYPE=Debug $CMAKE_FLAGS ../sources

|

||||

|

||||

make -j $THREADS re2_st # Generated headers

|

||||

|

||||

jq --raw-output '.[] | .command' compile_commands.json | grep -v -P -- '-c .+/contrib/' | sed -r -e 's/-o\s+\S+/-fsyntax-only/' > syntax-commands

|

||||

xargs --arg-file=syntax-commands --max-procs=$THREADS --replace /bin/sh -c "{}"

|

||||

|

||||

popd

|

||||
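`check-syntax.sh` rewrites every entry of `compile_commands.json` so that the compiler only checks syntax instead of producing an object file. The core rewrite can be sketched on a single command string as follows. The function name `to_syntax_only` is hypothetical, and the sketch uses POSIX character classes rather than the GNU-specific `\s` and `\S` found in the script.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn one compile command into a syntax-only check
# by replacing the first "-o <file>" pair with -fsyntax-only.
to_syntax_only() {
    printf '%s\n' "$1" | sed -E 's/-o[[:space:]]+[^[:space:]]+/-fsyntax-only/'
}
```

For example, `to_syntax_only 'g++ -O2 -o a.o -c a.cpp'` yields a command that compiles nothing and writes no output file.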

10

ci/create-sources-tarball.sh

Executable file

@ -0,0 +1,10 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

if [[ -d "${WORKSPACE}/sources" ]]; then

|

||||

tar -c -z -f "${WORKSPACE}/sources.tar.gz" --directory "${WORKSPACE}/sources" .

|

||||

else

|

||||

die "Run get-sources first"

|

||||

fi

|

||||

65

ci/default-config

Normal file

@ -0,0 +1,65 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

if [[ -z "$INITIALIZED" ]]; then

|

||||

|

||||

INITIALIZED=1

|

||||

|

||||

SCRIPTPATH=$(pwd)

|

||||

WORKSPACE=${SCRIPTPATH}/workspace

|

||||

PROJECT_ROOT=$(cd $SCRIPTPATH/.. && pwd)

|

||||

|

||||

# Almost all scripts take no arguments. Arguments should be in config.

|

||||

|

||||

# get-sources

|

||||

SOURCES_METHOD=local # clone, local, tarball

|

||||

SOURCES_CLONE_URL="https://github.com/yandex/ClickHouse.git"

|

||||

SOURCES_BRANCH="master"

|

||||

SOURCES_COMMIT=HEAD # do checkout of this commit after clone

|

||||

|

||||

# prepare-toolchain

|

||||

COMPILER=gcc # gcc, clang

|

||||

COMPILER_INSTALL_METHOD=packages # packages, sources

|

||||

COMPILER_PACKAGE_VERSION=7 # or 6.0 for clang

|

||||

|

||||

# install-compiler-from-sources

|

||||

CLANG_SOURCES_BRANCH=trunk # or tags/RELEASE_600/final

|

||||

GCC_SOURCES_VERSION=latest # or gcc-7.1.0

|

||||

|

||||

# install-libraries

|

||||

USE_LLVM_LIBRARIES_FROM_SYSTEM=0 # 0 or 1

|

||||

ENABLE_EMBEDDED_COMPILER=1

|

||||

|

||||

# build

|

||||

BUILD_METHOD=normal # normal, debian

|

||||

BUILD_TARGETS=clickhouse # target name, all; only for "normal"

|

||||

BUILD_TYPE=RelWithDebInfo # RelWithDebInfo, Debug, ASan, TSan

|

||||

CMAKE_FLAGS=""

|

||||

|

||||

# prepare-docker-image-ubuntu

|

||||

DOCKER_UBUNTU_VERSION=bionic

|

||||

DOCKER_UBUNTU_ARCH=arm64 # How the architecture is named in a tarball at https://partner-images.canonical.com/core/

|

||||

DOCKER_UBUNTU_QUEMU_ARCH=aarch64 # How the architecture is named in QEMU

|

||||

DOCKER_UBUNTU_TAG_ARCH=arm64 # How the architecture is named in Docker

|

||||

DOCKER_UBUNTU_QEMU_VER=v2.9.1

|

||||

DOCKER_UBUNTU_REPO=multiarch/ubuntu-core

|

||||

|

||||

THREADS=$(grep -c ^processor /proc/cpuinfo || nproc || sysctl -a | grep -F 'hw.ncpu' | grep -oE '[0-9]+')

|

||||

|

||||

# All scripts should return 0 in case of success, 1 in case of permanent error,

|

||||

# 2 in case of temporary error, any other code in case of permanent error.

|

||||

function die {

|

||||

echo ${1:-Error}

|

||||

exit ${2:-1}

|

||||

}

|

||||

|

||||

[[ $EUID -ne 0 ]] && SUDO=sudo

|

||||

|

||||

./install-os-packages.sh prepare

|

||||

|

||||

# Configuration parameters may be overridden with the CONFIG environment variable pointing to a config file.

|

||||

[[ -n "$CONFIG" ]] && source $CONFIG

|

||||

|

||||

mkdir -p $WORKSPACE

|

||||

|

||||

fi

|

||||
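The `die` helper in `default-config` is meant to print a message and exit with a status code that defaults to 1. A minimal sketch of that behaviour using default-value parameter expansion is shown below. The name `die_sketch` is illustrative, and writing the message to stderr is an assumption of this sketch rather than what the commit does.

```shell
#!/usr/bin/env bash
# Sketch of a die-style helper; die_sketch is an illustrative name.
# ${1:-Error} and ${2:-1} fall back to a default when the argument
# is unset or empty.
die_sketch() {
    echo "${1:-Error}" >&2   # assumption: report on stderr
    exit "${2:-1}"           # exit status defaults to 1
}
```

A caller could then write `some_command || die_sketch "temporary failure" 2` to signal a retryable error, in line with the exit-code convention described in the comments.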

21

ci/docker-multiarch/LICENSE

Normal file

@ -0,0 +1,21 @@

|

||||

The MIT License (MIT)

|

||||

|

||||

Copyright (c) 2016 Multiarch

|

||||

|

||||

Permission is hereby granted, free of charge, to any person obtaining a copy

|

||||

of this software and associated documentation files (the "Software"), to deal

|

||||

in the Software without restriction, including without limitation the rights

|

||||

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

||||

copies of the Software, and to permit persons to whom the Software is

|

||||

furnished to do so, subject to the following conditions:

|

||||

|

||||

The above copyright notice and this permission notice shall be included in all

|

||||

copies or substantial portions of the Software.

|

||||

|

||||

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

||||

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

||||

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

||||

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

||||

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

||||

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

||||

SOFTWARE.

|

||||

53

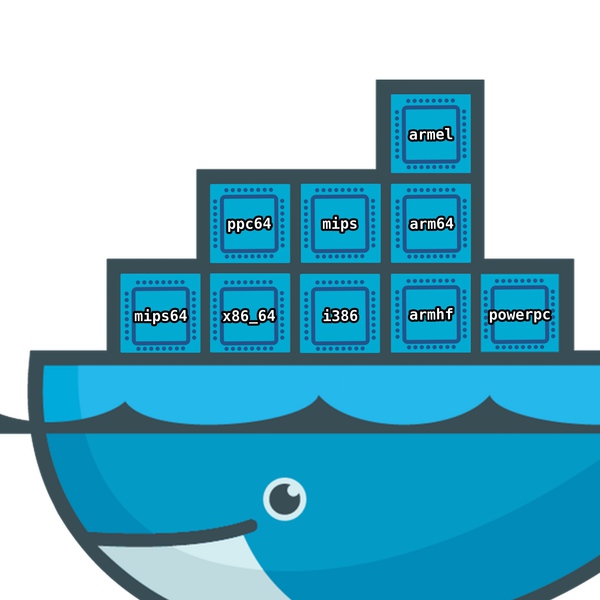

ci/docker-multiarch/README.md

Normal file

@ -0,0 +1,53 @@

|

||||

Source: https://github.com/multiarch/ubuntu-core

|

||||

Commit: 3972a7794b40a965615abd710759d3ed439c9a55

|

||||

|

||||

# :earth_africa: ubuntu-core

|

||||

|

||||

|

||||

|

||||

Multiarch Ubuntu images for Docker.

|

||||

|

||||

Based on https://github.com/tianon/docker-brew-ubuntu-core/

|

||||

|

||||

* `multiarch/ubuntu-core` on [Docker Hub](https://hub.docker.com/r/multiarch/ubuntu-core/)

|

||||

* [Available tags](https://hub.docker.com/r/multiarch/ubuntu-core/tags/)

|

||||

|

||||

## Usage

|

||||

|

||||

You need to configure binfmt-support on your Docker host once.
This works locally or remotely (e.g. using boot2docker or swarm).

|

||||

|

||||

```console

|

||||

# configure binfmt-support on the Docker host (works locally or remotely, e.g. using boot2docker)

|

||||

$ docker run --rm --privileged multiarch/qemu-user-static:register --reset

|

||||

```

|

||||

|

||||

Then you can run an `armhf` image from your `x86_64` Docker host.

|

||||

|

||||

```console

|

||||

$ docker run -it --rm multiarch/ubuntu-core:armhf-wily

|

||||

root@a0818570f614:/# uname -a

|

||||

Linux a0818570f614 4.1.13-boot2docker #1 SMP Fri Nov 20 19:05:50 UTC 2015 armv7l armv7l armv7l GNU/Linux

|

||||

root@a0818570f614:/# exit

|

||||

```

|

||||

|

||||

Or an `x86_64` image from your `x86_64` Docker host, directly, without qemu emulation.

|

||||

|

||||

```console

|

||||

$ docker run -it --rm multiarch/ubuntu-core:amd64-wily

|

||||

root@27fe384370c9:/# uname -a

|

||||

Linux 27fe384370c9 4.1.13-boot2docker #1 SMP Fri Nov 20 19:05:50 UTC 2015 x86_64 x86_64 x86_64 GNU/Linux

|

||||

root@27fe384370c9:/#

|

||||

```

|

||||

|

||||

It also works for `arm64`:

|

||||

|

||||

```console

|

||||

$ docker run -it --rm multiarch/ubuntu-core:arm64-wily

|

||||

root@723fb9f184fa:/# uname -a

|

||||

Linux 723fb9f184fa 4.1.13-boot2docker #1 SMP Fri Nov 20 19:05:50 UTC 2015 aarch64 aarch64 aarch64 GNU/Linux

|

||||

```

|

||||

|

||||

## License

|

||||

|

||||

MIT

|

||||

96

ci/docker-multiarch/update.sh

Executable file

@ -0,0 +1,96 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

# A POSIX variable

|

||||

OPTIND=1 # Reset in case getopts has been used previously in the shell.

|

||||

|

||||

while getopts "a:v:q:u:d:t:" opt; do

|

||||

case "$opt" in

|

||||

a) ARCH=$OPTARG

|

||||

;;

|

||||

v) VERSION=$OPTARG

|

||||

;;

|

||||

q) QEMU_ARCH=$OPTARG

|

||||

;;

|

||||

u) QEMU_VER=$OPTARG

|

||||

;;

|

||||

d) DOCKER_REPO=$OPTARG

|

||||

;;

|

||||

t) TAG_ARCH=$OPTARG

|

||||

;;

|

||||

esac

|

||||

done

|

||||

|

||||

thisTarBase="ubuntu-$VERSION-core-cloudimg-$ARCH"

|

||||

thisTar="$thisTarBase-root.tar.gz"

|

||||

baseUrl="https://partner-images.canonical.com/core/$VERSION"

|

||||

|

||||

|

||||

# install qemu-user-static

|

||||

if [ -n "${QEMU_ARCH}" ]; then

|

||||

if [ ! -f x86_64_qemu-${QEMU_ARCH}-static.tar.gz ]; then

|

||||

wget -N https://github.com/multiarch/qemu-user-static/releases/download/${QEMU_VER}/x86_64_qemu-${QEMU_ARCH}-static.tar.gz

|

||||

fi

|

||||

tar -xvf x86_64_qemu-${QEMU_ARCH}-static.tar.gz -C $ROOTFS/usr/bin/

|

||||

fi

|

||||

|

||||

|

||||

# get the image

|

||||

if \

|

||||

wget -q --spider "$baseUrl/current" \

|

||||

&& wget -q --spider "$baseUrl/current/$thisTar" \

|

||||

; then

|

||||

baseUrl+='/current'

|

||||

fi

|

||||

wget -qN "$baseUrl/"{{MD5,SHA{1,256}}SUMS{,.gpg},"$thisTarBase.manifest",'unpacked/build-info.txt'} || true

|

||||

wget -N "$baseUrl/$thisTar"

|

||||

|

||||

# check checksum

|

||||

if [ -f SHA256SUMS ]; then

|

||||

sha256sum="$(sha256sum "$thisTar" | cut -d' ' -f1)"

|

||||

if ! grep -q "$sha256sum" SHA256SUMS; then

|

||||

echo >&2 "error: '$thisTar' has invalid SHA256"

|

||||

exit 1

|

||||

fi

|

||||

fi

|

||||

|

||||

cat > Dockerfile <<-EOF

|

||||

FROM scratch

|

||||

ADD $thisTar /

|

||||

ENV ARCH=${ARCH} UBUNTU_SUITE=${VERSION} DOCKER_REPO=${DOCKER_REPO}

|

||||

EOF

|

||||

|

||||

# add qemu-user-static binary

|

||||

if [ -n "${QEMU_ARCH}" ]; then

|

||||

cat >> Dockerfile <<EOF

|

||||

|

||||

# Add qemu-user-static binary for amd64 builders

|

||||

ADD x86_64_qemu-${QEMU_ARCH}-static.tar.gz /usr/bin

|

||||

EOF

|

||||

fi

|

||||

|

||||

cat >> Dockerfile <<-EOF

|

||||

# a few minor docker-specific tweaks

|

||||

# see https://github.com/docker/docker/blob/master/contrib/mkimage/debootstrap

|

||||

RUN echo '#!/bin/sh' > /usr/sbin/policy-rc.d \\

|

||||

&& echo 'exit 101' >> /usr/sbin/policy-rc.d \\

|

||||

&& chmod +x /usr/sbin/policy-rc.d \\

|

||||

&& dpkg-divert --local --rename --add /sbin/initctl \\

|

||||

&& cp -a /usr/sbin/policy-rc.d /sbin/initctl \\

|

||||

&& sed -i 's/^exit.*/exit 0/' /sbin/initctl \\

|

||||

&& echo 'force-unsafe-io' > /etc/dpkg/dpkg.cfg.d/docker-apt-speedup \\

|

||||

&& echo 'DPkg::Post-Invoke { "rm -f /var/cache/apt/archives/*.deb /var/cache/apt/archives/partial/*.deb /var/cache/apt/*.bin || true"; };' > /etc/apt/apt.conf.d/docker-clean \\

|

||||

&& echo 'APT::Update::Post-Invoke { "rm -f /var/cache/apt/archives/*.deb /var/cache/apt/archives/partial/*.deb /var/cache/apt/*.bin || true"; };' >> /etc/apt/apt.conf.d/docker-clean \\

|

||||

&& echo 'Dir::Cache::pkgcache ""; Dir::Cache::srcpkgcache "";' >> /etc/apt/apt.conf.d/docker-clean \\

|

||||

&& echo 'Acquire::Languages "none";' > /etc/apt/apt.conf.d/docker-no-languages \\

|

||||

&& echo 'Acquire::GzipIndexes "true"; Acquire::CompressionTypes::Order:: "gz";' > /etc/apt/apt.conf.d/docker-gzip-indexes

|

||||

|

||||

# enable the universe

|

||||

RUN sed -i 's/^#\s*\(deb.*universe\)$/\1/g' /etc/apt/sources.list

|

||||

|

||||

# overwrite this with 'CMD []' in a dependent Dockerfile

|

||||

CMD ["/bin/bash"]

|

||||

EOF

|

||||

|

||||

docker build -t "${DOCKER_REPO}:${TAG_ARCH}-${VERSION}" .

|

||||

docker run --rm "${DOCKER_REPO}:${TAG_ARCH}-${VERSION}" /bin/bash -ec "echo Hello from Ubuntu!"

|

||||
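`update.sh` reads its settings through `getopts`. The same option handling can be sketched as a function so that it can be exercised without downloading anything. `parse_update_opts` is a hypothetical name, and rejecting unknown options with a non-zero status is an assumption of this sketch.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the option letters used by update.sh.
parse_update_opts() {
    local opt OPTIND=1   # local OPTIND so each call starts at the first argument
    while getopts "a:v:q:u:d:t:" opt; do
        case "$opt" in
            a) ARCH=$OPTARG ;;
            v) VERSION=$OPTARG ;;
            q) QEMU_ARCH=$OPTARG ;;
            u) QEMU_VER=$OPTARG ;;
            d) DOCKER_REPO=$OPTARG ;;
            t) TAG_ARCH=$OPTARG ;;
            *) return 1 ;;   # assumption: unknown options are treated as errors
        esac
    done
}
```

Inside a function, `getopts` parses the function's own arguments, so `parse_update_opts "$@"` forwards the script's command line unchanged.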

18

ci/get-sources.sh

Executable file

@ -0,0 +1,18 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

if [[ "$SOURCES_METHOD" == "clone" ]]; then

|

||||

./install-os-packages.sh git

|

||||

SOURCES_DIR="${WORKSPACE}/sources"

|

||||

mkdir -p "${SOURCES_DIR}"

|

||||

git clone --recursive --branch "$SOURCES_BRANCH" "$SOURCES_CLONE_URL" "${SOURCES_DIR}"

|

||||

pushd "${SOURCES_DIR}"

|

||||

git checkout --recurse-submodules "$SOURCES_COMMIT"

|

||||

popd

|

||||

elif [[ "$SOURCES_METHOD" == "local" ]]; then

|

||||

ln -f -s "${PROJECT_ROOT}" "${WORKSPACE}/sources"

|

||||

else

|

||||

die "Unknown SOURCES_METHOD"

|

||||

fi

|

||||

22

ci/install-compiler-from-packages.sh

Executable file

@ -0,0 +1,22 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

# TODO Install from PPA on older Ubuntu

|

||||

|

||||

./install-os-packages.sh ${COMPILER}-${COMPILER_PACKAGE_VERSION}

|

||||

|

||||

if [[ "$COMPILER" == "gcc" ]]; then

|

||||

if command -v gcc-${COMPILER_PACKAGE_VERSION}; then export CC=gcc-${COMPILER_PACKAGE_VERSION} CXX=g++-${COMPILER_PACKAGE_VERSION};

|

||||

elif command -v gcc${COMPILER_PACKAGE_VERSION}; then export CC=gcc${COMPILER_PACKAGE_VERSION} CXX=g++${COMPILER_PACKAGE_VERSION};

|

||||

elif command -v gcc; then export CC=gcc CXX=g++;

|

||||

fi

|

||||

elif [[ "$COMPILER" == "clang" ]]; then

|

||||

if command -v clang-${COMPILER_PACKAGE_VERSION}; then export CC=clang-${COMPILER_PACKAGE_VERSION} CXX=clang++-${COMPILER_PACKAGE_VERSION};

|

||||

elif command -v clang${COMPILER_PACKAGE_VERSION}; then export CC=clang${COMPILER_PACKAGE_VERSION} CXX=clang++${COMPILER_PACKAGE_VERSION};

|

||||

elif command -v clang; then export CC=clang CXX=clang++;

|

||||

fi

|

||||

else

|

||||

die "Unknown compiler specified"

|

||||

fi

|

||||

12

ci/install-compiler-from-sources.sh

Executable file

@ -0,0 +1,12 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

if [[ "$COMPILER" == "gcc" ]]; then

|

||||

. build-gcc-from-sources.sh

|

||||

elif [[ "$COMPILER" == "clang" ]]; then

|

||||

. build-clang-from-sources.sh

|

||||

else

|

||||

die "Unknown COMPILER"

|

||||

fi

|

||||

14

ci/install-libraries.sh

Executable file

@ -0,0 +1,14 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

./install-os-packages.sh libssl-dev

|

||||

./install-os-packages.sh libicu-dev

|

||||

./install-os-packages.sh libreadline-dev

|

||||

./install-os-packages.sh libmariadbclient-dev

|

||||

./install-os-packages.sh libunixodbc-dev

|

||||

|

||||

if [[ "$ENABLE_EMBEDDED_COMPILER" == 1 && "$USE_LLVM_LIBRARIES_FROM_SYSTEM" == 1 ]]; then

|

||||

./install-os-packages.sh llvm-libs-5.0

|

||||

fi

|

||||

120

ci/install-os-packages.sh

Executable file

@ -0,0 +1,120 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

# Dispatches package installation across various operating systems and distributions

|

||||

|

||||

WHAT=$1

|

||||

|

||||

[[ $EUID -ne 0 ]] && SUDO=sudo

|

||||

|

||||

command -v apt-get && PACKAGE_MANAGER=apt

|

||||

command -v yum && PACKAGE_MANAGER=yum

|

||||

command -v pkg && PACKAGE_MANAGER=pkg

|

||||

|

||||

|

||||

case $PACKAGE_MANAGER in

|

||||

apt)

|

||||

case $WHAT in

|

||||

prepare)

|

||||

$SUDO apt-get update

|

||||

;;

|

||||

svn)

|

||||

$SUDO apt-get install -y subversion

|

||||

;;

|

||||

gcc*)

|

||||

$SUDO apt-get install -y $WHAT ${WHAT/cc/++}

|

||||

;;

|

||||

clang*)

|

||||

$SUDO apt-get install -y $WHAT libc++-dev libc++abi-dev

|

||||

[[ $(uname -m) == "x86_64" ]] && $SUDO apt-get install -y ${WHAT/clang/lld} || true

|

||||

;;

|

||||

git)

|

||||

$SUDO apt-get install -y git

|

||||

;;

|

||||

cmake)

|

||||

$SUDO apt-get install -y cmake3 || $SUDO apt-get install -y cmake

|

||||

;;

|

||||

curl)

|

||||

$SUDO apt-get install -y curl

|

||||

;;

|

||||

jq)

|

||||

$SUDO apt-get install -y jq

|

||||

;;

|

||||

libssl-dev)

|

||||

$SUDO apt-get install -y libssl-dev

|

||||

;;

|

||||

libicu-dev)

|

||||

$SUDO apt-get install -y libicu-dev

|

||||

;;

|

||||

libreadline-dev)

|

||||

$SUDO apt-get install -y libreadline-dev

|

||||

;;

|

||||

libunixodbc-dev)

|

||||

$SUDO apt-get install -y unixodbc-dev

|

||||

;;

|

||||

libmariadbclient-dev)

|

||||

$SUDO apt-get install -y libmariadbclient-dev

|

||||

;;

|

||||

llvm-libs*)

|

||||

$SUDO apt-get install -y ${WHAT/llvm-libs/liblld}-dev ${WHAT/llvm-libs/libclang}-dev

|

||||

;;

|

||||

qemu-user-static)

|

||||

$SUDO apt-get install -y qemu-user-static

|

||||

;;

|

||||

vagrant-virtualbox)

|

||||

$SUDO apt-get install -y vagrant virtualbox

|

||||

;;

|

||||

*)

|

||||

echo "Unknown package"; exit 1;

|

||||

;;

|

||||

esac

|

||||

;;

|

||||

pkg)

|

||||

case $WHAT in

|

||||

prepare)

|

||||

;;

|

||||

svn)

|

||||

$SUDO pkg install -y subversion

|

||||

;;

|

||||

gcc*)

|

||||

$SUDO pkg install -y ${WHAT/-/}

|

||||

;;

|

||||

clang*)

|

||||

$SUDO pkg install -y clang-devel

|

||||

;;

|

||||

git)

|

||||

$SUDO pkg install -y git

|

||||

;;

|

||||

cmake)

|

||||

$SUDO pkg install -y cmake

|

||||

;;

|

||||

curl)

|

||||

$SUDO pkg install -y curl

|

||||

;;

|

||||

jq)

|

||||

$SUDO pkg install -y jq

|

||||

;;

|

||||

libssl-dev)

|

||||

$SUDO pkg install -y openssl

|

||||

;;

|

||||

libicu-dev)

|

||||

$SUDO pkg install -y icu

|

||||

;;

|

||||

libreadline-dev)

|

||||

$SUDO pkg install -y readline

|

||||

;;

|

||||

libunixodbc-dev)

|

||||

$SUDO pkg install -y unixODBC libltdl

|

||||

;;

|

||||

libmariadbclient-dev)

|

||||

$SUDO pkg install -y mariadb102-client

|

||||

;;

|

||||

*)

|

||||

echo "Unknown package"; exit 1;

|

||||

;;

|

||||

esac

|

||||

;;

|

||||

*)

|

||||

echo "Unknown distribution"; exit 1;

|

||||

;;

|

||||

esac

|

||||
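Several branches of `install-os-packages.sh` derive a package name from the requested one with `${WHAT/pattern/replacement}`. The three substitutions used there can be sketched in isolation as follows; the function names are illustrative only.

```shell
#!/usr/bin/env bash
# Illustrative helpers mirroring the ${WHAT/pattern/replacement} expansions.
# Each one replaces only the first match of the pattern.
apt_cxx_package() { echo "${1/cc/++}"; }      # gcc-7     -> g++-7
apt_lld_package() { echo "${1/clang/lld}"; }  # clang-6.0 -> lld-6.0
pkg_gcc_package() { echo "${1/-/}"; }         # gcc-7     -> gcc7
```

This keeps the version suffix intact, so a single `COMPILER_PACKAGE_VERSION` value drives both the C and the C++ package names.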

5

ci/jobs/quick-build/README.md

Normal file

@ -0,0 +1,5 @@

|

||||

## Build in debug mode and without many libraries

|

||||

|

||||

This job is intended as a first check that the build is not broken on a wide variety of platforms.

|

||||

|

||||

Results of this build are not intended for production usage.

|

||||

31

ci/jobs/quick-build/run.sh

Executable file

@ -0,0 +1,31 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

# How to run:

|

||||

# From "ci" directory:

|

||||

# jobs/quick-build/run.sh

|

||||

# or:

|

||||

# ./run-with-docker.sh ubuntu:bionic jobs/quick-build/run.sh

|

||||

|

||||

cd "$(dirname "$0")"/../..

|

||||

|

||||

. default-config

|

||||

|

||||

SOURCES_METHOD=local

|

||||

COMPILER=clang

|

||||

COMPILER_INSTALL_METHOD=packages

|

||||

COMPILER_PACKAGE_VERSION=6.0

|

||||

USE_LLVM_LIBRARIES_FROM_SYSTEM=0

|

||||

BUILD_METHOD=normal

|

||||

BUILD_TARGETS=clickhouse

|

||||

BUILD_TYPE=Debug

|

||||

ENABLE_EMBEDDED_COMPILER=0

|

||||

|

||||

CMAKE_FLAGS="-D CMAKE_C_FLAGS_ADD=-g0 -D CMAKE_CXX_FLAGS_ADD=-g0 -D ENABLE_TCMALLOC=0 -D ENABLE_CAPNP=0 -D ENABLE_RDKAFKA=0 -D ENABLE_UNWIND=0 -D ENABLE_ICU=0 -D ENABLE_POCO_MONGODB=0 -D ENABLE_POCO_NETSSL=0 -D ENABLE_POCO_ODBC=0 -D ENABLE_MYSQL=0"

|

||||

|

||||

[[ $(uname) == "FreeBSD" ]] && COMPILER_PACKAGE_VERSION=devel && export COMPILER_PATH=/usr/local/bin

|

||||

|

||||

. get-sources.sh

|

||||

. prepare-toolchain.sh

|

||||

. install-libraries.sh

|

||||

. build-normal.sh

|

||||

23

ci/prepare-docker-image-ubuntu.sh

Executable file

@ -0,0 +1,23 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

./check-docker.sh

|

||||

|

||||

# http://fl47l1n3.net/2015/12/24/binfmt/

|

||||

./install-os-packages.sh qemu-user-static

|

||||

|

||||

pushd docker-multiarch

|

||||

|

||||

$SUDO ./update.sh \

|

||||

-a "$DOCKER_UBUNTU_ARCH" \

|

||||

-v "$DOCKER_UBUNTU_VERSION" \

|

||||

-q "$DOCKER_UBUNTU_QUEMU_ARCH" \

|

||||

-u "$DOCKER_UBUNTU_QEMU_VER" \

|

||||

-d "$DOCKER_UBUNTU_REPO" \

|

||||

-t "$DOCKER_UBUNTU_TAG_ARCH"

|

||||

|

||||

docker run --rm --privileged multiarch/qemu-user-static:register

|

||||

|

||||

popd

|

||||

14

ci/prepare-toolchain.sh

Executable file

@ -0,0 +1,14 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

./install-os-packages.sh cmake

|

||||

|

||||

if [[ "$COMPILER_INSTALL_METHOD" == "packages" ]]; then

|

||||

. install-compiler-from-packages.sh

|

||||

elif [[ "$COMPILER_INSTALL_METHOD" == "sources" ]]; then

|

||||

. install-compiler-from-sources.sh

|

||||

else

|

||||

die "Unknown COMPILER_INSTALL_METHOD"

|

||||

fi

|

||||

12

ci/prepare-vagrant-image-freebsd.sh

Executable file

@ -0,0 +1,12 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

source default-config

|

||||

|

||||

./install-os-packages.sh vagrant-virtualbox

|

||||

|

||||

pushd "vagrant-freebsd"

|

||||

vagrant up

|

||||

vagrant ssh-config > vagrant-ssh

|

||||

ssh -F vagrant-ssh default 'uname -a'

|

||||

popd

|

||||

18

ci/run-clickhouse-from-binaries.sh

Executable file

@ -0,0 +1,18 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

# Usage example:

|

||||

# ./run-with-docker.sh centos:centos6 ./run-clickhouse-from-binaries.sh

|

||||

|

||||

source default-config

|

||||

|

||||

SERVER_BIN="${WORKSPACE}/build/dbms/src/Server/clickhouse"

|

||||

SERVER_CONF="${WORKSPACE}/sources/dbms/src/Server/config.xml"

|

||||

SERVER_DATADIR="${WORKSPACE}/clickhouse"

|

||||

|

||||

[[ -x "$SERVER_BIN" ]] || die "Run build-normal.sh first"

|

||||

[[ -r "$SERVER_CONF" ]] || die "Run get-sources.sh first"

|

||||

|

||||

mkdir -p "${SERVER_DATADIR}"

|

||||

|

||||

$SERVER_BIN server --config-file "$SERVER_CONF" --pid-file="${WORKSPACE}/clickhouse.pid" -- --path "$SERVER_DATADIR"

|

||||

9

ci/run-with-docker.sh

Executable file

@ -0,0 +1,9 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

mkdir -p /var/cache/ccache

|

||||

DOCKER_ENV+=" --mount=type=bind,source=/var/cache/ccache,destination=/ccache -e CCACHE_DIR=/ccache "

|

||||

|

||||

PROJECT_ROOT="$(cd "$(dirname "$0")/.."; pwd -P)"

|

||||

[[ -n "$CONFIG" ]] && DOCKER_ENV+=" --env=CONFIG"

|

||||

docker run -t --network=host --mount=type=bind,source=${PROJECT_ROOT},destination=/ClickHouse --workdir=/ClickHouse/ci $DOCKER_ENV "$1" "$2"

|

||||
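`run-with-docker.sh` collects extra `docker run` arguments in the `DOCKER_ENV` string. A possible alternative, sketched here under the assumption that a bash array is acceptable, avoids relying on word splitting. `build_docker_env` is a hypothetical name and prints one argument per line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: assemble extra docker arguments as an array.
build_docker_env() {
    local args=(
        --mount=type=bind,source=/var/cache/ccache,destination=/ccache
        -e CCACHE_DIR=/ccache
    )
    # Forward CONFIG into the container only when it is set and non-empty.
    if [[ -n "${CONFIG:-}" ]]; then
        args+=(--env=CONFIG)
    fi
    printf '%s\n' "${args[@]}"
}
```

Appending to the array keeps the ccache mount in place when `CONFIG` is also set.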

14

ci/run-with-vagrant.sh

Executable file

@ -0,0 +1,14 @@

|

||||

#!/usr/bin/env bash

|

||||

set -e -x

|

||||

|

||||

[[ -r "vagrant-${1}/vagrant-ssh" ]] || { echo "Run prepare-vagrant-image-... first." >&2; exit 1; }

|

||||

|

||||

pushd vagrant-$1

|

||||

|

||||

shopt -s extglob

|

||||

|

||||

vagrant ssh -c "mkdir -p ClickHouse"

|

||||

scp -q -F vagrant-ssh -r ../../!(*build*) default:~/ClickHouse

|

||||

vagrant ssh -c "cd ClickHouse/ci; $2"

|

||||

|

||||

popd

|

||||

1

ci/vagrant-freebsd/.gitignore

vendored

Normal file

@ -0,0 +1 @@

|

||||

.vagrant

|

||||

3

ci/vagrant-freebsd/Vagrantfile

vendored

Normal file

@ -0,0 +1,3 @@

|

||||

Vagrant.configure("2") do |config|

|

||||

config.vm.box = "generic/freebsd11"

|

||||

end

|

||||

@ -22,6 +22,10 @@ if (NOT MSVC)

|

||||