Mirror of https://github.com/ClickHouse/ClickHouse.git, synced 2024-11-21 23:21:59 +00:00

Merge branch 'ClickHouse:master' into bakwc-patch-2

commit ce87451b66

@@ -73,8 +73,8 @@ struct uint128

uint128() = default;
uint128(uint64 low64_, uint64 high64_) : low64(low64_), high64(high64_) {}
friend bool operator ==(const uint128 & x, const uint128 & y) { return (x.low64 == y.low64) && (x.high64 == y.high64); }
friend bool operator !=(const uint128 & x, const uint128 & y) { return !(x == y); }

friend auto operator<=>(const uint128 &, const uint128 &) = default;
};

inline uint64 Uint128Low64(const uint128 & x) { return x.low64; }

contrib/curl (vendored, 2 changes)
@@ -1 +1 @@
Subproject commit b0edf0b7dae44d9e66f270a257cf654b35d5263d
Subproject commit eb3b049df526bf125eda23218e680ce7fa9ec46c

@@ -8,125 +8,122 @@ endif()
set (LIBRARY_DIR "${ClickHouse_SOURCE_DIR}/contrib/curl")

set (SRCS
"${LIBRARY_DIR}/lib/fopen.c"
"${LIBRARY_DIR}/lib/noproxy.c"
"${LIBRARY_DIR}/lib/idn.c"
"${LIBRARY_DIR}/lib/cfilters.c"
"${LIBRARY_DIR}/lib/cf-socket.c"
"${LIBRARY_DIR}/lib/altsvc.c"
"${LIBRARY_DIR}/lib/amigaos.c"
"${LIBRARY_DIR}/lib/asyn-thread.c"
"${LIBRARY_DIR}/lib/base64.c"
"${LIBRARY_DIR}/lib/bufq.c"
"${LIBRARY_DIR}/lib/bufref.c"
"${LIBRARY_DIR}/lib/cf-h1-proxy.c"
"${LIBRARY_DIR}/lib/cf-haproxy.c"
"${LIBRARY_DIR}/lib/cf-https-connect.c"
"${LIBRARY_DIR}/lib/file.c"
"${LIBRARY_DIR}/lib/timeval.c"
"${LIBRARY_DIR}/lib/base64.c"
"${LIBRARY_DIR}/lib/hostip.c"
"${LIBRARY_DIR}/lib/progress.c"
"${LIBRARY_DIR}/lib/formdata.c"
"${LIBRARY_DIR}/lib/cookie.c"
"${LIBRARY_DIR}/lib/http.c"
"${LIBRARY_DIR}/lib/sendf.c"
"${LIBRARY_DIR}/lib/url.c"
"${LIBRARY_DIR}/lib/dict.c"
"${LIBRARY_DIR}/lib/if2ip.c"
"${LIBRARY_DIR}/lib/speedcheck.c"
"${LIBRARY_DIR}/lib/ldap.c"
"${LIBRARY_DIR}/lib/version.c"
"${LIBRARY_DIR}/lib/getenv.c"
"${LIBRARY_DIR}/lib/escape.c"
"${LIBRARY_DIR}/lib/mprintf.c"
"${LIBRARY_DIR}/lib/telnet.c"
"${LIBRARY_DIR}/lib/netrc.c"
"${LIBRARY_DIR}/lib/getinfo.c"
"${LIBRARY_DIR}/lib/transfer.c"
"${LIBRARY_DIR}/lib/strcase.c"
"${LIBRARY_DIR}/lib/easy.c"
"${LIBRARY_DIR}/lib/curl_fnmatch.c"
"${LIBRARY_DIR}/lib/curl_log.c"
"${LIBRARY_DIR}/lib/fileinfo.c"
"${LIBRARY_DIR}/lib/krb5.c"
"${LIBRARY_DIR}/lib/memdebug.c"
"${LIBRARY_DIR}/lib/http_chunks.c"
"${LIBRARY_DIR}/lib/strtok.c"
"${LIBRARY_DIR}/lib/cf-socket.c"
"${LIBRARY_DIR}/lib/cfilters.c"
"${LIBRARY_DIR}/lib/conncache.c"
"${LIBRARY_DIR}/lib/connect.c"
"${LIBRARY_DIR}/lib/llist.c"
"${LIBRARY_DIR}/lib/hash.c"
"${LIBRARY_DIR}/lib/multi.c"
"${LIBRARY_DIR}/lib/content_encoding.c"
"${LIBRARY_DIR}/lib/share.c"
"${LIBRARY_DIR}/lib/http_digest.c"
"${LIBRARY_DIR}/lib/md4.c"
"${LIBRARY_DIR}/lib/md5.c"
"${LIBRARY_DIR}/lib/http_negotiate.c"
"${LIBRARY_DIR}/lib/inet_pton.c"
"${LIBRARY_DIR}/lib/strtoofft.c"
"${LIBRARY_DIR}/lib/strerror.c"
"${LIBRARY_DIR}/lib/amigaos.c"
"${LIBRARY_DIR}/lib/cookie.c"
"${LIBRARY_DIR}/lib/curl_addrinfo.c"
"${LIBRARY_DIR}/lib/curl_des.c"
"${LIBRARY_DIR}/lib/curl_endian.c"
"${LIBRARY_DIR}/lib/curl_fnmatch.c"
"${LIBRARY_DIR}/lib/curl_get_line.c"
"${LIBRARY_DIR}/lib/curl_gethostname.c"
"${LIBRARY_DIR}/lib/curl_gssapi.c"
"${LIBRARY_DIR}/lib/curl_memrchr.c"
"${LIBRARY_DIR}/lib/curl_multibyte.c"
"${LIBRARY_DIR}/lib/curl_ntlm_core.c"
"${LIBRARY_DIR}/lib/curl_ntlm_wb.c"
"${LIBRARY_DIR}/lib/curl_path.c"
"${LIBRARY_DIR}/lib/curl_range.c"
"${LIBRARY_DIR}/lib/curl_rtmp.c"
"${LIBRARY_DIR}/lib/curl_sasl.c"
"${LIBRARY_DIR}/lib/curl_sspi.c"
"${LIBRARY_DIR}/lib/curl_threads.c"
"${LIBRARY_DIR}/lib/curl_trc.c"
"${LIBRARY_DIR}/lib/dict.c"
"${LIBRARY_DIR}/lib/doh.c"
"${LIBRARY_DIR}/lib/dynbuf.c"
"${LIBRARY_DIR}/lib/dynhds.c"
"${LIBRARY_DIR}/lib/easy.c"
"${LIBRARY_DIR}/lib/escape.c"
"${LIBRARY_DIR}/lib/file.c"
"${LIBRARY_DIR}/lib/fileinfo.c"
"${LIBRARY_DIR}/lib/fopen.c"
"${LIBRARY_DIR}/lib/formdata.c"
"${LIBRARY_DIR}/lib/getenv.c"
"${LIBRARY_DIR}/lib/getinfo.c"
"${LIBRARY_DIR}/lib/gopher.c"
"${LIBRARY_DIR}/lib/hash.c"
"${LIBRARY_DIR}/lib/headers.c"
"${LIBRARY_DIR}/lib/hmac.c"
"${LIBRARY_DIR}/lib/hostasyn.c"
"${LIBRARY_DIR}/lib/hostip.c"
"${LIBRARY_DIR}/lib/hostip4.c"
"${LIBRARY_DIR}/lib/hostip6.c"
"${LIBRARY_DIR}/lib/hostsyn.c"
"${LIBRARY_DIR}/lib/hsts.c"
"${LIBRARY_DIR}/lib/http.c"
"${LIBRARY_DIR}/lib/http2.c"
"${LIBRARY_DIR}/lib/http_aws_sigv4.c"
"${LIBRARY_DIR}/lib/http_chunks.c"
"${LIBRARY_DIR}/lib/http_digest.c"
"${LIBRARY_DIR}/lib/http_negotiate.c"
"${LIBRARY_DIR}/lib/http_ntlm.c"
"${LIBRARY_DIR}/lib/http_proxy.c"
"${LIBRARY_DIR}/lib/idn.c"
"${LIBRARY_DIR}/lib/if2ip.c"
"${LIBRARY_DIR}/lib/imap.c"
"${LIBRARY_DIR}/lib/inet_ntop.c"
"${LIBRARY_DIR}/lib/inet_pton.c"
"${LIBRARY_DIR}/lib/krb5.c"
"${LIBRARY_DIR}/lib/ldap.c"
"${LIBRARY_DIR}/lib/llist.c"
"${LIBRARY_DIR}/lib/md4.c"
"${LIBRARY_DIR}/lib/md5.c"
"${LIBRARY_DIR}/lib/memdebug.c"
"${LIBRARY_DIR}/lib/mime.c"
"${LIBRARY_DIR}/lib/mprintf.c"
"${LIBRARY_DIR}/lib/mqtt.c"
"${LIBRARY_DIR}/lib/multi.c"
"${LIBRARY_DIR}/lib/netrc.c"
"${LIBRARY_DIR}/lib/nonblock.c"
"${LIBRARY_DIR}/lib/noproxy.c"
"${LIBRARY_DIR}/lib/openldap.c"
"${LIBRARY_DIR}/lib/parsedate.c"
"${LIBRARY_DIR}/lib/pingpong.c"
"${LIBRARY_DIR}/lib/pop3.c"
"${LIBRARY_DIR}/lib/progress.c"
"${LIBRARY_DIR}/lib/psl.c"
"${LIBRARY_DIR}/lib/rand.c"
"${LIBRARY_DIR}/lib/rename.c"
"${LIBRARY_DIR}/lib/rtsp.c"
"${LIBRARY_DIR}/lib/select.c"
"${LIBRARY_DIR}/lib/splay.c"
"${LIBRARY_DIR}/lib/strdup.c"
"${LIBRARY_DIR}/lib/sendf.c"
"${LIBRARY_DIR}/lib/setopt.c"
"${LIBRARY_DIR}/lib/sha256.c"
"${LIBRARY_DIR}/lib/share.c"
"${LIBRARY_DIR}/lib/slist.c"
"${LIBRARY_DIR}/lib/smb.c"
"${LIBRARY_DIR}/lib/smtp.c"
"${LIBRARY_DIR}/lib/socketpair.c"
"${LIBRARY_DIR}/lib/socks.c"
"${LIBRARY_DIR}/lib/curl_addrinfo.c"
"${LIBRARY_DIR}/lib/socks_gssapi.c"
"${LIBRARY_DIR}/lib/socks_sspi.c"
"${LIBRARY_DIR}/lib/curl_sspi.c"
"${LIBRARY_DIR}/lib/slist.c"
"${LIBRARY_DIR}/lib/nonblock.c"
"${LIBRARY_DIR}/lib/curl_memrchr.c"
"${LIBRARY_DIR}/lib/imap.c"
"${LIBRARY_DIR}/lib/pop3.c"
"${LIBRARY_DIR}/lib/smtp.c"
"${LIBRARY_DIR}/lib/pingpong.c"
"${LIBRARY_DIR}/lib/rtsp.c"
"${LIBRARY_DIR}/lib/curl_threads.c"
"${LIBRARY_DIR}/lib/warnless.c"
"${LIBRARY_DIR}/lib/hmac.c"
"${LIBRARY_DIR}/lib/curl_rtmp.c"
"${LIBRARY_DIR}/lib/openldap.c"
"${LIBRARY_DIR}/lib/curl_gethostname.c"
"${LIBRARY_DIR}/lib/gopher.c"
"${LIBRARY_DIR}/lib/http_proxy.c"
"${LIBRARY_DIR}/lib/asyn-thread.c"
"${LIBRARY_DIR}/lib/curl_gssapi.c"
"${LIBRARY_DIR}/lib/http_ntlm.c"
"${LIBRARY_DIR}/lib/curl_ntlm_wb.c"
"${LIBRARY_DIR}/lib/curl_ntlm_core.c"
"${LIBRARY_DIR}/lib/curl_sasl.c"
"${LIBRARY_DIR}/lib/rand.c"
"${LIBRARY_DIR}/lib/curl_multibyte.c"
"${LIBRARY_DIR}/lib/conncache.c"
"${LIBRARY_DIR}/lib/cf-h1-proxy.c"
"${LIBRARY_DIR}/lib/http2.c"
"${LIBRARY_DIR}/lib/smb.c"
"${LIBRARY_DIR}/lib/curl_endian.c"
"${LIBRARY_DIR}/lib/curl_des.c"
"${LIBRARY_DIR}/lib/speedcheck.c"
"${LIBRARY_DIR}/lib/splay.c"
"${LIBRARY_DIR}/lib/strcase.c"
"${LIBRARY_DIR}/lib/strdup.c"
"${LIBRARY_DIR}/lib/strerror.c"
"${LIBRARY_DIR}/lib/strtok.c"
"${LIBRARY_DIR}/lib/strtoofft.c"
"${LIBRARY_DIR}/lib/system_win32.c"
"${LIBRARY_DIR}/lib/mime.c"
"${LIBRARY_DIR}/lib/sha256.c"
"${LIBRARY_DIR}/lib/setopt.c"
"${LIBRARY_DIR}/lib/curl_path.c"
"${LIBRARY_DIR}/lib/curl_range.c"
"${LIBRARY_DIR}/lib/psl.c"
"${LIBRARY_DIR}/lib/doh.c"
"${LIBRARY_DIR}/lib/urlapi.c"
"${LIBRARY_DIR}/lib/curl_get_line.c"
"${LIBRARY_DIR}/lib/altsvc.c"
"${LIBRARY_DIR}/lib/socketpair.c"
"${LIBRARY_DIR}/lib/bufref.c"
"${LIBRARY_DIR}/lib/bufq.c"
"${LIBRARY_DIR}/lib/dynbuf.c"
"${LIBRARY_DIR}/lib/dynhds.c"
"${LIBRARY_DIR}/lib/hsts.c"
"${LIBRARY_DIR}/lib/http_aws_sigv4.c"
"${LIBRARY_DIR}/lib/mqtt.c"
"${LIBRARY_DIR}/lib/rename.c"
"${LIBRARY_DIR}/lib/headers.c"
"${LIBRARY_DIR}/lib/telnet.c"
"${LIBRARY_DIR}/lib/timediff.c"
"${LIBRARY_DIR}/lib/vauth/vauth.c"
"${LIBRARY_DIR}/lib/timeval.c"
"${LIBRARY_DIR}/lib/transfer.c"
"${LIBRARY_DIR}/lib/url.c"
"${LIBRARY_DIR}/lib/urlapi.c"
"${LIBRARY_DIR}/lib/vauth/cleartext.c"
"${LIBRARY_DIR}/lib/vauth/cram.c"
"${LIBRARY_DIR}/lib/vauth/digest.c"

@@ -138,23 +135,24 @@ set (SRCS
"${LIBRARY_DIR}/lib/vauth/oauth2.c"
"${LIBRARY_DIR}/lib/vauth/spnego_gssapi.c"
"${LIBRARY_DIR}/lib/vauth/spnego_sspi.c"
"${LIBRARY_DIR}/lib/vauth/vauth.c"
"${LIBRARY_DIR}/lib/version.c"
"${LIBRARY_DIR}/lib/vquic/vquic.c"
"${LIBRARY_DIR}/lib/vtls/openssl.c"
"${LIBRARY_DIR}/lib/vssh/libssh.c"
"${LIBRARY_DIR}/lib/vssh/libssh2.c"
"${LIBRARY_DIR}/lib/vtls/bearssl.c"
"${LIBRARY_DIR}/lib/vtls/gtls.c"
"${LIBRARY_DIR}/lib/vtls/vtls.c"
"${LIBRARY_DIR}/lib/vtls/nss.c"
"${LIBRARY_DIR}/lib/vtls/wolfssl.c"
"${LIBRARY_DIR}/lib/vtls/hostcheck.c"
"${LIBRARY_DIR}/lib/vtls/keylog.c"
"${LIBRARY_DIR}/lib/vtls/mbedtls.c"
"${LIBRARY_DIR}/lib/vtls/openssl.c"
"${LIBRARY_DIR}/lib/vtls/schannel.c"
"${LIBRARY_DIR}/lib/vtls/schannel_verify.c"
"${LIBRARY_DIR}/lib/vtls/sectransp.c"
"${LIBRARY_DIR}/lib/vtls/gskit.c"
"${LIBRARY_DIR}/lib/vtls/mbedtls.c"
"${LIBRARY_DIR}/lib/vtls/bearssl.c"
"${LIBRARY_DIR}/lib/vtls/keylog.c"
"${LIBRARY_DIR}/lib/vtls/vtls.c"
"${LIBRARY_DIR}/lib/vtls/wolfssl.c"
"${LIBRARY_DIR}/lib/vtls/x509asn1.c"
"${LIBRARY_DIR}/lib/vtls/hostcheck.c"
"${LIBRARY_DIR}/lib/vssh/libssh2.c"
"${LIBRARY_DIR}/lib/vssh/libssh.c"
"${LIBRARY_DIR}/lib/warnless.c"
)

add_library (_curl ${SRCS})

@@ -12,6 +12,7 @@ ENV \
# install systemd packages
RUN apt-get update && \
apt-get install -y --no-install-recommends \
sudo \
systemd \
&& \
apt-get clean && \

@@ -1,18 +1,7 @@
# docker build -t clickhouse/performance-comparison .

# Using ubuntu:22.04 over 20.04 as all other images, since:
# a) ubuntu 20.04 has too old parallel, and does not support --memsuspend
# b) anyway for perf tests it should not be important (backward compatibility
# with older ubuntu had been checked lots of times in various tests)
FROM ubuntu:22.04

# ARG for quick switch to a given ubuntu mirror
ARG apt_archive="http://archive.ubuntu.com"
RUN sed -i "s|http://archive.ubuntu.com|$apt_archive|g" /etc/apt/sources.list

ENV LANG=C.UTF-8
ENV TZ=Europe/Amsterdam
RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone
ARG FROM_TAG=latest
FROM clickhouse/test-base:$FROM_TAG

RUN apt-get update \
&& DEBIAN_FRONTEND=noninteractive apt-get install --yes --no-install-recommends \

@@ -56,10 +45,9 @@ COPY * /
# node #0 should be less stable because of system interruptions. We bind
# randomly to node 1 or 0 to gather some statistics on that. We have to bind
# both servers and the tmpfs on which the database is stored. How to do it
# through Yandex Sandbox API is unclear, but by default tmpfs uses
# is unclear, but by default tmpfs uses
# 'process allocation policy', not sure which process but hopefully the one that
# writes to it, so just bind the downloader script as well. We could also try to
# remount it with proper options in Sandbox task.
# writes to it, so just bind the downloader script as well.
# https://www.kernel.org/doc/Documentation/filesystems/tmpfs.txt
# Double-escaped backslashes are a tribute to the engineering wonder of docker --
# it gives '/bin/sh: 1: [bash,: not found' otherwise.

@@ -90,7 +90,7 @@ function configure
set +m

wait_for_server $LEFT_SERVER_PORT $left_pid
echo Server for setup started
echo "Server for setup started"

clickhouse-client --port $LEFT_SERVER_PORT --query "create database test" ||:
clickhouse-client --port $LEFT_SERVER_PORT --query "rename table datasets.hits_v1 to test.hits" ||:

@@ -156,9 +156,9 @@ function restart
wait_for_server $RIGHT_SERVER_PORT $right_pid
echo right ok

clickhouse-client --port $LEFT_SERVER_PORT --query "select * from system.tables where database != 'system'"
clickhouse-client --port $LEFT_SERVER_PORT --query "select * from system.tables where database NOT IN ('system', 'INFORMATION_SCHEMA', 'information_schema')"
clickhouse-client --port $LEFT_SERVER_PORT --query "select * from system.build_options"
clickhouse-client --port $RIGHT_SERVER_PORT --query "select * from system.tables where database != 'system'"
clickhouse-client --port $RIGHT_SERVER_PORT --query "select * from system.tables where database NOT IN ('system', 'INFORMATION_SCHEMA', 'information_schema')"
clickhouse-client --port $RIGHT_SERVER_PORT --query "select * from system.build_options"

# Check again that both servers we started are running -- this is important

@@ -352,14 +352,12 @@ function get_profiles
wait

clickhouse-client --port $LEFT_SERVER_PORT --query "select * from system.query_log where type in ('QueryFinish', 'ExceptionWhileProcessing') format TSVWithNamesAndTypes" > left-query-log.tsv ||: &
clickhouse-client --port $LEFT_SERVER_PORT --query "select * from system.query_thread_log format TSVWithNamesAndTypes" > left-query-thread-log.tsv ||: &
clickhouse-client --port $LEFT_SERVER_PORT --query "select * from system.trace_log format TSVWithNamesAndTypes" > left-trace-log.tsv ||: &
clickhouse-client --port $LEFT_SERVER_PORT --query "select arrayJoin(trace) addr, concat(splitByChar('/', addressToLine(addr))[-1], '#', demangle(addressToSymbol(addr)) ) name from system.trace_log group by addr format TSVWithNamesAndTypes" > left-addresses.tsv ||: &
clickhouse-client --port $LEFT_SERVER_PORT --query "select * from system.metric_log format TSVWithNamesAndTypes" > left-metric-log.tsv ||: &
clickhouse-client --port $LEFT_SERVER_PORT --query "select * from system.asynchronous_metric_log format TSVWithNamesAndTypes" > left-async-metric-log.tsv ||: &

clickhouse-client --port $RIGHT_SERVER_PORT --query "select * from system.query_log where type in ('QueryFinish', 'ExceptionWhileProcessing') format TSVWithNamesAndTypes" > right-query-log.tsv ||: &
clickhouse-client --port $RIGHT_SERVER_PORT --query "select * from system.query_thread_log format TSVWithNamesAndTypes" > right-query-thread-log.tsv ||: &
clickhouse-client --port $RIGHT_SERVER_PORT --query "select * from system.trace_log format TSVWithNamesAndTypes" > right-trace-log.tsv ||: &
clickhouse-client --port $RIGHT_SERVER_PORT --query "select arrayJoin(trace) addr, concat(splitByChar('/', addressToLine(addr))[-1], '#', demangle(addressToSymbol(addr)) ) name from system.trace_log group by addr format TSVWithNamesAndTypes" > right-addresses.tsv ||: &
clickhouse-client --port $RIGHT_SERVER_PORT --query "select * from system.metric_log format TSVWithNamesAndTypes" > right-metric-log.tsv ||: &

@@ -19,31 +19,6 @@
<opentelemetry_span_log remove="remove"/>
<session_log remove="remove"/>

<!-- Performance tests do not use real block devices;
instead they store everything in memory.

And so, to avoid extra memory references, switch *_log to the Memory engine. -->
<query_log>
<engine>ENGINE = Memory</engine>
<partition_by remove="remove"/>
</query_log>
<query_thread_log>
<engine>ENGINE = Memory</engine>
<partition_by remove="remove"/>
</query_thread_log>
<trace_log>
<engine>ENGINE = Memory</engine>
<partition_by remove="remove"/>
</trace_log>
<metric_log>
<engine>ENGINE = Memory</engine>
<partition_by remove="remove"/>
</metric_log>
<asynchronous_metric_log>
<engine>ENGINE = Memory</engine>
<partition_by remove="remove"/>
</asynchronous_metric_log>

<uncompressed_cache_size>1000000000</uncompressed_cache_size>

<asynchronous_metrics_update_period_s>10</asynchronous_metrics_update_period_s>

@@ -31,8 +31,6 @@ function download
# Test all of them.
declare -a urls_to_try=(
"$S3_URL/PRs/$left_pr/$left_sha/$BUILD_NAME/performance.tar.zst"
"$S3_URL/$left_pr/$left_sha/$BUILD_NAME/performance.tar.zst"
"$S3_URL/$left_pr/$left_sha/$BUILD_NAME/performance.tgz"
)

for path in "${urls_to_try[@]}"

@@ -130,7 +130,7 @@ then
git -C right/ch diff --name-only "$base" pr -- :!tests/performance :!docker/test/performance-comparison | tee other-changed-files.txt
fi

# Set python output encoding so that we can print queries with Russian letters.
# Set python output encoding so that we can print queries with non-ASCII letters.
export PYTHONIOENCODING=utf-8

# By default, use the main comparison script from the tested package, so that we

@@ -151,11 +151,7 @@ export PATH
export REF_PR
export REF_SHA

# Try to collect some core dumps. I've seen two patterns in Sandbox:
# 1) |/home/zomb-sandbox/venv/bin/python /home/zomb-sandbox/client/sandbox/bin/coredumper.py %e %p %g %u %s %P %c
# Not sure what this script does (puts them to sandbox resources, logs some messages?),
# and it's not accessible from inside docker anyway.
# 2) something like %e.%p.core.dmp. The dump should end up in the workspace directory.
# Try to collect some core dumps.
# At least we remove the ulimit and then try to pack some common file names into output.
ulimit -c unlimited
cat /proc/sys/kernel/core_pattern

@@ -1,5 +1,5 @@
# docker build -t clickhouse/style-test .
FROM ubuntu:20.04
FROM ubuntu:22.04
ARG ACT_VERSION=0.2.33
ARG ACTIONLINT_VERSION=1.6.22

@@ -190,7 +190,7 @@ These are the schema conversion manipulations you can do with table overrides fo
* Modify [column TTL](/docs/en/engines/table-engines/mergetree-family/mergetree.md/#mergetree-column-ttl).
* Modify [column compression codec](/docs/en/sql-reference/statements/create/table.md/#codecs).
* Add [ALIAS columns](/docs/en/sql-reference/statements/create/table.md/#alias).
* Add [skipping indexes](/docs/en/engines/table-engines/mergetree-family/mergetree.md/#table_engine-mergetree-data_skipping-indexes)
* Add [skipping indexes](/docs/en/engines/table-engines/mergetree-family/mergetree.md/#table_engine-mergetree-data_skipping-indexes). Note that you need to enable the `use_skip_indexes_if_final` setting to make them work (MaterializedMySQL uses `SELECT ... FINAL` by default).
* Add [projections](/docs/en/engines/table-engines/mergetree-family/mergetree.md/#projections). Note that projection optimizations are
disabled when using `SELECT ... FINAL` (which MaterializedMySQL does by default), so their utility is limited here.
`INDEX ... TYPE hypothesis` as [described in the v21.12 blog post](https://clickhouse.com/blog/en/2021/clickhouse-v21.12-released/)

@@ -1,4 +1,4 @@
# Approximate Nearest Neighbor Search Indexes [experimental] {#table_engines-ANNIndex}
# Approximate Nearest Neighbor Search Indexes [experimental]

Nearest neighborhood search is the problem of finding the M closest points for a given point in an N-dimensional vector space. The most
straightforward approach to solve this problem is a brute force search where the distance between all points in the vector space and the

@@ -17,7 +17,7 @@ In terms of SQL, the nearest neighborhood problem can be expressed as follows:

``` sql
SELECT *
FROM table
FROM table_with_ann_index
ORDER BY Distance(vectors, Point)
LIMIT N
```

@@ -32,7 +32,7 @@ An alternative formulation of the nearest neighborhood search problem looks as f

``` sql
SELECT *
FROM table
FROM table_with_ann_index
WHERE Distance(vectors, Point) < MaxDistance
LIMIT N
```

@@ -45,12 +45,12 @@ With brute force search, both queries are expensive (linear in the number of poi
`Point` must be computed. To speed this process up, Approximate Nearest Neighbor Search Indexes (ANN indexes) store a compact representation
of the search space (using clustering, search trees, etc.) which allows to compute an approximate answer much quicker (in sub-linear time).
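
To make the linear cost of brute-force search concrete, here is a minimal Python sketch of the two query forms. It is an illustration only and not part of the commit or of ClickHouse: the names `l2_distance`, `nearest_n`, and `within_distance` and the sample rows are hypothetical. Both functions look at rows one by one, which is the work an ANN index is meant to avoid.

```python
import heapq
import math


def l2_distance(a, b):
    """Euclidean (L2) distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_n(rows, point, n):
    """Mimics ORDER BY Distance(vectors, Point) LIMIT N by scanning every row."""
    return heapq.nsmallest(n, rows, key=lambda row: l2_distance(row[1], point))


def within_distance(rows, point, max_distance, n):
    """Mimics WHERE Distance(vectors, Point) < MaxDistance LIMIT N by scanning rows."""
    result = []
    for row in rows:
        if l2_distance(row[1], point) < max_distance:
            result.append(row)
            if len(result) == n:
                break
    return result


# Hypothetical (id, vector) rows standing in for a table.
rows = [(1, [0.0, 0.0]), (2, [1.0, 1.0]), (3, [5.0, 5.0]), (4, [0.5, 0.0])]
point = [0.0, 0.0]

print(nearest_n(rows, point, 2))
print(within_distance(rows, point, 2.0, 10))
```

The first query must compute a distance for every row before it can pick the closest ones; the second can stop early only once it has found enough matches.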

# Creating and Using ANN Indexes
# Creating and Using ANN Indexes {#creating_using_ann_indexes}

Syntax to create an ANN index over an [Array](../../../sql-reference/data-types/array.md) column:

```sql
CREATE TABLE table
CREATE TABLE table_with_ann_index
(
`id` Int64,
`vectors` Array(Float32),

@@ -63,7 +63,7 @@ ORDER BY id;
Syntax to create an ANN index over a [Tuple](../../../sql-reference/data-types/tuple.md) column:

```sql
CREATE TABLE table
CREATE TABLE table_with_ann_index
(
`id` Int64,
`vectors` Tuple(Float32[, Float32[, ...]]),

@@ -83,7 +83,7 @@ ANN indexes support two types of queries:

``` sql
SELECT *
FROM table
FROM table_with_ann_index
[WHERE ...]
ORDER BY Distance(vectors, Point)
LIMIT N

@@ -93,7 +93,7 @@ ANN indexes support two types of queries:

``` sql
SELECT *
FROM table
FROM table_with_ann_index
WHERE Distance(vectors, Point) < MaxDistance
LIMIT N
```

@@ -103,7 +103,7 @@ To avoid writing out large vectors, you can use [query
parameters](/docs/en/interfaces/cli.md#queries-with-parameters-cli-queries-with-parameters), e.g.

```bash
clickhouse-client --param_vec='hello' --query="SELECT * FROM table WHERE L2Distance(vectors, {vec: Array(Float32)}) < 1.0"
clickhouse-client --param_vec='hello' --query="SELECT * FROM table_with_ann_index WHERE L2Distance(vectors, {vec: Array(Float32)}) < 1.0"
```
:::

@@ -138,7 +138,7 @@ back to a smaller `GRANULARITY` values only in case of problems like excessive m
was specified for ANN indexes, the default value is 100 million.


# Available ANN Indexes
# Available ANN Indexes {#available_ann_indexes}

- [Annoy](/docs/en/engines/table-engines/mergetree-family/annindexes.md#annoy-annoy)

@@ -165,7 +165,7 @@ space in random linear surfaces (lines in 2D, planes in 3D etc.).
Syntax to create an Annoy index over an [Array](../../../sql-reference/data-types/array.md) column:

```sql
CREATE TABLE table
CREATE TABLE table_with_annoy_index
(
id Int64,
vectors Array(Float32),

@@ -178,7 +178,7 @@ ORDER BY id;
Syntax to create an ANN index over a [Tuple](../../../sql-reference/data-types/tuple.md) column:

```sql
CREATE TABLE table
CREATE TABLE table_with_annoy_index
(
id Int64,
vectors Tuple(Float32[, Float32[, ...]]),

@ -188,23 +188,17 @@ ENGINE = MergeTree

|

||||

ORDER BY id;

|

||||

```

|

||||

|

||||

Annoy currently supports `L2Distance` and `cosineDistance` as distance function `Distance`. If no distance function was specified during

|

||||

index creation, `L2Distance` is used as default. Parameter `NumTrees` is the number of trees which the algorithm creates (default if not

|

||||

specified: 100). Higher values of `NumTree` mean more accurate search results but slower index creation / query times (approximately

|

||||

linearly) as well as larger index sizes.

|

||||

Annoy currently supports two distance functions:

|

||||

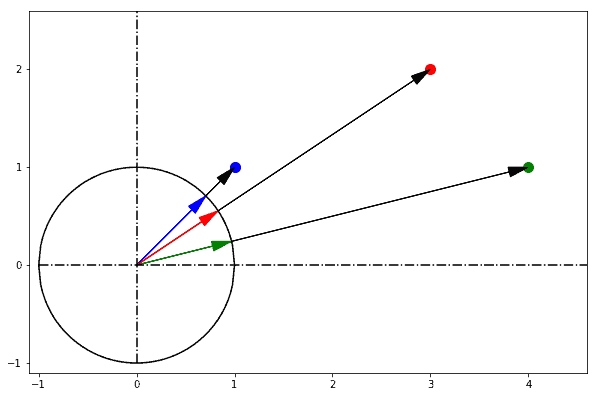

- `L2Distance`, also called Euclidean distance, is the length of a line segment between two points in Euclidean space

|

||||

([Wikipedia](https://en.wikipedia.org/wiki/Euclidean_distance)).

|

||||

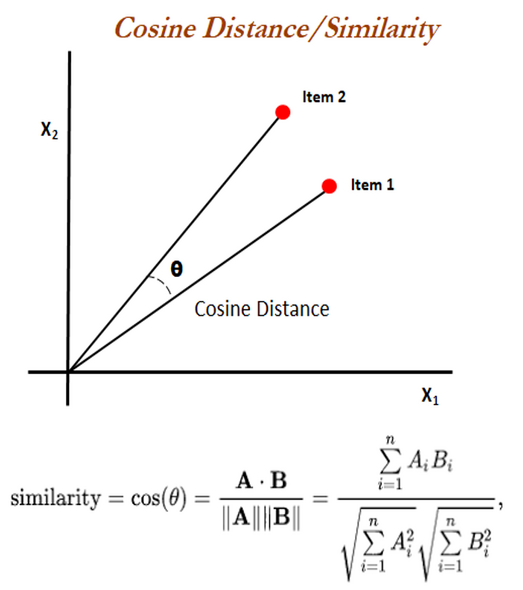

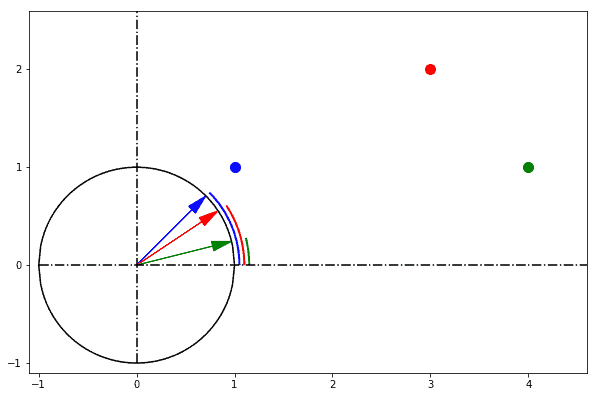

- `cosineDistance`, also called cosine similarity, is the cosine of the angle between two (non-zero) vectors

|

||||

([Wikipedia](https://en.wikipedia.org/wiki/Cosine_similarity)).

|

||||

|

||||

`L2Distance` is also called Euclidean distance, the Euclidean distance between two points in Euclidean space is the length of a line segment between the two points.

|

||||

For example: If we have point P(p1,p2), Q(q1,q2), their distance will be d(p,q)

|

||||

|

||||

For normalized data, `L2Distance` is usually a better choice, otherwise `cosineDistance` is recommended to compensate for scale. If no

|

||||

distance function was specified during index creation, `L2Distance` is used as default.

|

||||

|

||||

`cosineDistance` also called cosine similarity is a measure of similarity between two non-zero vectors defined in an inner product space. Cosine similarity is the cosine of the angle between the vectors; that is, it is the dot product of the vectors divided by the product of their lengths.

|

||||

|

||||

|

||||

The Euclidean distance corresponds to the L2-norm of a difference between vectors. The cosine similarity is proportional to the dot product of two vectors and inversely proportional to the product of their magnitudes.

|

||||

|

||||

In one sentence: cosine similarity care only about the angle between them, but do not care about the "distance" we normally think.

|

||||

|

||||

|

||||

Parameter `NumTrees` is the number of trees which the algorithm creates (default if not specified: 100). Higher values of `NumTree` mean

|

||||

more accurate search results but slower index creation / query times (approximately linearly) as well as larger index sizes.
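
The difference between the two distance functions can be illustrated with a short Python sketch. It is an illustration only and not part of the commit: the helper names are hypothetical, and this is not how ClickHouse or Annoy compute distances internally. It also assumes that cosine distance is one minus the cosine similarity, which is the usual convention and is assumed here to match ClickHouse's `cosineDistance`.

```python
import math


def l2_distance(p, q):
    """Length of the line segment between p and q."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def cosine_similarity(p, q):
    """Dot product divided by the product of the vector lengths."""
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    return dot / (norm_p * norm_q)


def cosine_distance(p, q):
    """Assumed convention: one minus the cosine similarity."""
    return 1.0 - cosine_similarity(p, q)


p = [1.0, 2.0]
q = [10.0, 20.0]  # same direction as p, ten times the magnitude

print(l2_distance(p, q))      # large: the points are far apart
print(cosine_distance(p, q))  # close to zero: the vectors point the same way
```

Scaling a vector changes its L2 distance to other points but not its angle to them, which is why the choice between the two depends on whether the data is normalized.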

:::note
Indexes over columns of type `Array` will generally work faster than indexes on `Tuple` columns. All arrays **must** have same length. Use

@@ -11,7 +11,7 @@ Inserts data into a table.
**Syntax**

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...
```

You can specify a list of columns to insert using `(c1, c2, c3)`. You can also use an expression with column [matcher](../../sql-reference/statements/select/index.md#asterisk) such as `*` and/or [modifiers](../../sql-reference/statements/select/index.md#select-modifiers) such as [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#except-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier).

@@ -107,7 +107,7 @@ If table has [constraints](../../sql-reference/statements/create/table.md#constr
**Syntax**

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] SELECT ...
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] SELECT ...
```

Columns are mapped according to their position in the SELECT clause. However, their names in the SELECT expression and the table for INSERT may differ. If necessary, type casting is performed.

@@ -126,7 +126,7 @@ To insert a default value instead of `NULL` into a column with not nullable data
**Syntax**

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] FROM INFILE file_name [COMPRESSION type] FORMAT format_name
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] FROM INFILE file_name [COMPRESSION type] FORMAT format_name
```

Use the syntax above to insert data from a file, or files, stored on the **client** side. `file_name` and `type` are string literals. Input file [format](../../interfaces/formats.md) must be set in the `FORMAT` clause.

@@ -11,7 +11,7 @@ sidebar_label: INSERT INTO
**Syntax**

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...
```

You can specify a list of columns to insert using the `(c1, c2, c3)` syntax. You can also use an expression with an [asterisk](../../sql-reference/statements/select/index.md#asterisk) and/or modifiers such as [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#except-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier).

@ -100,7 +100,7 @@ INSERT INTO t FORMAT TabSeparated
**Syntax**

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] SELECT ...
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] SELECT ...
```

Columns are matched by their position in the SELECT clause. Their names in the SELECT expression and in the table for INSERT may differ. If necessary, type casting is performed, equivalent to the corresponding CAST operator.

|

||||

@ -120,7 +120,7 @@ INSERT INTO [db.]table [(c1, c2, c3)] SELECT ...
**Syntax**

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] FROM INFILE file_name [COMPRESSION type] FORMAT format_name
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] FROM INFILE file_name [COMPRESSION type] FORMAT format_name
```

Use this syntax to insert data from a file stored on the **client** side. `file_name` and `type` are specified as string literals. The input file [format](../../interfaces/formats.md) must be set in the `FORMAT` clause.

|

||||

|

||||

@ -8,7 +8,7 @@ The INSERT INTO statement is mainly used to add data to the system.
Basic query format:

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...
```

You can specify a list of columns to insert in the query, for example `[(c1, c2, c3)]`. You can also use an expression with a column [matcher](../../sql-reference/statements/select/index.md#asterisk) such as `*` and/or [modifiers](../../sql-reference/statements/select/index.md#select-modifiers) such as [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#except-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier).

|

||||

@ -71,7 +71,7 @@ INSERT INTO [db.]table [(c1, c2, c3)] FORMAT format_name data_set
For example, the following query uses the same input format as the INSERT … VALUES form above:

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] FORMAT Values (v11, v12, v13), (v21, v22, v23), ...
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] FORMAT Values (v11, v12, v13), (v21, v22, v23), ...
```

ClickHouse removes all whitespace and one line feed (if there is one) before the data. When writing a query, we recommend placing the data on a new line after the format name (this matters if the data begins with whitespace).

|

||||

@ -93,7 +93,7 @@ INSERT INTO t FORMAT TabSeparated
### Inserting the Results of `SELECT` {#inserting-the-results-of-select}

``` sql
INSERT INTO [db.]table [(c1, c2, c3)] SELECT ...
INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] SELECT ...
```

Columns are mapped to the SELECT columns by position, even though their names in the SELECT expression and in the INSERT may differ. If necessary, the corresponding type conversion is performed.

|

||||

|

||||

@ -997,7 +997,9 @@ namespace

|

||||

{

|

||||

/// sudo respects limits in /etc/security/limits.conf e.g. open files,

|

||||

/// that's why we are using it instead of the 'clickhouse su' tool.

|

||||

command = fmt::format("sudo -u '{}' {}", user, command);

|

||||

/// By default, sudo resets all the ENV variables, but we should preserve
/// the values from /etc/default/clickhouse that are set in the /etc/init.d/clickhouse file.

|

||||

command = fmt::format("sudo --preserve-env -u '{}' {}", user, command);

|

||||

}

|

||||

|

||||

fmt::print("Will run {}\n", command);

|

||||

|

||||

@ -2,6 +2,8 @@

|

||||

|

||||

#include <sys/resource.h>

|

||||

#include <Common/logger_useful.h>

|

||||

#include <Common/formatReadable.h>

|

||||

#include <base/getMemoryAmount.h>

|

||||

#include <base/errnoToString.h>

|

||||

#include <Poco/Util/XMLConfiguration.h>

|

||||

#include <Poco/String.h>

|

||||

@ -655,43 +657,66 @@ void LocalServer::processConfig()
/// There is no need for concurrent queries, override max_concurrent_queries.
global_context->getProcessList().setMaxSize(0);

/// Size of cache for uncompressed blocks. Zero means disabled.
String uncompressed_cache_policy = config().getString("uncompressed_cache_policy", "");
size_t uncompressed_cache_size = config().getUInt64("uncompressed_cache_size", 0);
const size_t memory_amount = getMemoryAmount();
const double cache_size_to_ram_max_ratio = config().getDouble("cache_size_to_ram_max_ratio", 0.5);
const size_t max_cache_size = static_cast<size_t>(memory_amount * cache_size_to_ram_max_ratio);

String uncompressed_cache_policy = config().getString("uncompressed_cache_policy", DEFAULT_UNCOMPRESSED_CACHE_POLICY);
size_t uncompressed_cache_size = config().getUInt64("uncompressed_cache_size", DEFAULT_UNCOMPRESSED_CACHE_MAX_SIZE);
if (uncompressed_cache_size > max_cache_size)
{
uncompressed_cache_size = max_cache_size;
LOG_INFO(log, "Lowered uncompressed cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(uncompressed_cache_size));
}
if (uncompressed_cache_size)
global_context->setUncompressedCache(uncompressed_cache_policy, uncompressed_cache_size);

/// Size of cache for marks (index of MergeTree family of tables).
String mark_cache_policy = config().getString("mark_cache_policy", "");
size_t mark_cache_size = config().getUInt64("mark_cache_size", 5368709120);
String mark_cache_policy = config().getString("mark_cache_policy", DEFAULT_MARK_CACHE_POLICY);
size_t mark_cache_size = config().getUInt64("mark_cache_size", DEFAULT_MARK_CACHE_MAX_SIZE);
if (!mark_cache_size)
LOG_ERROR(log, "Too low mark cache size will lead to severe performance degradation.");
if (mark_cache_size > max_cache_size)
{
mark_cache_size = max_cache_size;
LOG_INFO(log, "Lowered mark cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(mark_cache_size));
}
if (mark_cache_size)
global_context->setMarkCache(mark_cache_policy, mark_cache_size);

/// Size of cache for uncompressed blocks of MergeTree indices. Zero means disabled.
size_t index_uncompressed_cache_size = config().getUInt64("index_uncompressed_cache_size", 0);
size_t index_uncompressed_cache_size = config().getUInt64("index_uncompressed_cache_size", DEFAULT_INDEX_UNCOMPRESSED_CACHE_MAX_SIZE);
if (index_uncompressed_cache_size > max_cache_size)
{
index_uncompressed_cache_size = max_cache_size;
LOG_INFO(log, "Lowered index uncompressed cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(index_uncompressed_cache_size));
}
if (index_uncompressed_cache_size)
global_context->setIndexUncompressedCache(index_uncompressed_cache_size);

/// Size of cache for index marks (index of MergeTree skip indices).
size_t index_mark_cache_size = config().getUInt64("index_mark_cache_size", 0);
size_t index_mark_cache_size = config().getUInt64("index_mark_cache_size", DEFAULT_INDEX_MARK_CACHE_MAX_SIZE);
if (index_mark_cache_size > max_cache_size)
{
index_mark_cache_size = max_cache_size;
LOG_INFO(log, "Lowered index mark cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(index_mark_cache_size));
}
if (index_mark_cache_size)
global_context->setIndexMarkCache(index_mark_cache_size);

/// A cache for mmapped files.
size_t mmap_cache_size = config().getUInt64("mmap_cache_size", 1000); /// The choice of default is arbitrary.
size_t mmap_cache_size = config().getUInt64("mmap_cache_size", DEFAULT_MMAP_CACHE_MAX_SIZE);
if (mmap_cache_size > max_cache_size)
{
mmap_cache_size = max_cache_size;
LOG_INFO(log, "Lowered mmap file cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(mmap_cache_size));
}
if (mmap_cache_size)
global_context->setMMappedFileCache(mmap_cache_size);

/// In Server.cpp (./clickhouse-server), we would initialize the query cache here.
/// Intentionally not doing this in clickhouse-local as it doesn't make sense.

#if USE_EMBEDDED_COMPILER
/// 128 MB
constexpr size_t compiled_expression_cache_size_default = 1024 * 1024 * 128;
size_t compiled_expression_cache_size = config().getUInt64("compiled_expression_cache_size", compiled_expression_cache_size_default);

constexpr size_t compiled_expression_cache_elements_size_default = 10000;
size_t compiled_expression_cache_elements_size
= config().getUInt64("compiled_expression_cache_elements_size", compiled_expression_cache_elements_size_default);

CompiledExpressionCacheFactory::instance().init(compiled_expression_cache_size, compiled_expression_cache_elements_size);
size_t compiled_expression_cache_max_size_in_bytes = config().getUInt64("compiled_expression_cache_size", DEFAULT_COMPILED_EXPRESSION_CACHE_MAX_SIZE);
size_t compiled_expression_cache_max_elements = config().getUInt64("compiled_expression_cache_elements_size", DEFAULT_COMPILED_EXPRESSION_CACHE_MAX_ENTRIES);
CompiledExpressionCacheFactory::instance().init(compiled_expression_cache_max_size_in_bytes, compiled_expression_cache_max_elements);
#endif

/// NOTE: it is important to apply any overrides before

|

||||

|

||||
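The hunk above repeats one pattern for every cache: read the configured size, cap it at `memory_amount * cache_size_to_ram_max_ratio`, and log when the cap was applied. A minimal sketch of that pattern follows, assuming a hypothetical helper `clampCacheSize` and a hypothetical result struct `ClampResult`; neither name exists in ClickHouse, and the real code inlines the comparison for each cache.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical result type (not part of ClickHouse): the size to use and
// whether the configured value had to be reduced.
struct ClampResult
{
    std::size_t size;
    bool lowered;
};

// Hypothetical helper (not part of ClickHouse): caps a configured cache size
// at a fraction of the available RAM, as each block in the hunk does inline.
inline ClampResult clampCacheSize(std::size_t configured_size, std::size_t memory_amount, double max_ratio)
{
    assert(max_ratio >= 0.0 && max_ratio <= 1.0);
    const auto max_cache_size = static_cast<std::size_t>(memory_amount * max_ratio);
    if (configured_size > max_cache_size)
        return {max_cache_size, true};   // caller would log the lowered size here
    return {configured_size, false};
}
```

With 8 GiB of RAM and a ratio of 0.5, a configured 5 GiB cache would be lowered to 4 GiB. A helper of this shape would also force each log message to print the value it actually clamped, which is easy to get wrong when the block is copied by hand.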

@ -365,17 +365,14 @@ static void transformFixedString(const UInt8 * src, UInt8 * dst, size_t size, UI

|

||||

hash.update(seed);

|

||||

hash.update(i);

|

||||

|

||||

const auto checksum = getSipHash128AsArray(hash);

|

||||

if (size >= 16)

|

||||

{

|

||||

char * hash_dst = reinterpret_cast<char *>(std::min(pos, end - 16));

|

||||

hash.get128(hash_dst);

|

||||

auto * hash_dst = std::min(pos, end - 16);

|

||||

memcpy(hash_dst, checksum.data(), checksum.size());

|

||||

}

|

||||

else

|

||||

{

|

||||

char value[16];

|

||||

hash.get128(value);

|

||||

memcpy(dst, value, end - dst);

|

||||

}

|

||||

memcpy(dst, checksum.data(), end - dst);

|

||||

|

||||

pos += 16;

|

||||

++i;

|

||||
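The loop in this hunk fills a buffer with 16-byte hash blocks and handles a tail shorter than 16 bytes by shifting the last block left so that it ends exactly at the end of the buffer. The sketch below illustrates only that placement logic. `makeBlock` is a hypothetical stand-in that produces 16 deterministic bytes; it is not SipHash, and `fillWithBlocks` is not a ClickHouse function. Offsets are used instead of raw pointers so the index never moves past the buffer.

```cpp
#include <algorithm>
#include <array>
#include <cstddef>
#include <cstdint>
#include <cstring>

// Hypothetical stand-in for the 128-bit hash result: NOT a real hash,
// just 16 deterministic bytes derived from seed and block index.
inline std::array<std::uint8_t, 16> makeBlock(std::uint64_t seed, std::uint64_t index)
{
    std::array<std::uint8_t, 16> block{};
    for (std::size_t j = 0; j < block.size(); ++j)
        block[j] = static_cast<std::uint8_t>(seed + index * 16 + j);
    return block;
}

// Fills dst[0, size) with consecutive 16-byte blocks.
inline void fillWithBlocks(std::uint8_t * dst, std::size_t size, std::uint64_t seed)
{
    for (std::size_t pos = 0, i = 0; pos < size; pos += 16, ++i)
    {
        const auto block = makeBlock(seed, i);
        if (size >= 16)
        {
            // The last block may not fit: shift it left so it ends at dst + size,
            // overwriting part of the previous block.
            const std::size_t block_pos = std::min(pos, size - 16);
            std::memcpy(dst + block_pos, block.data(), block.size());
        }
        else
        {
            // Buffer shorter than one block: copy only the bytes that fit.
            std::memcpy(dst, block.data(), size);
        }
    }
}
```

For a 20-byte buffer, the second block lands at offset 4 rather than 16, so bytes 4 to 15 of the first block are overwritten and every output byte is still covered by hash output.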

@ -401,7 +398,7 @@ static void transformUUID(const UUID & src_uuid, UUID & dst_uuid, UInt64 seed)

|

||||

hash.update(reinterpret_cast<const char *>(&src), sizeof(UUID));

|

||||

|

||||

/// Saving version and variant from an old UUID

|

||||

hash.get128(reinterpret_cast<char *>(&dst));

|

||||

dst = hash.get128();

|

||||

|

||||

dst.items[1] = (dst.items[1] & 0x1fffffffffffffffull) | (src.items[1] & 0xe000000000000000ull);

|

||||

dst.items[0] = (dst.items[0] & 0xffffffffffff0fffull) | (src.items[0] & 0x000000000000f000ull);

|

||||

|

||||

@ -29,6 +29,7 @@

|

||||

#include <Common/ShellCommand.h>

|

||||

#include <Common/ZooKeeper/ZooKeeper.h>

|

||||

#include <Common/ZooKeeper/ZooKeeperNodeCache.h>

|

||||

#include <Common/formatReadable.h>

|

||||

#include <Common/getMultipleKeysFromConfig.h>

|

||||

#include <Common/getNumberOfPhysicalCPUCores.h>

|

||||

#include <Common/getExecutablePath.h>

|

||||

@ -658,7 +659,7 @@ try

|

||||

global_context->addWarningMessage("Server was built with sanitizer. It will work slowly.");

|

||||

#endif

|

||||

|

||||

const auto memory_amount = getMemoryAmount();

|

||||

const size_t memory_amount = getMemoryAmount();

|

||||

|

||||

LOG_INFO(log, "Available RAM: {}; physical cores: {}; logical cores: {}.",

|

||||

formatReadableSizeWithBinarySuffix(memory_amount),

|

||||

@ -1485,16 +1486,14 @@ try

|

||||

|

||||

/// Set up caches.

|

||||

|

||||

size_t max_cache_size = static_cast<size_t>(memory_amount * server_settings.cache_size_to_ram_max_ratio);

|

||||

const size_t max_cache_size = static_cast<size_t>(memory_amount * server_settings.cache_size_to_ram_max_ratio);

|

||||

|

||||

String uncompressed_cache_policy = server_settings.uncompressed_cache_policy;

|

||||

LOG_INFO(log, "Uncompressed cache policy name {}", uncompressed_cache_policy);

|

||||

size_t uncompressed_cache_size = server_settings.uncompressed_cache_size;

|

||||

if (uncompressed_cache_size > max_cache_size)

|

||||

{

|

||||

uncompressed_cache_size = max_cache_size;

|

||||

LOG_INFO(log, "Uncompressed cache size was lowered to {} because the system has low amount of memory",

|

||||

formatReadableSizeWithBinarySuffix(uncompressed_cache_size));

|

||||

LOG_INFO(log, "Lowered uncompressed cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(uncompressed_cache_size));

|

||||

}

|

||||

global_context->setUncompressedCache(uncompressed_cache_policy, uncompressed_cache_size);

|

||||

|

||||

@ -1513,39 +1512,59 @@ try
server_settings.async_insert_queue_flush_on_shutdown));
}

size_t mark_cache_size = server_settings.mark_cache_size;
String mark_cache_policy = server_settings.mark_cache_policy;
size_t mark_cache_size = server_settings.mark_cache_size;
if (!mark_cache_size)
LOG_ERROR(log, "Too low mark cache size will lead to severe performance degradation.");
if (mark_cache_size > max_cache_size)
{
mark_cache_size = max_cache_size;
LOG_INFO(log, "Mark cache size was lowered to {} because the system has low amount of memory",
formatReadableSizeWithBinarySuffix(mark_cache_size));
LOG_INFO(log, "Lowered mark cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(mark_cache_size));
}
global_context->setMarkCache(mark_cache_policy, mark_cache_size);

if (server_settings.index_uncompressed_cache_size)
size_t index_uncompressed_cache_size = server_settings.index_uncompressed_cache_size;
if (index_uncompressed_cache_size > max_cache_size)
{
index_uncompressed_cache_size = max_cache_size;
LOG_INFO(log, "Lowered index uncompressed cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(index_uncompressed_cache_size));
}
if (index_uncompressed_cache_size)
global_context->setIndexUncompressedCache(index_uncompressed_cache_size);

if (server_settings.index_mark_cache_size)
size_t index_mark_cache_size = server_settings.index_mark_cache_size;
if (index_mark_cache_size > max_cache_size)
{
index_mark_cache_size = max_cache_size;
LOG_INFO(log, "Lowered index mark cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(index_mark_cache_size));
}
if (index_mark_cache_size)
global_context->setIndexMarkCache(index_mark_cache_size);

if (server_settings.mmap_cache_size)
size_t mmap_cache_size = server_settings.mmap_cache_size;
if (mmap_cache_size > max_cache_size)
{
mmap_cache_size = max_cache_size;
LOG_INFO(log, "Lowered mmap file cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(mmap_cache_size));
}
if (mmap_cache_size)
global_context->setMMappedFileCache(mmap_cache_size);

/// A cache for query results.
global_context->setQueryCache(config());
size_t query_cache_max_size_in_bytes = config().getUInt64("query_cache.max_size_in_bytes", DEFAULT_QUERY_CACHE_MAX_SIZE);
size_t query_cache_max_entries = config().getUInt64("query_cache.max_entries", DEFAULT_QUERY_CACHE_MAX_ENTRIES);
size_t query_cache_query_cache_max_entry_size_in_bytes = config().getUInt64("query_cache.max_entry_size_in_bytes", DEFAULT_QUERY_CACHE_MAX_ENTRY_SIZE_IN_BYTES);
size_t query_cache_max_entry_size_in_rows = config().getUInt64("query_cache.max_entry_size_in_rows", DEFAULT_QUERY_CACHE_MAX_ENTRY_SIZE_IN_ROWS);
if (query_cache_max_size_in_bytes > max_cache_size)
{
query_cache_max_size_in_bytes = max_cache_size;
LOG_INFO(log, "Lowered query cache size to {} because the system has limited RAM", formatReadableSizeWithBinarySuffix(query_cache_max_size_in_bytes));
}
global_context->setQueryCache(query_cache_max_size_in_bytes, query_cache_max_entries, query_cache_query_cache_max_entry_size_in_bytes, query_cache_max_entry_size_in_rows);

#if USE_EMBEDDED_COMPILER
/// 128 MB
constexpr size_t compiled_expression_cache_size_default = 1024 * 1024 * 128;
size_t compiled_expression_cache_size = config().getUInt64("compiled_expression_cache_size", compiled_expression_cache_size_default);

constexpr size_t compiled_expression_cache_elements_size_default = 10000;
size_t compiled_expression_cache_elements_size = config().getUInt64("compiled_expression_cache_elements_size", compiled_expression_cache_elements_size_default);

CompiledExpressionCacheFactory::instance().init(compiled_expression_cache_size, compiled_expression_cache_elements_size);
size_t compiled_expression_cache_max_size_in_bytes = config().getUInt64("compiled_expression_cache_size", DEFAULT_COMPILED_EXPRESSION_CACHE_MAX_SIZE);
size_t compiled_expression_cache_max_elements = config().getUInt64("compiled_expression_cache_elements_size", DEFAULT_COMPILED_EXPRESSION_CACHE_MAX_ENTRIES);
CompiledExpressionCacheFactory::instance().init(compiled_expression_cache_max_size_in_bytes, compiled_expression_cache_max_elements);
#endif

/// Set path for format schema files

|

||||

|

||||

@ -315,10 +315,9 @@ struct Adder

|

||||

{

|

||||

StringRef value = column.getDataAt(row_num);

|

||||

|

||||

UInt128 key;

|

||||

SipHash hash;

|

||||

hash.update(value.data, value.size);

|

||||

hash.get128(key);

|

||||

const auto key = hash.get128();

|

||||

|

||||

data.set.template insert<const UInt128 &, use_single_level_hash_table>(key);

|

||||

}

|

||||

|

||||

@ -107,9 +107,7 @@ struct UniqVariadicHash<true, false>

|

||||

++column;

|

||||

}

|

||||

|

||||

UInt128 key;

|

||||

hash.get128(key);

|

||||

return key;

|

||||

return hash.get128();

|

||||

}

|

||||

};

|

||||

|

||||

@ -131,9 +129,7 @@ struct UniqVariadicHash<true, true>

|

||||

++column;

|

||||

}

|

||||

|

||||

UInt128 key;

|

||||

hash.get128(key);

|

||||

return key;

|

||||

return hash.get128();

|

||||

}

|

||||

};

|

||||

|

||||

|

||||

@ -20,7 +20,7 @@ struct QueryTreeNodeWithHash

|

||||

{}

|

||||

|

||||

QueryTreeNodePtrType node = nullptr;

|

||||

std::pair<UInt64, UInt64> hash;

|

||||

CityHash_v1_0_2::uint128 hash;

|

||||

};

|

||||

|

||||

template <typename T>

|

||||

@ -55,6 +55,6 @@ struct std::hash<DB::QueryTreeNodeWithHash<T>>

|

||||

{

|

||||

size_t operator()(const DB::QueryTreeNodeWithHash<T> & node_with_hash) const

|

||||

{

|

||||

return node_with_hash.hash.first;

|

||||

return node_with_hash.hash.low64;

|

||||

}

|

||||

};

|

||||

|

||||
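The two hunks above switch the stored hash from `std::pair<UInt64, UInt64>` to a 128-bit struct with `low64` and `high64` fields, and the `std::hash` specialization now returns `low64`. The sketch below shows why returning only the low half is sufficient for an unordered container: bucket placement uses `low64`, while equality still compares both halves. `Hash128Sketch` and `NodeWithHashSketch` are hypothetical stand-ins, and the equality rule here (hash only) is a simplifying assumption rather than the actual `QueryTreeNodeWithHash` comparison.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_set>

// Hypothetical stand-in for a 128-bit hash value with two 64-bit halves.
struct Hash128Sketch
{
    std::uint64_t low64;
    std::uint64_t high64;

    friend bool operator==(const Hash128Sketch & x, const Hash128Sketch & y)
    {
        return x.low64 == y.low64 && x.high64 == y.high64;
    }
};

// Hypothetical stand-in for a node that carries a precomputed hash.
// Assumption: two entries are equal when their full 128-bit hashes match.
struct NodeWithHashSketch
{
    int node_id;
    Hash128Sketch hash;

    friend bool operator==(const NodeWithHashSketch & x, const NodeWithHashSketch & y)
    {
        return x.hash == y.hash;
    }
};

namespace std
{
template <>
struct hash<NodeWithHashSketch>
{
    size_t operator()(const NodeWithHashSketch & node_with_hash) const
    {
        // Only the low half selects the bucket; equality resolves collisions.
        return static_cast<size_t>(node_with_hash.hash.low64);
    }
};
}
```

Two entries that share `low64` but differ in `high64` collide in the same bucket yet remain distinct elements, so truncating to 64 bits affects lookup cost and not correctness.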

@ -229,10 +229,7 @@ IQueryTreeNode::Hash IQueryTreeNode::getTreeHash() const

|

||||

}

|

||||

}

|

||||

|

||||

Hash result;

|

||||

hash_state.get128(result);

|

||||

|

||||

return result;

|

||||

return getSipHash128AsPair(hash_state);

|

||||

}

|

||||

|

||||

QueryTreeNodePtr IQueryTreeNode::clone() const

|

||||

|

||||

@ -106,7 +106,7 @@ public:

|

||||

*/

|

||||

bool isEqual(const IQueryTreeNode & rhs, CompareOptions compare_options = { .compare_aliases = true }) const;

|

||||

|

||||

using Hash = std::pair<UInt64, UInt64>;

|

||||

using Hash = CityHash_v1_0_2::uint128;

|

||||

using HashState = SipHash;

|

||||

|

||||

/** Get tree hash identifying current tree

|

||||

|

||||

@ -2033,7 +2033,7 @@ void QueryAnalyzer::evaluateScalarSubqueryIfNeeded(QueryTreeNodePtr & node, Iden

|

||||

auto & nearest_query_scope_query_node = nearest_query_scope->scope_node->as<QueryNode &>();

|

||||

auto & mutable_context = nearest_query_scope_query_node.getMutableContext();

|

||||

|

||||

auto scalar_query_hash_string = std::to_string(node_with_hash.hash.first) + '_' + std::to_string(node_with_hash.hash.second);

|

||||

auto scalar_query_hash_string = DB::toString(node_with_hash.hash);

|

||||

|

||||

if (mutable_context->hasQueryContext())

|

||||

mutable_context->getQueryContext()->addScalar(scalar_query_hash_string, scalar_block);

|

||||

|

||||

@ -105,6 +105,7 @@ namespace ErrorCodes

|

||||

extern const int LOGICAL_ERROR;

|

||||

extern const int CANNOT_OPEN_FILE;

|

||||

extern const int FILE_ALREADY_EXISTS;

|

||||

extern const int USER_SESSION_LIMIT_EXCEEDED;

|

||||

}

|

||||

|

||||

}

|

||||

@ -846,7 +847,9 @@ void ClientBase::processOrdinaryQuery(const String & query_to_execute, ASTPtr pa

|

||||

visitor.visit(parsed_query);

|

||||

|

||||

/// Get new query after substitutions.

|

||||

query = serializeAST(*parsed_query);

|

||||

if (visitor.getNumberOfReplacedParameters())

|

||||

query = serializeAST(*parsed_query);

|

||||

chassert(!query.empty());

|

||||

}

|

||||

|

||||

if (allow_merge_tree_settings && parsed_query->as<ASTCreateQuery>())

|

||||

@ -1331,7 +1334,9 @@ void ClientBase::processInsertQuery(const String & query_to_execute, ASTPtr pars

|

||||

visitor.visit(parsed_query);

|

||||

|

||||

/// Get new query after substitutions.

|

||||

query = serializeAST(*parsed_query);

|

||||

if (visitor.getNumberOfReplacedParameters())

|

||||

query = serializeAST(*parsed_query);

|

||||

chassert(!query.empty());

|

||||

}

|

||||

|

||||

/// Process the query that requires transferring data blocks to the server.

|

||||

@ -1810,7 +1815,7 @@ void ClientBase::processParsedSingleQuery(const String & full_query, const Strin

|

||||

}

|

||||

if (const auto * use_query = parsed_query->as<ASTUseQuery>())

|

||||

{

|

||||

const String & new_database = use_query->database;

|

||||

const String & new_database = use_query->getDatabase();

|

||||

/// If the client initiates the reconnection, it takes the settings from the config.

|

||||

config().setString("database", new_database);

|

||||

/// If the connection initiates the reconnection, it uses its variable.

|

||||

@ -2408,6 +2413,13 @@ void ClientBase::runInteractive()

|

||||

}

|

||||

}

|

||||

|

||||

if (suggest && suggest->getLastError() == ErrorCodes::USER_SESSION_LIMIT_EXCEEDED)

|

||||

{

|

||||

// If a separate connection loading suggestions failed to open a new session,

|

||||

// use the main session to receive them.

|

||||

suggest->load(*connection, connection_parameters.timeouts, config().getInt("suggestion_limit"));

|

||||

}

|

||||

|

||||

try

|

||||

{

|

||||

if (!processQueryText(input))

|

||||

|

||||

@ -521,8 +521,7 @@ void QueryFuzzer::fuzzCreateQuery(ASTCreateQuery & create)

|

||||

if (create.storage)

|

||||

create.storage->updateTreeHash(sip_hash);

|

||||

|

||||

IAST::Hash hash;

|

||||

sip_hash.get128(hash);

|

||||

const auto hash = getSipHash128AsPair(sip_hash);

|

||||

|

||||

/// Save only tables with unique definition.

|

||||

if (created_tables_hashes.insert(hash).second)

|

||||

|

||||

@ -22,9 +22,11 @@ namespace DB

|

||||

{

|

||||

namespace ErrorCodes

|

||||

{

|

||||

extern const int OK;

|

||||

extern const int LOGICAL_ERROR;

|

||||

extern const int UNKNOWN_PACKET_FROM_SERVER;

|

||||

extern const int DEADLOCK_AVOIDED;

|

||||

extern const int USER_SESSION_LIMIT_EXCEEDED;

|

||||

}

|

||||

|

||||

Suggest::Suggest()

|

||||

@ -121,21 +123,24 @@ void Suggest::load(ContextPtr context, const ConnectionParameters & connection_p

|

||||

}

|

||||

catch (const Exception & e)

|

||||

{

|

||||

last_error = e.code();

|

||||

if (e.code() == ErrorCodes::DEADLOCK_AVOIDED)

|

||||

continue;

|

||||

|

||||

/// Client can successfully connect to the server and

|

||||

/// get ErrorCodes::USER_SESSION_LIMIT_EXCEEDED for suggestion connection.

|

||||

|

||||

/// We should not use std::cerr here, because this method works concurrently with the main thread.

|

||||

/// WriteBufferFromFileDescriptor will write directly to the file descriptor, avoiding data race on std::cerr.

|

||||

|

||||

WriteBufferFromFileDescriptor out(STDERR_FILENO, 4096);

|

||||

out << "Cannot load data for command line suggestions: " << getCurrentExceptionMessage(false, true) << "\n";

|

||||

out.next();

|

||||

else if (e.code() != ErrorCodes::USER_SESSION_LIMIT_EXCEEDED)

|

||||

{

|

||||

/// We should not use std::cerr here, because this method works concurrently with the main thread.

|

||||

/// WriteBufferFromFileDescriptor will write directly to the file descriptor, avoiding data race on std::cerr.

|

||||

///

|

||||

/// USER_SESSION_LIMIT_EXCEEDED is ignored here. The client will try to receive

|

||||

/// suggestions using the main connection later.

|

||||

WriteBufferFromFileDescriptor out(STDERR_FILENO, 4096);

|

||||

out << "Cannot load data for command line suggestions: " << getCurrentExceptionMessage(false, true) << "\n";

|

||||

out.next();

|

||||

}

|

||||

}

|

||||

catch (...)

|

||||

{

|

||||

last_error = getCurrentExceptionCode();

|

||||

WriteBufferFromFileDescriptor out(STDERR_FILENO, 4096);

|

||||

out << "Cannot load data for command line suggestions: " << getCurrentExceptionMessage(false, true) << "\n";

|

||||

out.next();

|

||||

@ -148,6 +153,21 @@ void Suggest::load(ContextPtr context, const ConnectionParameters & connection_p

|

||||

});

|

||||

}

|

||||

|

||||

void Suggest::load(IServerConnection & connection,

|

||||

const ConnectionTimeouts & timeouts,

|

||||

Int32 suggestion_limit)

|

||||

{

|

||||

try

|

||||

{

|

||||

fetch(connection, timeouts, getLoadSuggestionQuery(suggestion_limit, true));

|

||||

}

|

||||

catch (...)

|

||||

{

|

||||

std::cerr << "Suggestions loading exception: " << getCurrentExceptionMessage(false, true) << std::endl;

|

||||

last_error = getCurrentExceptionCode();

|

||||

}

|

||||

}

|

||||

|

||||

void Suggest::fetch(IServerConnection & connection, const ConnectionTimeouts & timeouts, const std::string & query)

|

||||

{

|

||||

connection.sendQuery(

|

||||

@ -176,6 +196,7 @@ void Suggest::fetch(IServerConnection & connection, const ConnectionTimeouts & t

|

||||

return;

|

||||

|

||||

case Protocol::Server::EndOfStream:

|

||||

last_error = ErrorCodes::OK;

|

||||

return;

|

||||

|

||||

default:

|

||||

|

||||

@ -7,6 +7,7 @@

|

||||

#include <Client/LocalConnection.h>

|

||||

#include <Client/LineReader.h>

|

||||

#include <IO/ConnectionTimeouts.h>

|

||||

#include <atomic>

|

||||

#include <thread>

|

||||

|

||||

|

||||

@ -28,9 +29,15 @@ public:

|

||||

template <typename ConnectionType>

|

||||

void load(ContextPtr context, const ConnectionParameters & connection_parameters, Int32 suggestion_limit);

|

||||

|

||||

void load(IServerConnection & connection,

|

||||

const ConnectionTimeouts & timeouts,

|

||||

Int32 suggestion_limit);

|

||||

|

||||

/// Older server versions cannot execute the query loading suggestions.

|

||||

static constexpr int MIN_SERVER_REVISION = DBMS_MIN_PROTOCOL_VERSION_WITH_VIEW_IF_PERMITTED;

|

||||

|

||||

int getLastError() const { return last_error.load(); }

|

||||

|

||||

private:

|

||||

void fetch(IServerConnection & connection, const ConnectionTimeouts & timeouts, const std::string & query);

|

||||

|

||||

@ -38,6 +45,8 @@ private:

|

||||

|

||||

/// Words are fetched asynchronously.

|

||||

std::thread loading_thread;

|

||||

|

||||

std::atomic<int> last_error { -1 };

|

||||

};

|

||||

|

||||

}

|

||||

|

||||
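The `Suggest` changes above record the last error code in a `std::atomic<int>` so that the interactive loop can notice a session-limit failure on the background connection and retry on the main connection. A minimal sketch of that hand-off follows. `SuggestSketch`, its method names, and the numeric error codes are hypothetical and chosen only for illustration; the loaders are plain callables that return an error code rather than real connections.

```cpp
#include <atomic>
#include <functional>

// Illustrative values only; these are not ClickHouse's real error codes.
constexpr int SKETCH_OK = 0;
constexpr int SKETCH_SESSION_LIMIT_EXCEEDED = 1;

// Hypothetical stand-in for the Suggest class in the hunks above.
class SuggestSketch
{
public:
    // Would run on the background loading thread: remember how the attempt ended.
    void loadInBackground(const std::function<int()> & loader)
    {
        last_error.store(loader());
    }

    // Would run on the main thread: retry on the main connection only when the
    // background attempt failed because no extra session could be opened.
    bool loadOnMainConnectionIfNeeded(const std::function<int()> & loader)
    {
        if (last_error.load() != SKETCH_SESSION_LIMIT_EXCEEDED)
            return false;
        last_error.store(loader());
        return true;
    }

    int getLastError() const { return last_error.load(); }

private:
    // -1 means that no attempt has finished yet, as in the diff.
    std::atomic<int> last_error{-1};
};
```

The atomic is what lets the main thread read the outcome without a lock while the loader thread may still be writing it. Resetting the code after a successful retry keeps the fallback from firing again on every prompt.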

@ -524,7 +524,7 @@ void ColumnAggregateFunction::insertDefault()

|

||||

pushBackAndCreateState(data, arena, func.get());

|

||||

}

|

||||

|

||||