mirror of

https://github.com/ClickHouse/ClickHouse.git

synced 2024-11-23 08:02:02 +00:00

Merge branch 'master' into nullable-num-intdiv

commit

d1a8a88ae0

4

.gitmodules

vendored

@ -331,6 +331,10 @@

|

|||||||

[submodule "contrib/liburing"]

|

[submodule "contrib/liburing"]

|

||||||

path = contrib/liburing

|

path = contrib/liburing

|

||||||

url = https://github.com/axboe/liburing

|

url = https://github.com/axboe/liburing

|

||||||

|

[submodule "contrib/libarchive"]

|

||||||

|

path = contrib/libarchive

|

||||||

|

url = https://github.com/libarchive/libarchive.git

|

||||||

|

ignore = dirty

|

||||||

[submodule "contrib/libfiu"]

|

[submodule "contrib/libfiu"]

|

||||||

path = contrib/libfiu

|

path = contrib/libfiu

|

||||||

url = https://github.com/ClickHouse/libfiu.git

|

url = https://github.com/ClickHouse/libfiu.git

|

||||||

|

|||||||

@ -52,7 +52,6 @@

|

|||||||

* Add new setting `disable_url_encoding` that allows to disable decoding/encoding path in uri in URL engine. [#52337](https://github.com/ClickHouse/ClickHouse/pull/52337) ([Kruglov Pavel](https://github.com/Avogar)).

|

* Add new setting `disable_url_encoding` that allows to disable decoding/encoding path in uri in URL engine. [#52337](https://github.com/ClickHouse/ClickHouse/pull/52337) ([Kruglov Pavel](https://github.com/Avogar)).

|

||||||

|

|

||||||

#### Performance Improvement

|

#### Performance Improvement

|

||||||

* Writing parquet files is 10x faster, it's multi-threaded now. Almost the same speed as reading. [#49367](https://github.com/ClickHouse/ClickHouse/pull/49367) ([Michael Kolupaev](https://github.com/al13n321)).

|

|

||||||

* Enable automatic selection of the sparse serialization format by default. It improves performance. The format is supported since version 22.1. After this change, downgrading to versions older than 22.1 might not be possible. You can turn off the usage of the sparse serialization format by providing the `ratio_of_defaults_for_sparse_serialization = 1` setting for your MergeTree tables. [#49631](https://github.com/ClickHouse/ClickHouse/pull/49631) ([Alexey Milovidov](https://github.com/alexey-milovidov)).

|

* Enable automatic selection of the sparse serialization format by default. It improves performance. The format is supported since version 22.1. After this change, downgrading to versions older than 22.1 might not be possible. You can turn off the usage of the sparse serialization format by providing the `ratio_of_defaults_for_sparse_serialization = 1` setting for your MergeTree tables. [#49631](https://github.com/ClickHouse/ClickHouse/pull/49631) ([Alexey Milovidov](https://github.com/alexey-milovidov)).

|

||||||

* Enable `move_all_conditions_to_prewhere` and `enable_multiple_prewhere_read_steps` settings by default. [#46365](https://github.com/ClickHouse/ClickHouse/pull/46365) ([Alexander Gololobov](https://github.com/davenger)).

|

* Enable `move_all_conditions_to_prewhere` and `enable_multiple_prewhere_read_steps` settings by default. [#46365](https://github.com/ClickHouse/ClickHouse/pull/46365) ([Alexander Gololobov](https://github.com/davenger)).

|

||||||

* Improves performance of some queries by tuning allocator. [#46416](https://github.com/ClickHouse/ClickHouse/pull/46416) ([Azat Khuzhin](https://github.com/azat)).

|

* Improves performance of some queries by tuning allocator. [#46416](https://github.com/ClickHouse/ClickHouse/pull/46416) ([Azat Khuzhin](https://github.com/azat)).

|

||||||

@ -114,6 +113,7 @@

|

|||||||

* Now interserver port will be closed only after tables are shut down. [#52498](https://github.com/ClickHouse/ClickHouse/pull/52498) ([alesapin](https://github.com/alesapin)).

|

* Now interserver port will be closed only after tables are shut down. [#52498](https://github.com/ClickHouse/ClickHouse/pull/52498) ([alesapin](https://github.com/alesapin)).

|

||||||

|

|

||||||

#### Experimental Feature

|

#### Experimental Feature

|

||||||

|

* Writing parquet files is 10x faster, it's multi-threaded now. Almost the same speed as reading. [#49367](https://github.com/ClickHouse/ClickHouse/pull/49367) ([Michael Kolupaev](https://github.com/al13n321)). This is controlled by the setting `output_format_parquet_use_custom_encoder` which is disabled by default, because the feature is non-ideal.

|

||||||

* Added support for [PRQL](https://prql-lang.org/) as a query language. [#50686](https://github.com/ClickHouse/ClickHouse/pull/50686) ([János Benjamin Antal](https://github.com/antaljanosbenjamin)).

|

* Added support for [PRQL](https://prql-lang.org/) as a query language. [#50686](https://github.com/ClickHouse/ClickHouse/pull/50686) ([János Benjamin Antal](https://github.com/antaljanosbenjamin)).

|

||||||

* Allow to add disk name for custom disks. Previously custom disks would use an internal generated disk name. Now it will be possible with `disk = disk_<name>(...)` (e.g. disk will have name `name`) . [#51552](https://github.com/ClickHouse/ClickHouse/pull/51552) ([Kseniia Sumarokova](https://github.com/kssenii)). This syntax can be changed in this release.

|

* Allow to add disk name for custom disks. Previously custom disks would use an internal generated disk name. Now it will be possible with `disk = disk_<name>(...)` (e.g. disk will have name `name`) . [#51552](https://github.com/ClickHouse/ClickHouse/pull/51552) ([Kseniia Sumarokova](https://github.com/kssenii)). This syntax can be changed in this release.

|

||||||

* (experimental MaterializedMySQL) Fixed crash when `mysqlxx::Pool::Entry` is used after it was disconnected. [#52063](https://github.com/ClickHouse/ClickHouse/pull/52063) ([Val Doroshchuk](https://github.com/valbok)).

|

* (experimental MaterializedMySQL) Fixed crash when `mysqlxx::Pool::Entry` is used after it was disconnected. [#52063](https://github.com/ClickHouse/ClickHouse/pull/52063) ([Val Doroshchuk](https://github.com/valbok)).

|

||||||

|

|||||||

@ -23,11 +23,8 @@ curl https://clickhouse.com/ | sh

|

|||||||

|

|

||||||

## Upcoming Events

|

## Upcoming Events

|

||||||

|

|

||||||

* [**v23.7 Release Webinar**](https://clickhouse.com/company/events/v23-7-community-release-call?utm_source=github&utm_medium=social&utm_campaign=release-webinar-2023-07) - Jul 27 - 23.7 is rapidly approaching. Original creator, co-founder, and CTO of ClickHouse Alexey Milovidov will walk us through the highlights of the release.

|

* [**v23.8 Community Call**](https://clickhouse.com/company/events/v23-8-community-release-call?utm_source=github&utm_medium=social&utm_campaign=release-webinar-2023-08) - Aug 31 - 23.8 is rapidly approaching. Original creator, co-founder, and CTO of ClickHouse Alexey Milovidov will walk us through the highlights of the release.

|

||||||

* [**ClickHouse Meetup in Boston**](https://www.meetup.com/clickhouse-boston-user-group/events/293913596) - Jul 18

|

* [**ClickHouse & AI - A Meetup in San Francisco**](https://www.meetup.com/clickhouse-silicon-valley-meetup-group/events/294472987) - Aug 8

|

||||||

* [**ClickHouse Meetup in NYC**](https://www.meetup.com/clickhouse-new-york-user-group/events/293913441) - Jul 19

|

|

||||||

* [**ClickHouse Meetup in Toronto**](https://www.meetup.com/clickhouse-toronto-user-group/events/294183127) - Jul 20

|

|

||||||

* [**ClickHouse Meetup in Singapore**](https://www.meetup.com/clickhouse-singapore-meetup-group/events/294428050/) - Jul 27

|

|

||||||

* [**ClickHouse Meetup in Paris**](https://www.meetup.com/clickhouse-france-user-group/events/294283460) - Sep 12

|

* [**ClickHouse Meetup in Paris**](https://www.meetup.com/clickhouse-france-user-group/events/294283460) - Sep 12

|

||||||

|

|

||||||

Also, keep an eye out for upcoming meetups around the world. Somewhere else you want us to be? Please feel free to reach out to tyler <at> clickhouse <dot> com.

|

Also, keep an eye out for upcoming meetups around the world. Somewhere else you want us to be? Please feel free to reach out to tyler <at> clickhouse <dot> com.

|

||||||

|

|||||||

1

contrib/CMakeLists.txt

vendored

@ -92,6 +92,7 @@ add_contrib (google-protobuf-cmake google-protobuf)

|

|||||||

add_contrib (openldap-cmake openldap)

|

add_contrib (openldap-cmake openldap)

|

||||||

add_contrib (grpc-cmake grpc)

|

add_contrib (grpc-cmake grpc)

|

||||||

add_contrib (msgpack-c-cmake msgpack-c)

|

add_contrib (msgpack-c-cmake msgpack-c)

|

||||||

|

add_contrib (libarchive-cmake libarchive)

|

||||||

|

|

||||||

add_contrib (corrosion-cmake corrosion)

|

add_contrib (corrosion-cmake corrosion)

|

||||||

|

|

||||||

|

|||||||

1

contrib/libarchive

vendored

Submodule

@ -0,0 +1 @@

|

|||||||

|

Subproject commit ee45796171324519f0c0bfd012018dd099296336

|

||||||

172

contrib/libarchive-cmake/CMakeLists.txt

Normal file

@ -0,0 +1,172 @@

|

|||||||

|

set (LIBRARY_DIR "${ClickHouse_SOURCE_DIR}/contrib/libarchive")

|

||||||

|

|

||||||

|

set(SRCS

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_acl.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_blake2sp_ref.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_blake2s_ref.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_check_magic.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_cmdline.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_cryptor.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_digest.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_disk_acl_darwin.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_disk_acl_freebsd.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_disk_acl_linux.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_disk_acl_sunos.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_entry.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_entry_copy_bhfi.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_entry_copy_stat.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_entry_link_resolver.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_entry_sparse.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_entry_stat.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_entry_strmode.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_entry_xattr.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_getdate.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_hmac.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_match.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_options.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_pack_dev.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_pathmatch.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_ppmd7.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_ppmd8.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_random.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_rb.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_add_passphrase.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_append_filter.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_data_into_fd.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_disk_entry_from_file.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_disk_posix.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_disk_set_standard_lookup.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_disk_windows.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_extract2.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_extract.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_open_fd.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_open_file.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_open_filename.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_open_memory.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_set_format.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_set_options.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_all.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_by_code.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_bzip2.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_compress.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_grzip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_gzip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_lrzip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_lz4.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_lzop.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_none.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_program.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_rpm.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_uu.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_xz.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_filter_zstd.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_7zip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_all.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_ar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_by_code.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_cab.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_cpio.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_empty.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_iso9660.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_lha.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_mtree.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_rar5.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_rar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_raw.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_tar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_warc.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_xar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_read_support_format_zip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_string.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_string_sprintf.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_util.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_version_details.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_virtual.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_windows.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_b64encode.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_by_name.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_bzip2.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_compress.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_grzip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_gzip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_lrzip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_lz4.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_lzop.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_none.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_program.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_uuencode.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_xz.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_add_filter_zstd.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_disk_posix.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_disk_set_standard_lookup.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_disk_windows.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_open_fd.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_open_file.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_open_filename.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_open_memory.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_7zip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_ar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_by_name.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_cpio_binary.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_cpio.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_cpio_newc.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_cpio_odc.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_filter_by_ext.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_gnutar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_iso9660.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_mtree.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_pax.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_raw.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_shar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_ustar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_v7tar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_warc.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_xar.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_format_zip.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_options.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/archive_write_set_passphrase.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/filter_fork_posix.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/filter_fork_windows.c"

|

||||||

|

"${LIBRARY_DIR}/libarchive/xxhash.c"

|

||||||

|

)

|

||||||

|

|

||||||

|

add_library(_libarchive ${SRCS})

|

||||||

|

target_include_directories(_libarchive PUBLIC

|

||||||

|

${CMAKE_CURRENT_SOURCE_DIR}

|

||||||

|

"${LIBRARY_DIR}/libarchive"

|

||||||

|

)

|

||||||

|

|

||||||

|

target_compile_definitions(_libarchive PUBLIC

|

||||||

|

HAVE_CONFIG_H

|

||||||

|

)

|

||||||

|

|

||||||

|

target_compile_options(_libarchive PRIVATE "-Wno-reserved-macro-identifier")

|

||||||

|

|

||||||

|

if (TARGET ch_contrib::xz)

|

||||||

|

target_compile_definitions(_libarchive PUBLIC HAVE_LZMA_H=1)

|

||||||

|

target_link_libraries(_libarchive PRIVATE ch_contrib::xz)

|

||||||

|

endif()

|

||||||

|

|

||||||

|

if (TARGET ch_contrib::zlib)

|

||||||

|

target_compile_definitions(_libarchive PUBLIC HAVE_ZLIB_H=1)

|

||||||

|

target_link_libraries(_libarchive PRIVATE ch_contrib::zlib)

|

||||||

|

endif()

|

||||||

|

|

||||||

|

if (OS_LINUX)

|

||||||

|

target_compile_definitions(

|

||||||

|

_libarchive PUBLIC

|

||||||

|

MAJOR_IN_SYSMACROS=1

|

||||||

|

HAVE_LINUX_FS_H=1

|

||||||

|

HAVE_STRUCT_STAT_ST_MTIM_TV_NSEC=1

|

||||||

|

HAVE_LINUX_TYPES_H=1

|

||||||

|

HAVE_SYS_STATFS_H=1

|

||||||

|

HAVE_FUTIMESAT=1

|

||||||

|

HAVE_ICONV=1

|

||||||

|

)

|

||||||

|

endif()

|

||||||

|

|

||||||

|

add_library(ch_contrib::libarchive ALIAS _libarchive)

|

||||||

1391

contrib/libarchive-cmake/config.h

Normal file

File diff suppressed because it is too large

@ -32,7 +32,7 @@ RUN arch=${TARGETARCH:-amd64} \

|

|||||||

esac

|

esac

|

||||||

|

|

||||||

ARG REPOSITORY="https://s3.amazonaws.com/clickhouse-builds/22.4/31c367d3cd3aefd316778601ff6565119fe36682/package_release"

|

ARG REPOSITORY="https://s3.amazonaws.com/clickhouse-builds/22.4/31c367d3cd3aefd316778601ff6565119fe36682/package_release"

|

||||||

ARG VERSION="23.7.1.2470"

|

ARG VERSION="23.7.4.5"

|

||||||

ARG PACKAGES="clickhouse-keeper"

|

ARG PACKAGES="clickhouse-keeper"

|

||||||

|

|

||||||

# user/group precreated explicitly with fixed uid/gid on purpose.

|

# user/group precreated explicitly with fixed uid/gid on purpose.

|

||||||

|

|||||||

@ -33,7 +33,7 @@ RUN arch=${TARGETARCH:-amd64} \

|

|||||||

# lts / testing / prestable / etc

|

# lts / testing / prestable / etc

|

||||||

ARG REPO_CHANNEL="stable"

|

ARG REPO_CHANNEL="stable"

|

||||||

ARG REPOSITORY="https://packages.clickhouse.com/tgz/${REPO_CHANNEL}"

|

ARG REPOSITORY="https://packages.clickhouse.com/tgz/${REPO_CHANNEL}"

|

||||||

ARG VERSION="23.7.1.2470"

|

ARG VERSION="23.7.4.5"

|

||||||

ARG PACKAGES="clickhouse-client clickhouse-server clickhouse-common-static"

|

ARG PACKAGES="clickhouse-client clickhouse-server clickhouse-common-static"

|

||||||

|

|

||||||

# user/group precreated explicitly with fixed uid/gid on purpose.

|

# user/group precreated explicitly with fixed uid/gid on purpose.

|

||||||

|

|||||||

@ -23,7 +23,7 @@ RUN sed -i "s|http://archive.ubuntu.com|${apt_archive}|g" /etc/apt/sources.list

|

|||||||

|

|

||||||

ARG REPO_CHANNEL="stable"

|

ARG REPO_CHANNEL="stable"

|

||||||

ARG REPOSITORY="deb [signed-by=/usr/share/keyrings/clickhouse-keyring.gpg] https://packages.clickhouse.com/deb ${REPO_CHANNEL} main"

|

ARG REPOSITORY="deb [signed-by=/usr/share/keyrings/clickhouse-keyring.gpg] https://packages.clickhouse.com/deb ${REPO_CHANNEL} main"

|

||||||

ARG VERSION="23.7.1.2470"

|

ARG VERSION="23.7.4.5"

|

||||||

ARG PACKAGES="clickhouse-client clickhouse-server clickhouse-common-static"

|

ARG PACKAGES="clickhouse-client clickhouse-server clickhouse-common-static"

|

||||||

|

|

||||||

# set non-empty deb_location_url url to create a docker image

|

# set non-empty deb_location_url url to create a docker image

|

||||||

|

|||||||

@ -19,13 +19,13 @@ RUN apt-get update \

|

|||||||

# and MEMORY_LIMIT_EXCEEDED exceptions in Functional tests (total memory limit in Functional tests is ~55.24 GiB).

|

# and MEMORY_LIMIT_EXCEEDED exceptions in Functional tests (total memory limit in Functional tests is ~55.24 GiB).

|

||||||

# TSAN will flush shadow memory when reaching this limit.

|

# TSAN will flush shadow memory when reaching this limit.

|

||||||

# It may cause false-negatives, but it's better than OOM.

|

# It may cause false-negatives, but it's better than OOM.

|

||||||

RUN echo "TSAN_OPTIONS='verbosity=1000 halt_on_error=1 history_size=7 memory_limit_mb=46080 second_deadlock_stack=1'" >> /etc/environment

|

RUN echo "TSAN_OPTIONS='verbosity=1000 halt_on_error=1 abort_on_error=1 history_size=7 memory_limit_mb=46080 second_deadlock_stack=1'" >> /etc/environment

|

||||||

RUN echo "UBSAN_OPTIONS='print_stacktrace=1'" >> /etc/environment

|

RUN echo "UBSAN_OPTIONS='print_stacktrace=1'" >> /etc/environment

|

||||||

RUN echo "MSAN_OPTIONS='abort_on_error=1 poison_in_dtor=1'" >> /etc/environment

|

RUN echo "MSAN_OPTIONS='abort_on_error=1 poison_in_dtor=1'" >> /etc/environment

|

||||||

RUN echo "LSAN_OPTIONS='suppressions=/usr/share/clickhouse-test/config/lsan_suppressions.txt'" >> /etc/environment

|

RUN echo "LSAN_OPTIONS='suppressions=/usr/share/clickhouse-test/config/lsan_suppressions.txt'" >> /etc/environment

|

||||||

# Sanitizer options for current shell (not current, but the one that will be spawned on "docker run")

|

# Sanitizer options for current shell (not current, but the one that will be spawned on "docker run")

|

||||||

# (but w/o verbosity for TSAN, otherwise test.reference will not match)

|

# (but w/o verbosity for TSAN, otherwise test.reference will not match)

|

||||||

ENV TSAN_OPTIONS='halt_on_error=1 history_size=7 memory_limit_mb=46080 second_deadlock_stack=1'

|

ENV TSAN_OPTIONS='halt_on_error=1 abort_on_error=1 history_size=7 memory_limit_mb=46080 second_deadlock_stack=1'

|

||||||

ENV UBSAN_OPTIONS='print_stacktrace=1'

|

ENV UBSAN_OPTIONS='print_stacktrace=1'

|

||||||

ENV MSAN_OPTIONS='abort_on_error=1 poison_in_dtor=1'

|

ENV MSAN_OPTIONS='abort_on_error=1 poison_in_dtor=1'

|

||||||

|

|

||||||

|

|||||||

@ -130,7 +130,7 @@ COPY misc/ /misc/

|

|||||||

|

|

||||||

# Same options as in test/base/Dockerfile

|

# Same options as in test/base/Dockerfile

|

||||||

# (in case you need to override them in tests)

|

# (in case you need to override them in tests)

|

||||||

ENV TSAN_OPTIONS='halt_on_error=1 history_size=7 memory_limit_mb=46080 second_deadlock_stack=1'

|

ENV TSAN_OPTIONS='halt_on_error=1 abort_on_error=1 history_size=7 memory_limit_mb=46080 second_deadlock_stack=1'

|

||||||

ENV UBSAN_OPTIONS='print_stacktrace=1'

|

ENV UBSAN_OPTIONS='print_stacktrace=1'

|

||||||

ENV MSAN_OPTIONS='abort_on_error=1 poison_in_dtor=1'

|

ENV MSAN_OPTIONS='abort_on_error=1 poison_in_dtor=1'

|

||||||

|

|

||||||

|

|||||||

@ -12,3 +12,5 @@ services:

|

|||||||

- type: ${HDFS_FS:-tmpfs}

|

- type: ${HDFS_FS:-tmpfs}

|

||||||

source: ${HDFS_LOGS:-}

|

source: ${HDFS_LOGS:-}

|

||||||

target: /usr/local/hadoop/logs

|

target: /usr/local/hadoop/logs

|

||||||

|

sysctls:

|

||||||

|

net.ipv4.ip_local_port_range: '55000 65535'

|

||||||

|

|||||||

@ -31,6 +31,8 @@ services:

|

|||||||

- kafka_zookeeper

|

- kafka_zookeeper

|

||||||

security_opt:

|

security_opt:

|

||||||

- label:disable

|

- label:disable

|

||||||

|

sysctls:

|

||||||

|

net.ipv4.ip_local_port_range: '55000 65535'

|

||||||

|

|

||||||

schema-registry:

|

schema-registry:

|

||||||

image: confluentinc/cp-schema-registry:5.2.0

|

image: confluentinc/cp-schema-registry:5.2.0

|

||||||

|

|||||||

@ -20,6 +20,8 @@ services:

|

|||||||

depends_on:

|

depends_on:

|

||||||

- hdfskerberos

|

- hdfskerberos

|

||||||

entrypoint: /etc/bootstrap.sh -d

|

entrypoint: /etc/bootstrap.sh -d

|

||||||

|

sysctls:

|

||||||

|

net.ipv4.ip_local_port_range: '55000 65535'

|

||||||

|

|

||||||

hdfskerberos:

|

hdfskerberos:

|

||||||

image: clickhouse/kerberos-kdc:${DOCKER_KERBEROS_KDC_TAG:-latest}

|

image: clickhouse/kerberos-kdc:${DOCKER_KERBEROS_KDC_TAG:-latest}

|

||||||

@ -29,3 +31,5 @@ services:

|

|||||||

- ${KERBERIZED_HDFS_DIR}/../../kerberos_image_config.sh:/config.sh

|

- ${KERBERIZED_HDFS_DIR}/../../kerberos_image_config.sh:/config.sh

|

||||||

- /dev/urandom:/dev/random

|

- /dev/urandom:/dev/random

|

||||||

expose: [88, 749]

|

expose: [88, 749]

|

||||||

|

sysctls:

|

||||||

|

net.ipv4.ip_local_port_range: '55000 65535'

|

||||||

|

|||||||

@ -48,6 +48,8 @@ services:

|

|||||||

- kafka_kerberos

|

- kafka_kerberos

|

||||||

security_opt:

|

security_opt:

|

||||||

- label:disable

|

- label:disable

|

||||||

|

sysctls:

|

||||||

|

net.ipv4.ip_local_port_range: '55000 65535'

|

||||||

|

|

||||||

kafka_kerberos:

|

kafka_kerberos:

|

||||||

image: clickhouse/kerberos-kdc:${DOCKER_KERBEROS_KDC_TAG:-latest}

|

image: clickhouse/kerberos-kdc:${DOCKER_KERBEROS_KDC_TAG:-latest}

|

||||||

|

|||||||

@ -14,7 +14,7 @@ services:

|

|||||||

MINIO_ACCESS_KEY: minio

|

MINIO_ACCESS_KEY: minio

|

||||||

MINIO_SECRET_KEY: minio123

|

MINIO_SECRET_KEY: minio123

|

||||||

MINIO_PROMETHEUS_AUTH_TYPE: public

|

MINIO_PROMETHEUS_AUTH_TYPE: public

|

||||||

command: server --address :9001 --certs-dir /certs /data1-1

|

command: server --console-address 127.0.0.1:19001 --address :9001 --certs-dir /certs /data1-1

|

||||||

depends_on:

|

depends_on:

|

||||||

- proxy1

|

- proxy1

|

||||||

- proxy2

|

- proxy2

|

||||||

|

|||||||

@ -3,7 +3,7 @@

|

|||||||

<default>

|

<default>

|

||||||

<allow_introspection_functions>1</allow_introspection_functions>

|

<allow_introspection_functions>1</allow_introspection_functions>

|

||||||

<log_queries>1</log_queries>

|

<log_queries>1</log_queries>

|

||||||

<metrics_perf_events_enabled>1</metrics_perf_events_enabled>

|

<metrics_perf_events_enabled>0</metrics_perf_events_enabled>

|

||||||

<!--

|

<!--

|

||||||

If a test takes too long by mistake, the entire test task can

|

If a test takes too long by mistake, the entire test task can

|

||||||

time out and the author won't get a proper message. Put some cap

|

time out and the author won't get a proper message. Put some cap

|

||||||

|

|||||||

@ -369,6 +369,7 @@ for query_index in queries_to_run:

|

|||||||

"max_execution_time": args.prewarm_max_query_seconds,

|

"max_execution_time": args.prewarm_max_query_seconds,

|

||||||

"query_profiler_real_time_period_ns": 10000000,

|

"query_profiler_real_time_period_ns": 10000000,

|

||||||

"query_profiler_cpu_time_period_ns": 10000000,

|

"query_profiler_cpu_time_period_ns": 10000000,

|

||||||

|

"metrics_perf_events_enabled": 1,

|

||||||

"memory_profiler_step": "4Mi",

|

"memory_profiler_step": "4Mi",

|

||||||

},

|

},

|

||||||

)

|

)

|

||||||

@ -503,6 +504,7 @@ for query_index in queries_to_run:

|

|||||||

settings={

|

settings={

|

||||||

"query_profiler_real_time_period_ns": 10000000,

|

"query_profiler_real_time_period_ns": 10000000,

|

||||||

"query_profiler_cpu_time_period_ns": 10000000,

|

"query_profiler_cpu_time_period_ns": 10000000,

|

||||||

|

"metrics_perf_events_enabled": 1,

|

||||||

},

|

},

|

||||||

)

|

)

|

||||||

print(

|

print(

|

||||||

|

|||||||

@ -96,5 +96,4 @@ rg -Fa "Fatal" /var/log/clickhouse-server/clickhouse-server.log ||:

|

|||||||

zstd < /var/log/clickhouse-server/clickhouse-server.log > /test_output/clickhouse-server.log.zst &

|

zstd < /var/log/clickhouse-server/clickhouse-server.log > /test_output/clickhouse-server.log.zst &

|

||||||

|

|

||||||

# Compressed (FIXME: remove once only github actions will be left)

|

# Compressed (FIXME: remove once only github actions will be left)

|

||||||

rm /var/log/clickhouse-server/clickhouse-server.log

|

|

||||||

mv /var/log/clickhouse-server/stderr.log /test_output/ ||:

|

mv /var/log/clickhouse-server/stderr.log /test_output/ ||:

|

||||||

|

|||||||

@ -41,6 +41,8 @@ RUN apt-get update -y \

|

|||||||

zstd \

|

zstd \

|

||||||

file \

|

file \

|

||||||

pv \

|

pv \

|

||||||

|

zip \

|

||||||

|

p7zip-full \

|

||||||

&& apt-get clean

|

&& apt-get clean

|

||||||

|

|

||||||

RUN pip3 install numpy scipy pandas Jinja2

|

RUN pip3 install numpy scipy pandas Jinja2

|

||||||

|

|||||||

@ -200,8 +200,8 @@ Templates:

|

|||||||

- [Server Setting](_description_templates/template-server-setting.md)

|

- [Server Setting](_description_templates/template-server-setting.md)

|

||||||

- [Database or Table engine](_description_templates/template-engine.md)

|

- [Database or Table engine](_description_templates/template-engine.md)

|

||||||

- [System table](_description_templates/template-system-table.md)

|

- [System table](_description_templates/template-system-table.md)

|

||||||

- [Data type](_description_templates/data-type.md)

|

- [Data type](_description_templates/template-data-type.md)

|

||||||

- [Statement](_description_templates/statement.md)

|

- [Statement](_description_templates/template-statement.md)

|

||||||

|

|

||||||

|

|

||||||

<a name="how-to-build-docs"/>

|

<a name="how-to-build-docs"/>

|

||||||

|

|||||||

31

docs/changelogs/v23.7.2.25-stable.md

Normal file


@ -0,0 +1,31 @@

|

|||||||

|

---

|

||||||

|

sidebar_position: 1

|

||||||

|

sidebar_label: 2023

|

||||||

|

---

|

||||||

|

|

||||||

|

# 2023 Changelog

|

||||||

|

|

||||||

|

### ClickHouse release v23.7.2.25-stable (8dd1107b032) FIXME as compared to v23.7.1.2470-stable (a70127baecc)

|

||||||

|

|

||||||

|

#### Backward Incompatible Change

|

||||||

|

* Backported in [#52850](https://github.com/ClickHouse/ClickHouse/issues/52850): If a dynamic disk contains a name, it should be specified as `disk = disk(name = 'disk_name', ...)` in disk function arguments. In previous versions it could be specified as `disk = disk_<disk_name>(...)`, which is no longer supported. [#52820](https://github.com/ClickHouse/ClickHouse/pull/52820) ([Kseniia Sumarokova](https://github.com/kssenii)).

|

||||||

|

|

||||||

|

#### Build/Testing/Packaging Improvement

|

||||||

|

* Backported in [#52913](https://github.com/ClickHouse/ClickHouse/issues/52913): Add `clickhouse-keeper-client` symlink to the clickhouse-server package. [#51882](https://github.com/ClickHouse/ClickHouse/pull/51882) ([Mikhail f. Shiryaev](https://github.com/Felixoid)).

|

||||||

|

|

||||||

|

#### Bug Fix (user-visible misbehavior in an official stable release)

|

||||||

|

|

||||||

|

* Fix binary arithmetic for Nullable(IPv4) [#51642](https://github.com/ClickHouse/ClickHouse/pull/51642) ([Yakov Olkhovskiy](https://github.com/yakov-olkhovskiy)).

|

||||||

|

* Support IPv4 and IPv6 as dictionary attributes [#51756](https://github.com/ClickHouse/ClickHouse/pull/51756) ([Yakov Olkhovskiy](https://github.com/yakov-olkhovskiy)).

|

||||||

|

* Init and destroy ares channel on demand. [#52634](https://github.com/ClickHouse/ClickHouse/pull/52634) ([Arthur Passos](https://github.com/arthurpassos)).

|

||||||

|

* Fix crash in function `tuple` with one sparse column argument [#52659](https://github.com/ClickHouse/ClickHouse/pull/52659) ([Anton Popov](https://github.com/CurtizJ)).

|

||||||

|

* Fix data race in Keeper reconfiguration [#52804](https://github.com/ClickHouse/ClickHouse/pull/52804) ([Antonio Andelic](https://github.com/antonio2368)).

|

||||||

|

* clickhouse-keeper: fix implementation of server with poll() [#52833](https://github.com/ClickHouse/ClickHouse/pull/52833) ([Andy Fiddaman](https://github.com/citrus-it)).

|

||||||

|

|

||||||

|

#### NOT FOR CHANGELOG / INSIGNIFICANT

|

||||||

|

|

||||||

|

* Rename setting disable_url_encoding to enable_url_encoding and add a test [#52656](https://github.com/ClickHouse/ClickHouse/pull/52656) ([Kruglov Pavel](https://github.com/Avogar)).

|

||||||

|

* Fix bugs and better test for SYSTEM STOP LISTEN [#52680](https://github.com/ClickHouse/ClickHouse/pull/52680) ([Nikolay Degterinsky](https://github.com/evillique)).

|

||||||

|

* Increase min protocol version for sparse serialization [#52835](https://github.com/ClickHouse/ClickHouse/pull/52835) ([Anton Popov](https://github.com/CurtizJ)).

|

||||||

|

* Docker improvements [#52869](https://github.com/ClickHouse/ClickHouse/pull/52869) ([Mikhail f. Shiryaev](https://github.com/Felixoid)).

|

||||||

|

|

||||||

23

docs/changelogs/v23.7.3.14-stable.md

Normal file


@ -0,0 +1,23 @@

|

|||||||

|

---

|

||||||

|

sidebar_position: 1

|

||||||

|

sidebar_label: 2023

|

||||||

|

---

|

||||||

|

|

||||||

|

# 2023 Changelog

|

||||||

|

|

||||||

|

### ClickHouse release v23.7.3.14-stable (bd9a510550c) FIXME as compared to v23.7.2.25-stable (8dd1107b032)

|

||||||

|

|

||||||

|

#### Build/Testing/Packaging Improvement

|

||||||

|

* Backported in [#53025](https://github.com/ClickHouse/ClickHouse/issues/53025): Packing inline cache into docker images sometimes causes strange special effects. Since we don't use it at all, it's good to go. [#53008](https://github.com/ClickHouse/ClickHouse/pull/53008) ([Mikhail f. Shiryaev](https://github.com/Felixoid)).

|

||||||

|

|

||||||

|

#### Bug Fix (user-visible misbehavior in an official stable release)

|

||||||

|

|

||||||

|

* Fix named collections on cluster 23.7 [#52687](https://github.com/ClickHouse/ClickHouse/pull/52687) ([Al Korgun](https://github.com/alkorgun)).

|

||||||

|

* Fix password leak in show create mysql table [#52962](https://github.com/ClickHouse/ClickHouse/pull/52962) ([Duc Canh Le](https://github.com/canhld94)).

|

||||||

|

* Fix ZstdDeflatingWriteBuffer truncating the output sometimes [#53064](https://github.com/ClickHouse/ClickHouse/pull/53064) ([Michael Kolupaev](https://github.com/al13n321)).

|

||||||

|

|

||||||

|

#### NOT FOR CHANGELOG / INSIGNIFICANT

|

||||||

|

|

||||||

|

* Suspicious DISTINCT crashes from sqlancer [#52636](https://github.com/ClickHouse/ClickHouse/pull/52636) ([Igor Nikonov](https://github.com/devcrafter)).

|

||||||

|

* Fix Parquet stats for Float32 and Float64 [#53067](https://github.com/ClickHouse/ClickHouse/pull/53067) ([Michael Kolupaev](https://github.com/al13n321)).

|

||||||

|

|

||||||

17

docs/changelogs/v23.7.4.5-stable.md

Normal file


@ -0,0 +1,17 @@

|

|||||||

|

---

|

||||||

|

sidebar_position: 1

|

||||||

|

sidebar_label: 2023

|

||||||

|

---

|

||||||

|

|

||||||

|

# 2023 Changelog

|

||||||

|

|

||||||

|

### ClickHouse release v23.7.4.5-stable (bd2fcd44553) FIXME as compared to v23.7.3.14-stable (bd9a510550c)

|

||||||

|

|

||||||

|

#### Bug Fix (user-visible misbehavior in an official stable release)

|

||||||

|

|

||||||

|

* Disable the new parquet encoder [#53130](https://github.com/ClickHouse/ClickHouse/pull/53130) ([Alexey Milovidov](https://github.com/alexey-milovidov)).

|

||||||

|

|

||||||

|

#### NOT FOR CHANGELOG / INSIGNIFICANT

|

||||||

|

|

||||||

|

* Revert changes in `ZstdDeflatingAppendableWriteBuffer` [#53111](https://github.com/ClickHouse/ClickHouse/pull/53111) ([Antonio Andelic](https://github.com/antonio2368)).

|

||||||

|

|

||||||

@ -42,20 +42,20 @@ sudo apt-get install git cmake ccache python3 ninja-build nasm yasm gawk lsb-rel

|

|||||||

|

|

||||||

### Install and Use the Clang compiler

|

### Install and Use the Clang compiler

|

||||||

|

|

||||||

On Ubuntu/Debian you can use LLVM's automatic installation script, see [here](https://apt.llvm.org/).

|

On Ubuntu/Debian, you can use LLVM's automatic installation script; see [here](https://apt.llvm.org/).

|

||||||

|

|

||||||

``` bash

|

``` bash

|

||||||

sudo bash -c "$(wget -O - https://apt.llvm.org/llvm.sh)"

|

sudo bash -c "$(wget -O - https://apt.llvm.org/llvm.sh)"

|

||||||

```

|

```

|

||||||

|

|

||||||

Note: in case of troubles, you can also use this:

|

Note: in case of trouble, you can also use this:

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

sudo apt-get install software-properties-common

|

sudo apt-get install software-properties-common

|

||||||

sudo add-apt-repository -y ppa:ubuntu-toolchain-r/test

|

sudo add-apt-repository -y ppa:ubuntu-toolchain-r/test

|

||||||

```

|

```

|

||||||

|

|

||||||

For other Linux distribution - check the availability of LLVM's [prebuild packages](https://releases.llvm.org/download.html).

|

For other Linux distributions - check the availability of LLVM's [prebuild packages](https://releases.llvm.org/download.html).

|

||||||

|

|

||||||

As of April 2023, clang-16 or higher will work.

|

As of April 2023, clang-16 or higher will work.

|

||||||

GCC as a compiler is not supported.

|

GCC as a compiler is not supported.

|

||||||

@ -92,8 +92,12 @@ cmake -S . -B build

|

|||||||

cmake --build build # or: `cd build; ninja`

|

cmake --build build # or: `cd build; ninja`

|

||||||

```

|

```

|

||||||

|

|

||||||

|

:::tip

|

||||||

|

In case `cmake` isn't able to detect the number of available logical cores, the build will be done by one thread. To overcome this, you can tweak `cmake` to use a specific number of threads with `-j` flag, for example, `cmake --build build -j 16`. Alternatively, you can generate build files with a specific number of jobs in advance to avoid always setting the flag: `cmake -DPARALLEL_COMPILE_JOBS=16 -S . -B build`, where `16` is the desired number of threads.

|

||||||

|

:::

|

||||||

|

|

||||||

To create an executable, run `cmake --build build --target clickhouse` (or: `cd build; ninja clickhouse`).

|

To create an executable, run `cmake --build build --target clickhouse` (or: `cd build; ninja clickhouse`).

|

||||||

This will create executable `build/programs/clickhouse` which can be used with `client` or `server` arguments.

|

This will create an executable `build/programs/clickhouse`, which can be used with `client` or `server` arguments.

|

||||||

|

|

||||||

## Building on Any Linux {#how-to-build-clickhouse-on-any-linux}

|

## Building on Any Linux {#how-to-build-clickhouse-on-any-linux}

|

||||||

|

|

||||||

@ -107,7 +111,7 @@ The build requires the following components:

|

|||||||

- Yasm

|

- Yasm

|

||||||

- Gawk

|

- Gawk

|

||||||

|

|

||||||

If all the components are installed, you may build in the same way as the steps above.

|

If all the components are installed, you may build it in the same way as the steps above.

|

||||||

|

|

||||||

Example for OpenSUSE Tumbleweed:

|

Example for OpenSUSE Tumbleweed:

|

||||||

|

|

||||||

@ -123,7 +127,7 @@ Example for Fedora Rawhide:

|

|||||||

|

|

||||||

``` bash

|

``` bash

|

||||||

sudo yum update

|

sudo yum update

|

||||||

sudo yum --nogpg install git cmake make clang python3 ccache nasm yasm gawk

|

sudo yum --nogpg install git cmake make clang python3 ccache lld nasm yasm gawk

|

||||||

git clone --recursive https://github.com/ClickHouse/ClickHouse.git

|

git clone --recursive https://github.com/ClickHouse/ClickHouse.git

|

||||||

mkdir build

|

mkdir build

|

||||||

cmake -S . -B build

|

cmake -S . -B build

|

||||||

|

|||||||

@ -141,6 +141,10 @@ Runs [stateful functional tests](tests.md#functional-tests). Treat them in the s

|

|||||||

Runs [integration tests](tests.md#integration-tests).

|

Runs [integration tests](tests.md#integration-tests).

|

||||||

|

|

||||||

|

|

||||||

|

## Bugfix validate check

|

||||||

|

Checks that there is either a new test (functional or integration) or some changed tests that fail with the binary built on the master branch. This check is triggered when a pull request has the "pr-bugfix" label.

|

||||||

|

|

||||||

|

|

||||||

## Stress Test

|

## Stress Test

|

||||||

Runs stateless functional tests concurrently from several clients to detect

|

Runs stateless functional tests concurrently from several clients to detect

|

||||||

concurrency-related errors. If it fails:

|

concurrency-related errors. If it fails:

|

||||||

|

|||||||

@ -22,7 +22,7 @@ CREATE TABLE deltalake

|

|||||||

- `url` — Bucket url with path to the existing Delta Lake table.

|

- `url` — Bucket url with path to the existing Delta Lake table.

|

||||||

- `aws_access_key_id`, `aws_secret_access_key` - Long-term credentials for the [AWS](https://aws.amazon.com/) account user. You can use these to authenticate your requests. Parameter is optional. If credentials are not specified, they are used from the configuration file.

|

- `aws_access_key_id`, `aws_secret_access_key` - Long-term credentials for the [AWS](https://aws.amazon.com/) account user. You can use these to authenticate your requests. Parameter is optional. If credentials are not specified, they are used from the configuration file.

|

||||||

|

|

||||||

Engine parameters can be specified using [Named Collections](../../../operations/named-collections.md)

|

Engine parameters can be specified using [Named Collections](/docs/en/operations/named-collections.md).

|

||||||

|

|

||||||

**Example**

|

**Example**

|

||||||

|

|

||||||

|

|||||||

@ -22,7 +22,7 @@ CREATE TABLE hudi_table

|

|||||||

- `url` — Bucket url with the path to an existing Hudi table.

|

- `url` — Bucket url with the path to an existing Hudi table.

|

||||||

- `aws_access_key_id`, `aws_secret_access_key` - Long-term credentials for the [AWS](https://aws.amazon.com/) account user. You can use these to authenticate your requests. Parameter is optional. If credentials are not specified, they are used from the configuration file.

|

- `aws_access_key_id`, `aws_secret_access_key` - Long-term credentials for the [AWS](https://aws.amazon.com/) account user. You can use these to authenticate your requests. Parameter is optional. If credentials are not specified, they are used from the configuration file.

|

||||||

|

|

||||||

Engine parameters can be specified using [Named Collections](../../../operations/named-collections.md)

|

Engine parameters can be specified using [Named Collections](/docs/en/operations/named-collections.md).

|

||||||

|

|

||||||

**Example**

|

**Example**

|

||||||

|

|

||||||

|

|||||||

@ -237,7 +237,7 @@ The following settings can be set before query execution or placed into configur

|

|||||||

- `s3_max_get_rps` — Maximum GET requests per second rate before throttling. Default value is `0` (unlimited).

|

- `s3_max_get_rps` — Maximum GET requests per second rate before throttling. Default value is `0` (unlimited).

|

||||||

- `s3_max_get_burst` — Max number of requests that can be issued simultaneously before hitting request per second limit. By default (`0` value) equals to `s3_max_get_rps`.

|

- `s3_max_get_burst` — Max number of requests that can be issued simultaneously before hitting request per second limit. By default (`0` value) equals to `s3_max_get_rps`.

|

||||||

- `s3_upload_part_size_multiply_factor` - Multiply `s3_min_upload_part_size` by this factor each time `s3_multiply_parts_count_threshold` parts were uploaded from a single write to S3. Default values is `2`.

|

- `s3_upload_part_size_multiply_factor` - Multiply `s3_min_upload_part_size` by this factor each time `s3_multiply_parts_count_threshold` parts were uploaded from a single write to S3. Default values is `2`.

|

||||||

- `s3_upload_part_size_multiply_parts_count_threshold` - Each time this number of parts was uploaded to S3 `s3_min_upload_part_size multiplied` by `s3_upload_part_size_multiply_factor`. Default value us `500`.

|

- `s3_upload_part_size_multiply_parts_count_threshold` - Each time this number of parts was uploaded to S3, `s3_min_upload_part_size` is multiplied by `s3_upload_part_size_multiply_factor`. Default value is `500`.

|

||||||

- `s3_max_inflight_parts_for_one_file` - Limits the number of put requests that can be run concurrently for one object. Its number should be limited. The value `0` means unlimited. Default value is `20`. Each in-flight part has a buffer with size `s3_min_upload_part_size` for the first `s3_upload_part_size_multiply_factor` parts and more when file is big enough, see `upload_part_size_multiply_factor`. With default settings one uploaded file consumes not more than `320Mb` for a file which is less than `8G`. The consumption is greater for a larger file.

|

- `s3_max_inflight_parts_for_one_file` - Limits the number of put requests that can be run concurrently for one object. Its number should be limited. The value `0` means unlimited. Default value is `20`. Each in-flight part has a buffer with size `s3_min_upload_part_size` for the first `s3_upload_part_size_multiply_factor` parts and more when file is big enough, see `upload_part_size_multiply_factor`. With default settings one uploaded file consumes not more than `320Mb` for a file which is less than `8G`. The consumption is greater for a larger file.
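
The part-size growth described by the settings above can be sketched as follows. This is a simplified model, not ClickHouse's implementation: the function name `upload_part_sizes` is hypothetical, the 16 MiB minimum part size is an assumption inferred from the `320Mb` figure above (20 in-flight parts of 16 MiB each), and the exact part at which the size is multiplied may differ in the real code.

```python
MIB = 1024 * 1024


def upload_part_sizes(total_parts,
                      min_part_size=16 * MIB,      # assumed s3_min_upload_part_size
                      multiply_factor=2,           # s3_upload_part_size_multiply_factor
                      parts_count_threshold=500):  # s3_upload_part_size_multiply_parts_count_threshold
    """Return the size of each part of a single upload, in upload order."""
    sizes = []
    size = min_part_size
    for index in range(total_parts):
        # Each time `parts_count_threshold` parts have been uploaded,
        # multiply the part size by `multiply_factor`.
        if index > 0 and index % parts_count_threshold == 0:
            size *= multiply_factor
        sizes.append(size)
    return sizes


def inflight_buffer_bound(part_size, max_inflight_parts=20):
    """Upper bound on buffer memory while all in-flight parts have `part_size`."""
    return part_size * max_inflight_parts


if __name__ == "__main__":
    sizes = upload_part_sizes(1001)
    # Part sizes (in MiB) of the 1st, 500th, 501st and 1001st part.
    print([sizes[i] // MIB for i in (0, 499, 500, 1000)])
    # Buffer bound (in MiB) while parts still have the minimum size.
    print(inflight_buffer_bound(sizes[0]) // MIB)
```

Under these assumptions, the first 500 parts cover roughly 8 GB of data, which matches the note that memory consumption grows only for larger files.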

|

||||||

|

|

||||||

Security consideration: if malicious user can specify arbitrary S3 URLs, `s3_max_redirects` must be set to zero to avoid [SSRF](https://en.wikipedia.org/wiki/Server-side_request_forgery) attacks; or alternatively, `remote_host_filter` must be specified in server configuration.

|

Security consideration: if malicious user can specify arbitrary S3 URLs, `s3_max_redirects` must be set to zero to avoid [SSRF](https://en.wikipedia.org/wiki/Server-side_request_forgery) attacks; or alternatively, `remote_host_filter` must be specified in server configuration.

|

||||||

|

|||||||

@ -193,6 +193,19 @@ index creation, `L2Distance` is used as default. Parameter `NumTrees` is the num

|

|||||||

specified: 100). Higher values of `NumTree` mean more accurate search results but slower index creation / query times (approximately

|

specified: 100). Higher values of `NumTree` mean more accurate search results but slower index creation / query times (approximately

|

||||||

linearly) as well as larger index sizes.

|

linearly) as well as larger index sizes.

|

||||||

|

|

||||||

|

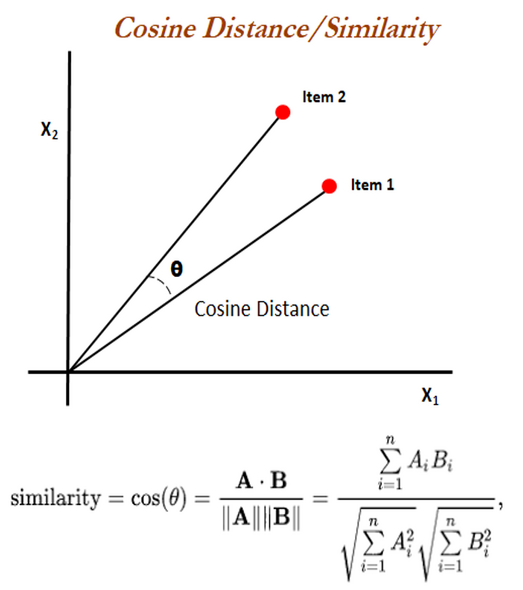

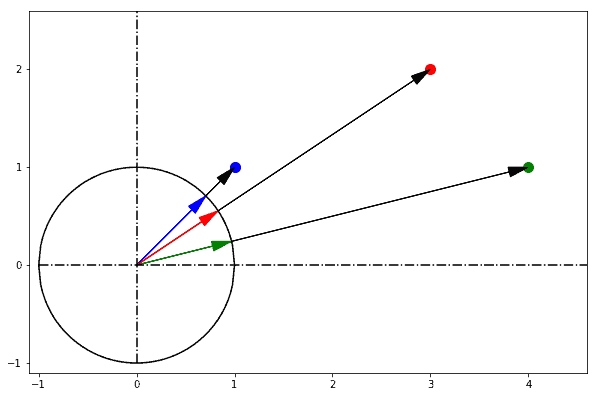

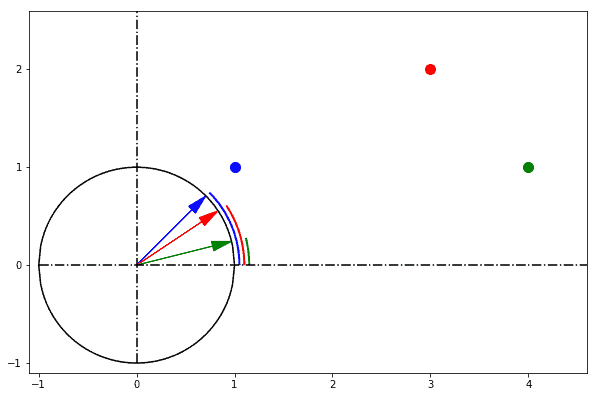

`L2Distance` is also called Euclidean distance, the Euclidean distance between two points in Euclidean space is the length of a line segment between the two points.

|

||||||

|

For example, for two points P(p1, p2) and Q(q1, q2), the distance is d(P, Q) = sqrt((q1 - p1)^2 + (q2 - p2)^2).

|

||||||

|

|

||||||

|

|

||||||

|

`cosineDistance` is derived from cosine similarity, a measure of similarity between two non-zero vectors defined in an inner product space. Cosine similarity is the cosine of the angle between the vectors; that is, it is the dot product of the vectors divided by the product of their lengths.

|

||||||

|

|

||||||

|

|

||||||

|

The Euclidean distance corresponds to the L2-norm of a difference between vectors. The cosine similarity is proportional to the dot product of two vectors and inversely proportional to the product of their magnitudes.

|

||||||

|

|

||||||

|

In one sentence: cosine similarity cares only about the angle between the vectors, not about the "distance" between them as we normally think of it.
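
The difference between the two measures can be illustrated with a short, self-contained sketch. It is plain Python rather than ClickHouse code, the helper names are hypothetical, and it assumes the common definition of cosine distance as one minus cosine similarity.

```python
import math


def l2_distance(p, q):
    """Euclidean (L2) distance: length of the line segment between p and q."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def cosine_similarity(p, q):
    """Dot product of p and q divided by the product of their lengths."""
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    return dot / (norm_p * norm_q)


def cosine_distance(p, q):
    # Assumption: cosine distance is defined as 1 - cosine similarity.
    return 1.0 - cosine_similarity(p, q)


if __name__ == "__main__":
    same_direction = ((1.0, 0.0), (10.0, 0.0))  # far apart, same angle
    orthogonal = ((1.0, 0.0), (0.0, 1.0))       # close together, 90 degrees apart
    for p, q in (same_direction, orthogonal):
        print(p, q, l2_distance(p, q), cosine_distance(p, q))
```

The first pair is far apart in the Euclidean sense but has zero cosine distance, because both vectors point in the same direction. The second pair is closer in the Euclidean sense but has a larger cosine distance, because the vectors are orthogonal.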

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

:::note

|

:::note

|

||||||

Indexes over columns of type `Array` will generally work faster than indexes on `Tuple` columns. All arrays **must** have same length. Use

|

Indexes over columns of type `Array` will generally work faster than indexes on `Tuple` columns. All arrays **must** have same length. Use

|

||||||

[CONSTRAINT](/docs/en/sql-reference/statements/create/table.md#constraints) to avoid errors. For example, `CONSTRAINT constraint_name_1

|

[CONSTRAINT](/docs/en/sql-reference/statements/create/table.md#constraints) to avoid errors. For example, `CONSTRAINT constraint_name_1

|

||||||

|

|||||||

@ -13,7 +13,7 @@ A recommended alternative to the Buffer Table Engine is enabling [asynchronous i

|

|||||||

:::

|

:::

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

Buffer(database, table, num_layers, min_time, max_time, min_rows, max_rows, min_bytes, max_bytes)

|

Buffer(database, table, num_layers, min_time, max_time, min_rows, max_rows, min_bytes, max_bytes [,flush_time [,flush_rows [,flush_bytes]]])

|

||||||

```

|

```

|

||||||

|

|

||||||

### Engine parameters:

|

### Engine parameters:

|

||||||

|

|||||||

@ -2131,7 +2131,6 @@ To exchange data with Hadoop, you can use [HDFS table engine](/docs/en/engines/t

|

|||||||

|

|

||||||

- [output_format_parquet_row_group_size](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_row_group_size) - row group size in rows while data output. Default value - `1000000`.

|

- [output_format_parquet_row_group_size](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_row_group_size) - row group size in rows while data output. Default value - `1000000`.

|

||||||

- [output_format_parquet_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_string_as_string) - use Parquet String type instead of Binary for String columns. Default value - `false`.

|

- [output_format_parquet_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_string_as_string) - use Parquet String type instead of Binary for String columns. Default value - `false`.

|

||||||

- [input_format_parquet_import_nested](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_import_nested) - allow inserting array of structs into [Nested](/docs/en/sql-reference/data-types/nested-data-structures/index.md) table in Parquet input format. Default value - `false`.

|

|

||||||

- [input_format_parquet_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_case_insensitive_column_matching) - ignore case when matching Parquet columns with ClickHouse columns. Default value - `false`.

|

- [input_format_parquet_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_case_insensitive_column_matching) - ignore case when matching Parquet columns with ClickHouse columns. Default value - `false`.

|

||||||

- [input_format_parquet_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_allow_missing_columns) - allow missing columns while reading Parquet data. Default value - `false`.

|

- [input_format_parquet_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_allow_missing_columns) - allow missing columns while reading Parquet data. Default value - `false`.

|

||||||

- [input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Parquet format. Default value - `false`.

|

- [input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Parquet format. Default value - `false`.

|

||||||

@ -2336,7 +2335,6 @@ $ clickhouse-client --query="SELECT * FROM {some_table} FORMAT Arrow" > {filenam

|

|||||||

|

|

||||||

- [output_format_arrow_low_cardinality_as_dictionary](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_low_cardinality_as_dictionary) - enable output ClickHouse LowCardinality type as Dictionary Arrow type. Default value - `false`.

|

- [output_format_arrow_low_cardinality_as_dictionary](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_low_cardinality_as_dictionary) - enable output ClickHouse LowCardinality type as Dictionary Arrow type. Default value - `false`.

|

||||||

- [output_format_arrow_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_string_as_string) - use Arrow String type instead of Binary for String columns. Default value - `false`.

|

- [output_format_arrow_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_string_as_string) - use Arrow String type instead of Binary for String columns. Default value - `false`.

|

||||||

- [input_format_arrow_import_nested](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_import_nested) - allow inserting array of structs into Nested table in Arrow input format. Default value - `false`.

|

|

||||||

- [input_format_arrow_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_case_insensitive_column_matching) - ignore case when matching Arrow columns with ClickHouse columns. Default value - `false`.

|

- [input_format_arrow_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_case_insensitive_column_matching) - ignore case when matching Arrow columns with ClickHouse columns. Default value - `false`.

|

||||||

- [input_format_arrow_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_allow_missing_columns) - allow missing columns while reading Arrow data. Default value - `false`.

|

- [input_format_arrow_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_allow_missing_columns) - allow missing columns while reading Arrow data. Default value - `false`.

|

||||||

- [input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Arrow format. Default value - `false`.

|

- [input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Arrow format. Default value - `false`.

|

||||||

@ -2402,7 +2400,6 @@ $ clickhouse-client --query="SELECT * FROM {some_table} FORMAT ORC" > {filename.

|

|||||||

|

|

||||||

- [output_format_arrow_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_string_as_string) - use Arrow String type instead of Binary for String columns. Default value - `false`.

|

- [output_format_arrow_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_arrow_string_as_string) - use Arrow String type instead of Binary for String columns. Default value - `false`.

|

||||||

- [output_format_orc_compression_method](/docs/en/operations/settings/settings-formats.md/#output_format_orc_compression_method) - compression method used in output ORC format. Default value - `none`.

|

- [output_format_orc_compression_method](/docs/en/operations/settings/settings-formats.md/#output_format_orc_compression_method) - compression method used in output ORC format. Default value - `none`.

|

||||||

- [input_format_arrow_import_nested](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_import_nested) - allow inserting array of structs into Nested table in Arrow input format. Default value - `false`.

|

|

||||||

- [input_format_arrow_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_case_insensitive_column_matching) - ignore case when matching Arrow columns with ClickHouse columns. Default value - `false`.

|

- [input_format_arrow_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_case_insensitive_column_matching) - ignore case when matching Arrow columns with ClickHouse columns. Default value - `false`.

|

||||||

- [input_format_arrow_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_allow_missing_columns) - allow missing columns while reading Arrow data. Default value - `false`.

|

- [input_format_arrow_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_allow_missing_columns) - allow missing columns while reading Arrow data. Default value - `false`.

|

||||||

- [input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Arrow format. Default value - `false`.

|

- [input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_arrow_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Arrow format. Default value - `false`.

|

||||||

|

|||||||

@ -84,6 +84,7 @@ The BACKUP and RESTORE statements take a list of DATABASE and TABLE names, a des

|

|||||||

- `password` for the file on disk

|

- `password` for the file on disk

|

||||||

- `base_backup`: the destination of the previous backup of this source. For example, `Disk('backups', '1.zip')`

|

- `base_backup`: the destination of the previous backup of this source. For example, `Disk('backups', '1.zip')`

|

||||||

- `structure_only`: if enabled, allows to only backup or restore the CREATE statements without the data of tables

|

- `structure_only`: if enabled, allows to only backup or restore the CREATE statements without the data of tables

|

||||||

|

- `storage_policy`: storage policy for the tables being restored. See [Using Multiple Block Devices for Data Storage](../engines/table-engines/mergetree-family/mergetree.md#table_engine-mergetree-multiple-volumes). This setting is only applicable to the `RESTORE` command. The specified storage policy applies only to tables with an engine from the `MergeTree` family.

|

||||||

- `s3_storage_class`: the storage class used for S3 backup. For example, `STANDARD`

|

- `s3_storage_class`: the storage class used for S3 backup. For example, `STANDARD`

|

||||||

|

|

||||||

### Usage examples

|

### Usage examples

|

||||||

|

|||||||

@ -7,6 +7,10 @@ pagination_next: en/operations/settings/settings

|

|||||||

|

|

||||||

# Settings Overview

|

# Settings Overview

|

||||||

|

|

||||||

|

:::note

|

||||||

|

XML-based Settings Profiles and [configuration files](https://clickhouse.com/docs/en/operations/configuration-files) are currently not supported for ClickHouse Cloud. To specify settings for your ClickHouse Cloud service, you must use [SQL-driven Settings Profiles](https://clickhouse.com/docs/en/operations/access-rights#settings-profiles-management).

|

||||||

|

:::

|

||||||

|

|

||||||

There are two main groups of ClickHouse settings:

|

There are two main groups of ClickHouse settings:

|

||||||

|

|

||||||

- Global server settings

|

- Global server settings

|

||||||

|

|||||||

@ -298,7 +298,7 @@ Default value: `THROW`.

|

|||||||

- [JOIN clause](../../sql-reference/statements/select/join.md#select-join)

|

- [JOIN clause](../../sql-reference/statements/select/join.md#select-join)

|

||||||

- [Join table engine](../../engines/table-engines/special/join.md)

|

- [Join table engine](../../engines/table-engines/special/join.md)

|

||||||

|

|

||||||

## max_partitions_per_insert_block {#max-partitions-per-insert-block}

|

## max_partitions_per_insert_block {#settings-max_partitions_per_insert_block}

|

||||||

|

|

||||||

Limits the maximum number of partitions in a single inserted block.

|

Limits the maximum number of partitions in a single inserted block.

|

||||||

|

|

||||||

@ -309,9 +309,18 @@ Default value: 100.

|

|||||||

|

|

||||||

**Details**

|

**Details**

|

||||||

|

|

||||||

When inserting data, ClickHouse calculates the number of partitions in the inserted block. If the number of partitions is more than `max_partitions_per_insert_block`, ClickHouse throws an exception with the following text:

|

When inserting data, ClickHouse calculates the number of partitions in the inserted block. If the number of partitions is more than `max_partitions_per_insert_block`, ClickHouse either logs a warning or throws an exception based on `throw_on_max_partitions_per_insert_block`. Exceptions have the following text:

|

||||||

|

|

||||||

> “Too many partitions for single INSERT block (more than” + toString(max_parts) + “). The limit is controlled by ‘max_partitions_per_insert_block’ setting. A large number of partitions is a common misconception. It will lead to severe negative performance impact, including slow server startup, slow INSERT queries and slow SELECT queries. Recommended total number of partitions for a table is under 1000..10000. Please note, that partitioning is not intended to speed up SELECT queries (ORDER BY key is sufficient to make range queries fast). Partitions are intended for data manipulation (DROP PARTITION, etc).”

|

> “Too many partitions for a single INSERT block (`partitions_count` partitions, limit is ” + toString(max_partitions) + “). The limit is controlled by the ‘max_partitions_per_insert_block’ setting. A large number of partitions is a common misconception. It will lead to severe negative performance impact, including slow server startup, slow INSERT queries and slow SELECT queries. Recommended total number of partitions for a table is under 1000..10000. Please note, that partitioning is not intended to speed up SELECT queries (ORDER BY key is sufficient to make range queries fast). Partitions are intended for data manipulation (DROP PARTITION, etc).”
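
The decision described above can be sketched as follows. This is an illustrative model only: the function name, the use of `ValueError`, and the shortened message are assumptions, not ClickHouse internals.

```python
import logging


def check_partition_count(partitions_count, max_partitions, throw_on_exceed):
    """Return True if the block is within the limit, False if a warning was logged.

    Raises ValueError when the limit is exceeded and `throw_on_exceed` is set,
    mirroring `throw_on_max_partitions_per_insert_block`.
    """
    if partitions_count <= max_partitions:
        return True
    # Shortened, illustrative version of the message quoted above.
    message = (
        f"Too many partitions for a single INSERT block "
        f"({partitions_count} partitions, limit is {max_partitions})."
    )
    if throw_on_exceed:
        raise ValueError(message)
    logging.warning(message)
    return False


if __name__ == "__main__":
    print(check_partition_count(100, 100, throw_on_exceed=True))   # within the limit
    print(check_partition_count(101, 100, throw_on_exceed=False))  # logs a warning
```

With `throw_on_exceed=True`, the second call would raise instead of logging.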

|

||||||

|

|

||||||

|

## throw_on_max_partitions_per_insert_block {#settings-throw_on_max_partition_per_insert_block}

|

||||||

|

|

||||||

|

Allows you to control behaviour when `max_partitions_per_insert_block` is reached.

|

||||||

|

|

||||||

|

- `true` - When an insert block reaches `max_partitions_per_insert_block`, an exception is raised.

|

||||||

|