mirror of

https://github.com/ClickHouse/ClickHouse.git

synced 2024-11-10 01:25:21 +00:00

Merge remote-tracking branch 'upstream/master' into impove-filecache-removal

commit

dadf84f37b

@ -122,6 +122,23 @@ EOL

|

|||||||

<core_path>$PWD</core_path>

|

<core_path>$PWD</core_path>

|

||||||

</clickhouse>

|

</clickhouse>

|

||||||

EOL

|

EOL

|

||||||

|

|

||||||

|

# Setup a cluster for logs export to ClickHouse Cloud

|

||||||

|

# Note: these variables are provided to the Docker run command by the Python script in tests/ci

|

||||||

|

if [ -n "${CLICKHOUSE_CI_LOGS_HOST}" ]

|

||||||

|

then

|

||||||

|

echo "

|

||||||

|

remote_servers:

|

||||||

|

system_logs_export:

|

||||||

|

shard:

|

||||||

|

replica:

|

||||||

|

secure: 1

|

||||||

|

user: ci

|

||||||

|

host: '${CLICKHOUSE_CI_LOGS_HOST}'

|

||||||

|

port: 9440

|

||||||

|

password: '${CLICKHOUSE_CI_LOGS_PASSWORD}'

|

||||||

|

" > db/config.d/system_logs_export.yaml

|

||||||

|

fi

|

||||||

}

|

}

|

||||||

|

|

||||||

function filter_exists_and_template

|

function filter_exists_and_template

|

||||||

@ -223,7 +240,22 @@ quit

|

|||||||

done

|

done

|

||||||

clickhouse-client --query "select 1" # This checks that the server is responding

|

clickhouse-client --query "select 1" # This checks that the server is responding

|

||||||

kill -0 $server_pid # This checks that it is our server that is started and not some other one

|

kill -0 $server_pid # This checks that it is our server that is started and not some other one

|

||||||

echo Server started and responded

|

echo 'Server started and responded'

|

||||||

|

|

||||||

|

# Initialize export of system logs to ClickHouse Cloud

|

||||||

|

if [ -n "${CLICKHOUSE_CI_LOGS_HOST}" ]

|

||||||

|

then

|

||||||

|

export EXTRA_COLUMNS_EXPRESSION="$PR_TO_TEST AS pull_request_number, '$SHA_TO_TEST' AS commit_sha, '$CHECK_START_TIME' AS check_start_time, '$CHECK_NAME' AS check_name, '$INSTANCE_TYPE' AS instance_type"

|

||||||

|

# TODO: Check if the password will appear in the logs.

|

||||||

|

export CONNECTION_PARAMETERS="--secure --user ci --host ${CLICKHOUSE_CI_LOGS_HOST} --password ${CLICKHOUSE_CI_LOGS_PASSWORD}"

|

||||||

|

|

||||||

|

/setup_export_logs.sh

|

||||||

|

|

||||||

|

# Unset variables after use

|

||||||

|

export CONNECTION_PARAMETERS=''

|

||||||

|

export CLICKHOUSE_CI_LOGS_HOST=''

|

||||||

|

export CLICKHOUSE_CI_LOGS_PASSWORD=''

|

||||||

|

fi

|

||||||

|

|

||||||

# SC2012: Use find instead of ls to better handle non-alphanumeric filenames. They are all alphanumeric.

|

# SC2012: Use find instead of ls to better handle non-alphanumeric filenames. They are all alphanumeric.

|

||||||

# SC2046: Quote this to prevent word splitting. Actually I need word splitting.

|

# SC2046: Quote this to prevent word splitting. Actually I need word splitting.

|

||||||

|

|||||||

@ -36,6 +36,9 @@ then

|

|||||||

elif [ "${ARCH}" = "riscv64" ]

|

elif [ "${ARCH}" = "riscv64" ]

|

||||||

then

|

then

|

||||||

DIR="riscv64"

|

DIR="riscv64"

|

||||||

|

elif [ "${ARCH}" = "s390x" ]

|

||||||

|

then

|

||||||

|

DIR="s390x"

|

||||||

fi

|

fi

|

||||||

elif [ "${OS}" = "FreeBSD" ]

|

elif [ "${OS}" = "FreeBSD" ]

|

||||||

then

|

then

|

||||||

|

|||||||

@ -1,4 +1,4 @@

|

|||||||

# Approximate Nearest Neighbor Search Indexes [experimental] {#table_engines-ANNIndex}

|

# Approximate Nearest Neighbor Search Indexes [experimental]

|

||||||

|

|

||||||

Nearest neighborhood search is the problem of finding the M closest points for a given point in an N-dimensional vector space. The most

|

Nearest neighborhood search is the problem of finding the M closest points for a given point in an N-dimensional vector space. The most

|

||||||

straightforward approach to solve this problem is a brute force search where the distance between all points in the vector space and the

|

straightforward approach to solve this problem is a brute force search where the distance between all points in the vector space and the

|

||||||

@ -17,7 +17,7 @@ In terms of SQL, the nearest neighborhood problem can be expressed as follows:

|

|||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

SELECT *

|

SELECT *

|

||||||

FROM table

|

FROM table_with_ann_index

|

||||||

ORDER BY Distance(vectors, Point)

|

ORDER BY Distance(vectors, Point)

|

||||||

LIMIT N

|

LIMIT N

|

||||||

```

|

```

|

||||||

@ -32,7 +32,7 @@ An alternative formulation of the nearest neighborhood search problem looks as f

|

|||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

SELECT *

|

SELECT *

|

||||||

FROM table

|

FROM table_with_ann_index

|

||||||

WHERE Distance(vectors, Point) < MaxDistance

|

WHERE Distance(vectors, Point) < MaxDistance

|

||||||

LIMIT N

|

LIMIT N

|

||||||

```

|

```

|

||||||

@ -45,12 +45,12 @@ With brute force search, both queries are expensive (linear in the number of poi

|

|||||||

`Point` must be computed. To speed this process up, Approximate Nearest Neighbor Search Indexes (ANN indexes) store a compact representation

|

`Point` must be computed. To speed this process up, Approximate Nearest Neighbor Search Indexes (ANN indexes) store a compact representation

|

||||||

of the search space (using clustering, search trees, etc.) which allows to compute an approximate answer much quicker (in sub-linear time).

|

of the search space (using clustering, search trees, etc.) which allows to compute an approximate answer much quicker (in sub-linear time).
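
To make the cost of the brute-force baseline concrete, the two query shapes above can be sketched in plain Python. This is only an illustration, not how ClickHouse evaluates these queries: the function names, the `(id, vector)` row layout, and the sample data are assumptions made for the example, and the L2 distance stands in for `Distance`. Both functions scan every row, which is the linear cost an ANN index is meant to avoid.

```python
import heapq
import math


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_n(rows, point, n):
    """Rough analogue of: ORDER BY Distance(vectors, Point) LIMIT N.

    Computes the distance to every row, so the cost is linear in the
    number of rows.
    """
    return heapq.nsmallest(n, rows, key=lambda row: l2_distance(row[1], point))


def within_distance(rows, point, max_distance, n):
    """Rough analogue of: WHERE Distance(vectors, Point) < MaxDistance LIMIT N."""
    result = []
    for row in rows:
        if l2_distance(row[1], point) < max_distance:
            result.append(row)
            if len(result) == n:
                break
    return result


# Hypothetical sample data: (id, vector) pairs.
rows = [
    (1, [0.0, 0.0]),
    (2, [3.0, 4.0]),
    (3, [1.0, 1.0]),
    (4, [10.0, 10.0]),
]
```

With the sample rows, `nearest_n(rows, [0.0, 0.0], 2)` returns the rows with ids 1 and 3, and `within_distance(rows, [0.0, 0.0], 6.0, 10)` returns the rows with ids 1, 2 and 3.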

|

||||||

|

|

||||||

# Creating and Using ANN Indexes

|

# Creating and Using ANN Indexes {#creating_using_ann_indexes}

|

||||||

|

|

||||||

Syntax to create an ANN index over an [Array](../../../sql-reference/data-types/array.md) column:

|

Syntax to create an ANN index over an [Array](../../../sql-reference/data-types/array.md) column:

|

||||||

|

|

||||||

```sql

|

```sql

|

||||||

CREATE TABLE table

|

CREATE TABLE table_with_ann_index

|

||||||

(

|

(

|

||||||

`id` Int64,

|

`id` Int64,

|

||||||

`vectors` Array(Float32),

|

`vectors` Array(Float32),

|

||||||

@ -63,7 +63,7 @@ ORDER BY id;

|

|||||||

Syntax to create an ANN index over a [Tuple](../../../sql-reference/data-types/tuple.md) column:

|

Syntax to create an ANN index over a [Tuple](../../../sql-reference/data-types/tuple.md) column:

|

||||||

|

|

||||||

```sql

|

```sql

|

||||||

CREATE TABLE table

|

CREATE TABLE table_with_ann_index

|

||||||

(

|

(

|

||||||

`id` Int64,

|

`id` Int64,

|

||||||

`vectors` Tuple(Float32[, Float32[, ...]]),

|

`vectors` Tuple(Float32[, Float32[, ...]]),

|

||||||

@ -83,7 +83,7 @@ ANN indexes support two types of queries:

|

|||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

SELECT *

|

SELECT *

|

||||||

FROM table

|

FROM table_with_ann_index

|

||||||

[WHERE ...]

|

[WHERE ...]

|

||||||

ORDER BY Distance(vectors, Point)

|

ORDER BY Distance(vectors, Point)

|

||||||

LIMIT N

|

LIMIT N

|

||||||

@ -93,7 +93,7 @@ ANN indexes support two types of queries:

|

|||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

SELECT *

|

SELECT *

|

||||||

FROM table

|

FROM table_with_ann_index

|

||||||

WHERE Distance(vectors, Point) < MaxDistance

|

WHERE Distance(vectors, Point) < MaxDistance

|

||||||

LIMIT N

|

LIMIT N

|

||||||

```

|

```

|

||||||

@ -103,7 +103,7 @@ To avoid writing out large vectors, you can use [query

|

|||||||

parameters](/docs/en/interfaces/cli.md#queries-with-parameters-cli-queries-with-parameters), e.g.

|

parameters](/docs/en/interfaces/cli.md#queries-with-parameters-cli-queries-with-parameters), e.g.

|

||||||

|

|

||||||

```bash

|

```bash

|

||||||

clickhouse-client --param_vec='hello' --query="SELECT * FROM table WHERE L2Distance(vectors, {vec: Array(Float32)}) < 1.0"

|

clickhouse-client --param_vec='hello' --query="SELECT * FROM table_with_ann_index WHERE L2Distance(vectors, {vec: Array(Float32)}) < 1.0"

|

||||||

```

|

```

|

||||||

:::

|

:::

|

||||||

|

|

||||||

@ -138,7 +138,7 @@ back to a smaller `GRANULARITY` values only in case of problems like excessive m

|

|||||||

was specified for ANN indexes, the default value is 100 million.

|

was specified for ANN indexes, the default value is 100 million.

|

||||||

|

|

||||||

|

|

||||||

# Available ANN Indexes

|

# Available ANN Indexes {#available_ann_indexes}

|

||||||

|

|

||||||

- [Annoy](/docs/en/engines/table-engines/mergetree-family/annindexes.md#annoy-annoy)

|

- [Annoy](/docs/en/engines/table-engines/mergetree-family/annindexes.md#annoy-annoy)

|

||||||

|

|

||||||

@ -165,7 +165,7 @@ space in random linear surfaces (lines in 2D, planes in 3D etc.).

|

|||||||

Syntax to create an Annoy index over an [Array](../../../sql-reference/data-types/array.md) column:

|

Syntax to create an Annoy index over an [Array](../../../sql-reference/data-types/array.md) column:

|

||||||

|

|

||||||

```sql

|

```sql

|

||||||

CREATE TABLE table

|

CREATE TABLE table_with_annoy_index

|

||||||

(

|

(

|

||||||

id Int64,

|

id Int64,

|

||||||

vectors Array(Float32),

|

vectors Array(Float32),

|

||||||

@ -178,7 +178,7 @@ ORDER BY id;

|

|||||||

Syntax to create an ANN index over a [Tuple](../../../sql-reference/data-types/tuple.md) column:

|

Syntax to create an ANN index over a [Tuple](../../../sql-reference/data-types/tuple.md) column:

|

||||||

|

|

||||||

```sql

|

```sql

|

||||||

CREATE TABLE table

|

CREATE TABLE table_with_annoy_index

|

||||||

(

|

(

|

||||||

id Int64,

|

id Int64,

|

||||||

vectors Tuple(Float32[, Float32[, ...]]),

|

vectors Tuple(Float32[, Float32[, ...]]),

|

||||||

@ -188,23 +188,17 @@ ENGINE = MergeTree

|

|||||||

ORDER BY id;

|

ORDER BY id;

|

||||||

```

|

```

|

||||||

|

|

||||||

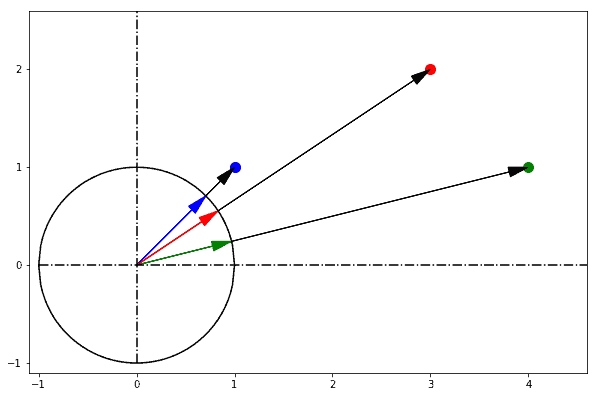

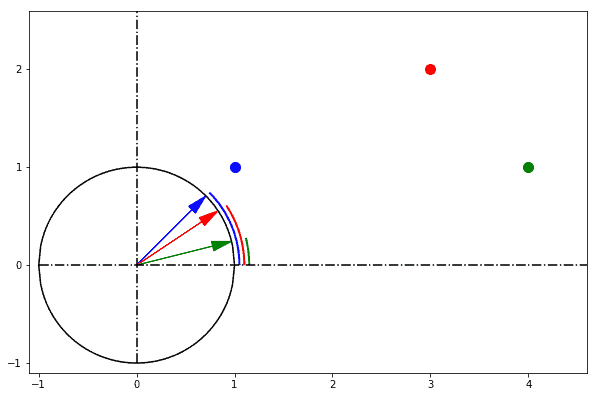

Annoy currently supports `L2Distance` and `cosineDistance` as distance function `Distance`. If no distance function was specified during

|

Annoy currently supports two distance functions:

|

||||||

index creation, `L2Distance` is used as default. Parameter `NumTrees` is the number of trees which the algorithm creates (default if not

|

- `L2Distance`, also called Euclidean distance, is the length of a line segment between two points in Euclidean space

|

||||||

specified: 100). Higher values of `NumTree` mean more accurate search results but slower index creation / query times (approximately

|

([Wikipedia](https://en.wikipedia.org/wiki/Euclidean_distance)).

|

||||||

linearly) as well as larger index sizes.

|

- `cosineDistance`, also called cosine similarity, is the cosine of the angle between two (non-zero) vectors

|

||||||

|

([Wikipedia](https://en.wikipedia.org/wiki/Cosine_similarity)).

|

||||||

|

|

||||||

`L2Distance` is also called Euclidean distance, the Euclidean distance between two points in Euclidean space is the length of a line segment between the two points.

|

For normalized data, `L2Distance` is usually a better choice, otherwise `cosineDistance` is recommended to compensate for scale. If no

|

||||||

For example: If we have point P(p1,p2), Q(q1,q2), their distance will be d(p,q)

|

distance function was specified during index creation, `L2Distance` is used as default.
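
As a rough illustration of how the two distance functions differ, the sketch below computes both in plain Python. The function names are chosen for the example and are not ClickHouse APIs. It assumes that a cosine distance is one minus the cosine similarity, so identical directions give 0; treat that convention as an assumption of this sketch rather than something stated in the text above.

```python
import math


def l2_distance(p, q):
    """Length of the line segment between p and q (Euclidean distance)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def cosine_similarity(p, q):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    return dot / (norm_p * norm_q)


def cosine_distance(p, q):
    """Assumed convention: 1 - cosine similarity (0 means same direction)."""
    return 1.0 - cosine_similarity(p, q)
```

For `p = [1.0, 0.0]` and `q = [2.0, 0.0]`, the vectors point in the same direction but differ in length: the L2 distance is 1.0 while the cosine distance is 0. This is the sense in which the cosine measure depends only on the angle between the vectors and is insensitive to scale.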

|

||||||

|

|

||||||

|

|

||||||

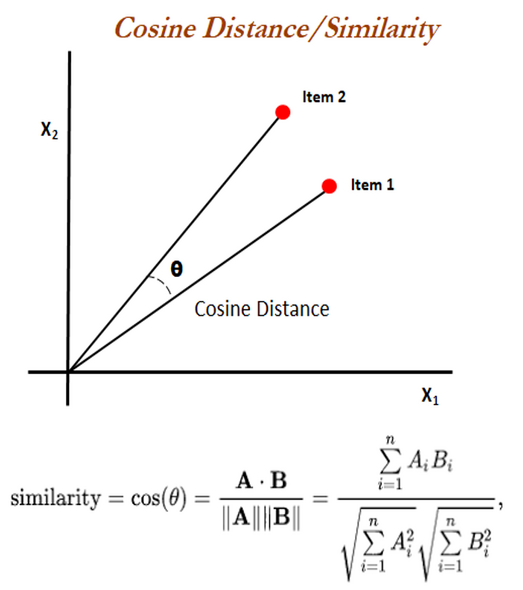

`cosineDistance` also called cosine similarity is a measure of similarity between two non-zero vectors defined in an inner product space. Cosine similarity is the cosine of the angle between the vectors; that is, it is the dot product of the vectors divided by the product of their lengths.

|

Parameter `NumTrees` is the number of trees which the algorithm creates (default if not specified: 100). Higher values of `NumTree` mean

|

||||||

|

more accurate search results but slower index creation / query times (approximately linearly) as well as larger index sizes.

|

||||||

|

|

||||||

The Euclidean distance corresponds to the L2-norm of a difference between vectors. The cosine similarity is proportional to the dot product of two vectors and inversely proportional to the product of their magnitudes.

|

|

||||||

|

|

||||||

In one sentence: cosine similarity care only about the angle between them, but do not care about the "distance" we normally think.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

:::note

|

:::note

|

||||||

Indexes over columns of type `Array` will generally work faster than indexes on `Tuple` columns. All arrays **must** have same length. Use

|

Indexes over columns of type `Array` will generally work faster than indexes on `Tuple` columns. All arrays **must** have same length. Use

|

||||||

|

|||||||

@ -11,82 +11,83 @@ results of a `SELECT`, and to perform `INSERT`s into a file-backed table.

|

|||||||

The supported formats are:

|

The supported formats are:

|

||||||

|

|

||||||

| Format | Input | Output |

|

| Format | Input | Output |

|

||||||

|-------------------------------------------------------------------------------------------|------|--------|

|

|-------------------------------------------------------------------------------------------|------|-------|

|

||||||

| [TabSeparated](#tabseparated) | ✔ | ✔ |

|

| [TabSeparated](#tabseparated) | ✔ | ✔ |

|

||||||

| [TabSeparatedRaw](#tabseparatedraw) | ✔ | ✔ |

|

| [TabSeparatedRaw](#tabseparatedraw) | ✔ | ✔ |

|

||||||

| [TabSeparatedWithNames](#tabseparatedwithnames) | ✔ | ✔ |

|

| [TabSeparatedWithNames](#tabseparatedwithnames) | ✔ | ✔ |

|

||||||

| [TabSeparatedWithNamesAndTypes](#tabseparatedwithnamesandtypes) | ✔ | ✔ |

|

| [TabSeparatedWithNamesAndTypes](#tabseparatedwithnamesandtypes) | ✔ | ✔ |

|

||||||

| [TabSeparatedRawWithNames](#tabseparatedrawwithnames) | ✔ | ✔ |

|

| [TabSeparatedRawWithNames](#tabseparatedrawwithnames) | ✔ | ✔ |

|

||||||

| [TabSeparatedRawWithNamesAndTypes](#tabseparatedrawwithnamesandtypes) | ✔ | ✔ |

|

| [TabSeparatedRawWithNamesAndTypes](#tabseparatedrawwithnamesandtypes) | ✔ | ✔ |

|

||||||

| [Template](#format-template) | ✔ | ✔ |

|

| [Template](#format-template) | ✔ | ✔ |

|

||||||

| [TemplateIgnoreSpaces](#templateignorespaces) | ✔ | ✗ |

|

| [TemplateIgnoreSpaces](#templateignorespaces) | ✔ | ✗ |

|

||||||

| [CSV](#csv) | ✔ | ✔ |

|

| [CSV](#csv) | ✔ | ✔ |

|

||||||

| [CSVWithNames](#csvwithnames) | ✔ | ✔ |

|

| [CSVWithNames](#csvwithnames) | ✔ | ✔ |

|

||||||

| [CSVWithNamesAndTypes](#csvwithnamesandtypes) | ✔ | ✔ |

|

| [CSVWithNamesAndTypes](#csvwithnamesandtypes) | ✔ | ✔ |

|

||||||

| [CustomSeparated](#format-customseparated) | ✔ | ✔ |

|

| [CustomSeparated](#format-customseparated) | ✔ | ✔ |

|

||||||

| [CustomSeparatedWithNames](#customseparatedwithnames) | ✔ | ✔ |

|

| [CustomSeparatedWithNames](#customseparatedwithnames) | ✔ | ✔ |

|

||||||

| [CustomSeparatedWithNamesAndTypes](#customseparatedwithnamesandtypes) | ✔ | ✔ |

|

| [CustomSeparatedWithNamesAndTypes](#customseparatedwithnamesandtypes) | ✔ | ✔ |

|

||||||

| [SQLInsert](#sqlinsert) | ✗ | ✔ |

|

| [SQLInsert](#sqlinsert) | ✗ | ✔ |

|

||||||

| [Values](#data-format-values) | ✔ | ✔ |

|

| [Values](#data-format-values) | ✔ | ✔ |

|

||||||

| [Vertical](#vertical) | ✗ | ✔ |

|

| [Vertical](#vertical) | ✗ | ✔ |

|

||||||

| [JSON](#json) | ✔ | ✔ |

|

| [JSON](#json) | ✔ | ✔ |

|

||||||

| [JSONAsString](#jsonasstring) | ✔ | ✗ |

|

| [JSONAsString](#jsonasstring) | ✔ | ✗ |

|

||||||

| [JSONStrings](#jsonstrings) | ✔ | ✔ |

|

| [JSONStrings](#jsonstrings) | ✔ | ✔ |

|

||||||

| [JSONColumns](#jsoncolumns) | ✔ | ✔ |

|

| [JSONColumns](#jsoncolumns) | ✔ | ✔ |

|

||||||

| [JSONColumnsWithMetadata](#jsoncolumnsmonoblock)) | ✔ | ✔ |

|

| [JSONColumnsWithMetadata](#jsoncolumnsmonoblock)) | ✔ | ✔ |

|

||||||

| [JSONCompact](#jsoncompact) | ✔ | ✔ |

|

| [JSONCompact](#jsoncompact) | ✔ | ✔ |

|

||||||

| [JSONCompactStrings](#jsoncompactstrings) | ✗ | ✔ |

|

| [JSONCompactStrings](#jsoncompactstrings) | ✗ | ✔ |

|

||||||

| [JSONCompactColumns](#jsoncompactcolumns) | ✔ | ✔ |

|

| [JSONCompactColumns](#jsoncompactcolumns) | ✔ | ✔ |

|

||||||

| [JSONEachRow](#jsoneachrow) | ✔ | ✔ |

|

| [JSONEachRow](#jsoneachrow) | ✔ | ✔ |

|

||||||

| [PrettyJSONEachRow](#prettyjsoneachrow) | ✗ | ✔ |

|

| [PrettyJSONEachRow](#prettyjsoneachrow) | ✗ | ✔ |

|

||||||

| [JSONEachRowWithProgress](#jsoneachrowwithprogress) | ✗ | ✔ |

|

| [JSONEachRowWithProgress](#jsoneachrowwithprogress) | ✗ | ✔ |

|

||||||

| [JSONStringsEachRow](#jsonstringseachrow) | ✔ | ✔ |

|

| [JSONStringsEachRow](#jsonstringseachrow) | ✔ | ✔ |

|

||||||

| [JSONStringsEachRowWithProgress](#jsonstringseachrowwithprogress) | ✗ | ✔ |

|

| [JSONStringsEachRowWithProgress](#jsonstringseachrowwithprogress) | ✗ | ✔ |

|

||||||

| [JSONCompactEachRow](#jsoncompacteachrow) | ✔ | ✔ |

|

| [JSONCompactEachRow](#jsoncompacteachrow) | ✔ | ✔ |

|

||||||

| [JSONCompactEachRowWithNames](#jsoncompacteachrowwithnames) | ✔ | ✔ |

|

| [JSONCompactEachRowWithNames](#jsoncompacteachrowwithnames) | ✔ | ✔ |

|

||||||

| [JSONCompactEachRowWithNamesAndTypes](#jsoncompacteachrowwithnamesandtypes) | ✔ | ✔ |

|

| [JSONCompactEachRowWithNamesAndTypes](#jsoncompacteachrowwithnamesandtypes) | ✔ | ✔ |

|

||||||

| [JSONCompactStringsEachRow](#jsoncompactstringseachrow) | ✔ | ✔ |

|

| [JSONCompactStringsEachRow](#jsoncompactstringseachrow) | ✔ | ✔ |

|

||||||

| [JSONCompactStringsEachRowWithNames](#jsoncompactstringseachrowwithnames) | ✔ | ✔ |

|

| [JSONCompactStringsEachRowWithNames](#jsoncompactstringseachrowwithnames) | ✔ | ✔ |

|

||||||

| [JSONCompactStringsEachRowWithNamesAndTypes](#jsoncompactstringseachrowwithnamesandtypes) | ✔ | ✔ |

|

| [JSONCompactStringsEachRowWithNamesAndTypes](#jsoncompactstringseachrowwithnamesandtypes) | ✔ | ✔ |

|

||||||

| [JSONObjectEachRow](#jsonobjecteachrow) | ✔ | ✔ |

|

| [JSONObjectEachRow](#jsonobjecteachrow) | ✔ | ✔ |

|

||||||

| [BSONEachRow](#bsoneachrow) | ✔ | ✔ |

|

| [BSONEachRow](#bsoneachrow) | ✔ | ✔ |

|

||||||

| [TSKV](#tskv) | ✔ | ✔ |

|

| [TSKV](#tskv) | ✔ | ✔ |

|

||||||

| [Pretty](#pretty) | ✗ | ✔ |

|

| [Pretty](#pretty) | ✗ | ✔ |

|

||||||

| [PrettyNoEscapes](#prettynoescapes) | ✗ | ✔ |

|

| [PrettyNoEscapes](#prettynoescapes) | ✗ | ✔ |

|

||||||

| [PrettyMonoBlock](#prettymonoblock) | ✗ | ✔ |

|

| [PrettyMonoBlock](#prettymonoblock) | ✗ | ✔ |

|

||||||

| [PrettyNoEscapesMonoBlock](#prettynoescapesmonoblock) | ✗ | ✔ |

|

| [PrettyNoEscapesMonoBlock](#prettynoescapesmonoblock) | ✗ | ✔ |

|

||||||

| [PrettyCompact](#prettycompact) | ✗ | ✔ |

|

| [PrettyCompact](#prettycompact) | ✗ | ✔ |

|

||||||

| [PrettyCompactNoEscapes](#prettycompactnoescapes) | ✗ | ✔ |

|

| [PrettyCompactNoEscapes](#prettycompactnoescapes) | ✗ | ✔ |

|

||||||

| [PrettyCompactMonoBlock](#prettycompactmonoblock) | ✗ | ✔ |

|

| [PrettyCompactMonoBlock](#prettycompactmonoblock) | ✗ | ✔ |

|

||||||

| [PrettyCompactNoEscapesMonoBlock](#prettycompactnoescapesmonoblock) | ✗ | ✔ |

|

| [PrettyCompactNoEscapesMonoBlock](#prettycompactnoescapesmonoblock) | ✗ | ✔ |

|

||||||

| [PrettySpace](#prettyspace) | ✗ | ✔ |

|

| [PrettySpace](#prettyspace) | ✗ | ✔ |

|

||||||

| [PrettySpaceNoEscapes](#prettyspacenoescapes) | ✗ | ✔ |

|

| [PrettySpaceNoEscapes](#prettyspacenoescapes) | ✗ | ✔ |

|

||||||

| [PrettySpaceMonoBlock](#prettyspacemonoblock) | ✗ | ✔ |

|

| [PrettySpaceMonoBlock](#prettyspacemonoblock) | ✗ | ✔ |

|

||||||

| [PrettySpaceNoEscapesMonoBlock](#prettyspacenoescapesmonoblock) | ✗ | ✔ |

|

| [PrettySpaceNoEscapesMonoBlock](#prettyspacenoescapesmonoblock) | ✗ | ✔ |

|

||||||

| [Prometheus](#prometheus) | ✗ | ✔ |

|

| [Prometheus](#prometheus) | ✗ | ✔ |

|

||||||

| [Protobuf](#protobuf) | ✔ | ✔ |

|

| [Protobuf](#protobuf) | ✔ | ✔ |

|

||||||

| [ProtobufSingle](#protobufsingle) | ✔ | ✔ |

|

| [ProtobufSingle](#protobufsingle) | ✔ | ✔ |

|

||||||

| [Avro](#data-format-avro) | ✔ | ✔ |

|

| [Avro](#data-format-avro) | ✔ | ✔ |

|

||||||

| [AvroConfluent](#data-format-avro-confluent) | ✔ | ✗ |

|

| [AvroConfluent](#data-format-avro-confluent) | ✔ | ✗ |

|

||||||

| [Parquet](#data-format-parquet) | ✔ | ✔ |

|

| [Parquet](#data-format-parquet) | ✔ | ✔ |

|

||||||

| [ParquetMetadata](#data-format-parquet-metadata) | ✔ | ✗ |

|

| [ParquetMetadata](#data-format-parquet-metadata) | ✔ | ✗ |

|

||||||

| [Arrow](#data-format-arrow) | ✔ | ✔ |

|

| [Arrow](#data-format-arrow) | ✔ | ✔ |

|

||||||

| [ArrowStream](#data-format-arrow-stream) | ✔ | ✔ |

|

| [ArrowStream](#data-format-arrow-stream) | ✔ | ✔ |

|

||||||

| [ORC](#data-format-orc) | ✔ | ✔ |

|

| [ORC](#data-format-orc) | ✔ | ✔ |

|

||||||

| [RowBinary](#rowbinary) | ✔ | ✔ |

|

| [One](#data-format-one) | ✔ | ✗ |

|

||||||

| [RowBinaryWithNames](#rowbinarywithnamesandtypes) | ✔ | ✔ |

|

| [RowBinary](#rowbinary) | ✔ | ✔ |

|

||||||

| [RowBinaryWithNamesAndTypes](#rowbinarywithnamesandtypes) | ✔ | ✔ |

|

| [RowBinaryWithNames](#rowbinarywithnamesandtypes) | ✔ | ✔ |

|

||||||

| [RowBinaryWithDefaults](#rowbinarywithdefaults) | ✔ | ✔ |

|

| [RowBinaryWithNamesAndTypes](#rowbinarywithnamesandtypes) | ✔ | ✔ |

|

||||||

| [Native](#native) | ✔ | ✔ |

|

| [RowBinaryWithDefaults](#rowbinarywithdefaults) | ✔ | ✔ |

|

||||||

| [Null](#null) | ✗ | ✔ |

|

| [Native](#native) | ✔ | ✔ |

|

||||||

| [XML](#xml) | ✗ | ✔ |

|

| [Null](#null) | ✗ | ✔ |

|

||||||

| [CapnProto](#capnproto) | ✔ | ✔ |

|

| [XML](#xml) | ✗ | ✔ |

|

||||||

| [LineAsString](#lineasstring) | ✔ | ✔ |

|

| [CapnProto](#capnproto) | ✔ | ✔ |

|

||||||

| [Regexp](#data-format-regexp) | ✔ | ✗ |

|

| [LineAsString](#lineasstring) | ✔ | ✔ |

|

||||||

| [RawBLOB](#rawblob) | ✔ | ✔ |

|

| [Regexp](#data-format-regexp) | ✔ | ✗ |

|

||||||

| [MsgPack](#msgpack) | ✔ | ✔ |

|

| [RawBLOB](#rawblob) | ✔ | ✔ |

|

||||||

| [MySQLDump](#mysqldump) | ✔ | ✗ |

|

| [MsgPack](#msgpack) | ✔ | ✔ |

|

||||||

| [Markdown](#markdown) | ✗ | ✔ |

|

| [MySQLDump](#mysqldump) | ✔ | ✗ |

|

||||||

|

| [Markdown](#markdown) | ✗ | ✔ |

|

||||||

|

|

||||||

|

|

||||||

You can control some format processing parameters with the ClickHouse settings. For more information read the [Settings](/docs/en/operations/settings/settings-formats.md) section.

|

You can control some format processing parameters with the ClickHouse settings. For more information read the [Settings](/docs/en/operations/settings/settings-formats.md) section.

|

||||||

@ -2131,6 +2132,7 @@ To exchange data with Hadoop, you can use [HDFS table engine](/docs/en/engines/t

|

|||||||

|

|

||||||

- [output_format_parquet_row_group_size](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_row_group_size) - row group size in rows while data output. Default value - `1000000`.

|

- [output_format_parquet_row_group_size](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_row_group_size) - row group size in rows while data output. Default value - `1000000`.

|

||||||

- [output_format_parquet_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_string_as_string) - use Parquet String type instead of Binary for String columns. Default value - `false`.

|

- [output_format_parquet_string_as_string](/docs/en/operations/settings/settings-formats.md/#output_format_parquet_string_as_string) - use Parquet String type instead of Binary for String columns. Default value - `false`.

|

||||||

|

- [input_format_parquet_import_nested](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_import_nested) - allow inserting array of structs into [Nested](/docs/en/sql-reference/data-types/nested-data-structures/index.md) table in Parquet input format. Default value - `false`.

|

||||||

- [input_format_parquet_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_case_insensitive_column_matching) - ignore case when matching Parquet columns with ClickHouse columns. Default value - `false`.

|

- [input_format_parquet_case_insensitive_column_matching](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_case_insensitive_column_matching) - ignore case when matching Parquet columns with ClickHouse columns. Default value - `false`.

|

||||||

- [input_format_parquet_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_allow_missing_columns) - allow missing columns while reading Parquet data. Default value - `false`.

|

- [input_format_parquet_allow_missing_columns](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_allow_missing_columns) - allow missing columns while reading Parquet data. Default value - `false`.

|

||||||

- [input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Parquet format. Default value - `false`.

|

- [input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference](/docs/en/operations/settings/settings-formats.md/#input_format_parquet_skip_columns_with_unsupported_types_in_schema_inference) - allow skipping columns with unsupported types while schema inference for Parquet format. Default value - `false`.

|

||||||

@ -2407,6 +2409,34 @@ $ clickhouse-client --query="SELECT * FROM {some_table} FORMAT ORC" > {filename.

|

|||||||

|

|

||||||

To exchange data with Hadoop, you can use [HDFS table engine](/docs/en/engines/table-engines/integrations/hdfs.md).

|

To exchange data with Hadoop, you can use [HDFS table engine](/docs/en/engines/table-engines/integrations/hdfs.md).

|

||||||

|

|

||||||

|

## One {#data-format-one}

|

||||||

|

|

||||||

|

Special input format that doesn't read any data from file and returns only one row with column of type `UInt8`, name `dummy` and value `0` (like `system.one` table).

|

||||||

|

Can be used with virtual columns `_file/_path` to list all files without reading actual data.

|

||||||

|

|

||||||

|

Example:

|

||||||

|

|

||||||

|

Query:

|

||||||

|

```sql

|

||||||

|

SELECT _file FROM file('path/to/files/data*', One);

|

||||||

|

```

|

||||||

|

|

||||||

|

Result:

|

||||||

|

```text

|

||||||

|

┌─_file────┐

|

||||||

|

│ data.csv │

|

||||||

|

└──────────┘

|

||||||

|

┌─_file──────┐

|

||||||

|

│ data.jsonl │

|

||||||

|

└────────────┘

|

||||||

|

┌─_file────┐

|

||||||

|

│ data.tsv │

|

||||||

|

└──────────┘

|

||||||

|

┌─_file────────┐

|

||||||

|

│ data.parquet │

|

||||||

|

└──────────────┘

|

||||||

|

```

|

||||||

|

|

||||||

## LineAsString {#lineasstring}

|

## LineAsString {#lineasstring}

|

||||||

|

|

||||||

In this format, every line of input data is interpreted as a single string value. This format can only be parsed for table with a single field of type [String](/docs/en/sql-reference/data-types/string.md). The remaining columns must be set to [DEFAULT](/docs/en/sql-reference/statements/create/table.md/#default) or [MATERIALIZED](/docs/en/sql-reference/statements/create/table.md/#materialized), or omitted.

|

In this format, every line of input data is interpreted as a single string value. This format can only be parsed for table with a single field of type [String](/docs/en/sql-reference/data-types/string.md). The remaining columns must be set to [DEFAULT](/docs/en/sql-reference/statements/create/table.md/#default) or [MATERIALIZED](/docs/en/sql-reference/statements/create/table.md/#materialized), or omitted.

|

||||||

|

|||||||

@ -83,8 +83,8 @@ ClickHouse, Inc. does **not** maintain the tools and libraries listed below and

|

|||||||

- Python

|

- Python

|

||||||

- [SQLAlchemy](https://www.sqlalchemy.org)

|

- [SQLAlchemy](https://www.sqlalchemy.org)

|

||||||

- [sqlalchemy-clickhouse](https://github.com/cloudflare/sqlalchemy-clickhouse) (uses [infi.clickhouse_orm](https://github.com/Infinidat/infi.clickhouse_orm))

|

- [sqlalchemy-clickhouse](https://github.com/cloudflare/sqlalchemy-clickhouse) (uses [infi.clickhouse_orm](https://github.com/Infinidat/infi.clickhouse_orm))

|

||||||

- [pandas](https://pandas.pydata.org)

|

- [PyArrow/Pandas](https://pandas.pydata.org)

|

||||||

- [pandahouse](https://github.com/kszucs/pandahouse)

|

- [Ibis](https://github.com/ibis-project/ibis)

|

||||||

- PHP

|

- PHP

|

||||||

- [Doctrine](https://www.doctrine-project.org/)

|

- [Doctrine](https://www.doctrine-project.org/)

|

||||||

- [dbal-clickhouse](https://packagist.org/packages/friendsofdoctrine/dbal-clickhouse)

|

- [dbal-clickhouse](https://packagist.org/packages/friendsofdoctrine/dbal-clickhouse)

|

||||||

|

|||||||

@ -11,7 +11,7 @@ Inserts data into a table.

|

|||||||

**Syntax**

|

**Syntax**

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...

|

||||||

```

|

```

|

||||||

|

|

||||||

You can specify a list of columns to insert using the `(c1, c2, c3)`. You can also use an expression with column [matcher](../../sql-reference/statements/select/index.md#asterisk) such as `*` and/or [modifiers](../../sql-reference/statements/select/index.md#select-modifiers) such as [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#except-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier).

|

You can specify a list of columns to insert using the `(c1, c2, c3)`. You can also use an expression with column [matcher](../../sql-reference/statements/select/index.md#asterisk) such as `*` and/or [modifiers](../../sql-reference/statements/select/index.md#select-modifiers) such as [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#except-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier).

|

||||||

@ -107,7 +107,7 @@ If table has [constraints](../../sql-reference/statements/create/table.md#constr

|

|||||||

**Syntax**

|

**Syntax**

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] SELECT ...

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] SELECT ...

|

||||||

```

|

```

|

||||||

|

|

||||||

Columns are mapped according to their position in the SELECT clause. However, their names in the SELECT expression and the table for INSERT may differ. If necessary, type casting is performed.

|

Columns are mapped according to their position in the SELECT clause. However, their names in the SELECT expression and the table for INSERT may differ. If necessary, type casting is performed.

|

||||||

@ -126,7 +126,7 @@ To insert a default value instead of `NULL` into a column with not nullable data

|

|||||||

**Syntax**

|

**Syntax**

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] FROM INFILE file_name [COMPRESSION type] FORMAT format_name

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] FROM INFILE file_name [COMPRESSION type] FORMAT format_name

|

||||||

```

|

```

|

||||||

|

|

||||||

Use the syntax above to insert data from a file, or files, stored on the **client** side. `file_name` and `type` are string literals. Input file [format](../../interfaces/formats.md) must be set in the `FORMAT` clause.

|

Use the syntax above to insert data from a file, or files, stored on the **client** side. `file_name` and `type` are string literals. Input file [format](../../interfaces/formats.md) must be set in the `FORMAT` clause.

|

||||||

|

|||||||

@ -11,7 +11,7 @@ sidebar_label: INSERT INTO

|

|||||||

**Синтаксис**

|

**Синтаксис**

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...

|

||||||

```

|

```

|

||||||

|

|

||||||

Вы можете указать список столбцов для вставки, используя синтаксис `(c1, c2, c3)`. Также можно использовать выражение cо [звездочкой](../../sql-reference/statements/select/index.md#asterisk) и/или модификаторами, такими как [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#except-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier).

|

Вы можете указать список столбцов для вставки, используя синтаксис `(c1, c2, c3)`. Также можно использовать выражение cо [звездочкой](../../sql-reference/statements/select/index.md#asterisk) и/или модификаторами, такими как [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#except-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier).

|

||||||

@ -100,7 +100,7 @@ INSERT INTO t FORMAT TabSeparated

|

|||||||

**Синтаксис**

|

**Синтаксис**

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] SELECT ...

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] SELECT ...

|

||||||

```

|

```

|

||||||

|

|

||||||

Соответствие столбцов определяется их позицией в секции SELECT. При этом, их имена в выражении SELECT и в таблице для INSERT, могут отличаться. При необходимости выполняется приведение типов данных, эквивалентное соответствующему оператору CAST.

|

Соответствие столбцов определяется их позицией в секции SELECT. При этом, их имена в выражении SELECT и в таблице для INSERT, могут отличаться. При необходимости выполняется приведение типов данных, эквивалентное соответствующему оператору CAST.

|

||||||

@ -120,7 +120,7 @@ INSERT INTO [db.]table [(c1, c2, c3)] SELECT ...

|

|||||||

**Синтаксис**

|

**Синтаксис**

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] FROM INFILE file_name [COMPRESSION type] FORMAT format_name

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] FROM INFILE file_name [COMPRESSION type] FORMAT format_name

|

||||||

```

|

```

|

||||||

|

|

||||||

Используйте этот синтаксис, чтобы вставить данные из файла, который хранится на стороне **клиента**. `file_name` и `type` задаются в виде строковых литералов. [Формат](../../interfaces/formats.md) входного файла должен быть задан в секции `FORMAT`.

|

Используйте этот синтаксис, чтобы вставить данные из файла, который хранится на стороне **клиента**. `file_name` и `type` задаются в виде строковых литералов. [Формат](../../interfaces/formats.md) входного файла должен быть задан в секции `FORMAT`.

|

||||||

|

|||||||

@ -8,7 +8,7 @@ INSERT INTO 语句主要用于向系统中添加数据.

|

|||||||

查询的基本格式:

|

查询的基本格式:

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] VALUES (v11, v12, v13), (v21, v22, v23), ...

|

||||||

```

|

```

|

||||||

|

|

||||||

您可以在查询中指定要插入的列的列表,如:`[(c1, c2, c3)]`。您还可以使用列[匹配器](../../sql-reference/statements/select/index.md#asterisk)的表达式,例如`*`和/或[修饰符](../../sql-reference/statements/select/index.md#select-modifiers),例如 [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#apply-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier)。

|

您可以在查询中指定要插入的列的列表,如:`[(c1, c2, c3)]`。您还可以使用列[匹配器](../../sql-reference/statements/select/index.md#asterisk)的表达式,例如`*`和/或[修饰符](../../sql-reference/statements/select/index.md#select-modifiers),例如 [APPLY](../../sql-reference/statements/select/index.md#apply-modifier), [EXCEPT](../../sql-reference/statements/select/index.md#apply-modifier), [REPLACE](../../sql-reference/statements/select/index.md#replace-modifier)。

|

||||||

@ -71,7 +71,7 @@ INSERT INTO [db.]table [(c1, c2, c3)] FORMAT format_name data_set

|

|||||||

例如,下面的查询所使用的输入格式就与上面INSERT … VALUES的中使用的输入格式相同:

|

例如,下面的查询所使用的输入格式就与上面INSERT … VALUES的中使用的输入格式相同:

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] FORMAT Values (v11, v12, v13), (v21, v22, v23), ...

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] FORMAT Values (v11, v12, v13), (v21, v22, v23), ...

|

||||||

```

|

```

|

||||||

|

|

||||||

ClickHouse会清除数据前所有的空白字符与一个换行符(如果有换行符的话)。所以在进行查询时,我们建议您将数据放入到输入输出格式名称后的新的一行中去(如果数据是以空白字符开始的,这将非常重要)。

|

ClickHouse会清除数据前所有的空白字符与一个换行符(如果有换行符的话)。所以在进行查询时,我们建议您将数据放入到输入输出格式名称后的新的一行中去(如果数据是以空白字符开始的,这将非常重要)。

|

||||||

@ -93,7 +93,7 @@ INSERT INTO t FORMAT TabSeparated

|

|||||||

### 使用`SELECT`的结果写入 {#inserting-the-results-of-select}

|

### 使用`SELECT`的结果写入 {#inserting-the-results-of-select}

|

||||||

|

|

||||||

``` sql

|

``` sql

|

||||||

INSERT INTO [db.]table [(c1, c2, c3)] SELECT ...

|

INSERT INTO [TABLE] [db.]table [(c1, c2, c3)] SELECT ...

|

||||||

```

|

```

|

||||||

|

|

||||||

写入与SELECT的列的对应关系是使用位置来进行对应的,尽管它们在SELECT表达式与INSERT中的名称可能是不同的。如果需要,会对它们执行对应的类型转换。

|

写入与SELECT的列的对应关系是使用位置来进行对应的,尽管它们在SELECT表达式与INSERT中的名称可能是不同的。如果需要,会对它们执行对应的类型转换。

|

||||||

|

|||||||

@ -997,7 +997,9 @@ namespace

|

|||||||

{

|

{

|

||||||

/// sudo respects limits in /etc/security/limits.conf e.g. open files,

|

/// sudo respects limits in /etc/security/limits.conf e.g. open files,

|

||||||

/// that's why we are using it instead of the 'clickhouse su' tool.

|

/// that's why we are using it instead of the 'clickhouse su' tool.

|

||||||

command = fmt::format("sudo -u '{}' {}", user, command);

|

/// by default, sudo resets all the ENV variables, but we should preserve

|

||||||

|

/// the values /etc/default/clickhouse in /etc/init.d/clickhouse file

|

||||||

|

command = fmt::format("sudo --preserve-env -u '{}' {}", user, command);

|

||||||

}

|

}

|

||||||

|

|

||||||

fmt::print("Will run {}\n", command);

|

fmt::print("Will run {}\n", command);

|

||||||

|

|||||||
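Note on the hunk above: the new line differs from the old one only by the `--preserve-env` flag, which asks sudo to keep the caller's environment (subject to the sudoers policy) instead of resetting it. A minimal sketch of how the command string is assembled is shown below; `wrapWithSudo` is a hypothetical helper name, and plain string concatenation stands in for the `fmt::format` call used in the diff.

```cpp
#include <string>

// Hypothetical helper (not part of the diff): wraps `command` so that it runs
// as `user` through sudo while asking sudo to keep the caller's environment.
std::string wrapWithSudo(const std::string & user, const std::string & command)
{
    // Same shape as the format string in the diff: "sudo --preserve-env -u '{}' {}".
    // Caveat: the user name is only wrapped in single quotes, not escaped.
    return "sudo --preserve-env -u '" + user + "' " + command;
}
```

For example, `wrapWithSudo("clickhouse", "clickhouse-server --daemon")` yields `sudo --preserve-env -u 'clickhouse' clickhouse-server --daemon`.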

@ -105,6 +105,7 @@ namespace ErrorCodes

|

|||||||

extern const int LOGICAL_ERROR;

|

extern const int LOGICAL_ERROR;

|

||||||

extern const int CANNOT_OPEN_FILE;

|

extern const int CANNOT_OPEN_FILE;

|

||||||

extern const int FILE_ALREADY_EXISTS;

|

extern const int FILE_ALREADY_EXISTS;

|

||||||

|

extern const int USER_SESSION_LIMIT_EXCEEDED;

|

||||||

}

|

}

|

||||||

|

|

||||||

}

|

}

|

||||||

@ -2408,6 +2409,13 @@ void ClientBase::runInteractive()

|

|||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

if (suggest && suggest->getLastError() == ErrorCodes::USER_SESSION_LIMIT_EXCEEDED)

|

||||||

|

{

|

||||||

|

// If a separate connection loading suggestions failed to open a new session,

|

||||||

|

// use the main session to receive them.

|

||||||

|

suggest->load(*connection, connection_parameters.timeouts, config().getInt("suggestion_limit"));

|

||||||

|

}

|

||||||

|

|

||||||

try

|

try

|

||||||

{

|

{

|

||||||

if (!processQueryText(input))

|

if (!processQueryText(input))

|

||||||

|

|||||||

@ -22,9 +22,11 @@ namespace DB

|

|||||||

{

|

{

|

||||||

namespace ErrorCodes

|

namespace ErrorCodes

|

||||||

{

|

{

|

||||||

|

extern const int OK;

|

||||||

extern const int LOGICAL_ERROR;

|

extern const int LOGICAL_ERROR;

|

||||||

extern const int UNKNOWN_PACKET_FROM_SERVER;

|

extern const int UNKNOWN_PACKET_FROM_SERVER;

|

||||||

extern const int DEADLOCK_AVOIDED;

|

extern const int DEADLOCK_AVOIDED;

|

||||||

|

extern const int USER_SESSION_LIMIT_EXCEEDED;

|

||||||

}

|

}

|

||||||

|

|

||||||

Suggest::Suggest()

|

Suggest::Suggest()

|

||||||

@ -121,21 +123,24 @@ void Suggest::load(ContextPtr context, const ConnectionParameters & connection_p

|

|||||||

}

|

}

|

||||||

catch (const Exception & e)

|

catch (const Exception & e)

|

||||||

{

|

{

|

||||||

|

last_error = e.code();

|

||||||

if (e.code() == ErrorCodes::DEADLOCK_AVOIDED)

|

if (e.code() == ErrorCodes::DEADLOCK_AVOIDED)

|

||||||

continue;

|

continue;

|

||||||

|

else if (e.code() != ErrorCodes::USER_SESSION_LIMIT_EXCEEDED)

|

||||||

/// Client can successfully connect to the server and

|

{

|

||||||

/// get ErrorCodes::USER_SESSION_LIMIT_EXCEEDED for suggestion connection.

|

/// We should not use std::cerr here, because this method works concurrently with the main thread.

|

||||||

|

/// WriteBufferFromFileDescriptor will write directly to the file descriptor, avoiding data race on std::cerr.

|

||||||

/// We should not use std::cerr here, because this method works concurrently with the main thread.

|

///

|

||||||

/// WriteBufferFromFileDescriptor will write directly to the file descriptor, avoiding data race on std::cerr.

|

/// USER_SESSION_LIMIT_EXCEEDED is ignored here. The client will try to receive

|

||||||

|

/// suggestions using the main connection later.

|

||||||

WriteBufferFromFileDescriptor out(STDERR_FILENO, 4096);

|

WriteBufferFromFileDescriptor out(STDERR_FILENO, 4096);

|

||||||

out << "Cannot load data for command line suggestions: " << getCurrentExceptionMessage(false, true) << "\n";

|

out << "Cannot load data for command line suggestions: " << getCurrentExceptionMessage(false, true) << "\n";

|

||||||

out.next();

|

out.next();

|

||||||

|

}

|

||||||

}

|

}

|

||||||

catch (...)

|

catch (...)

|

||||||

{

|

{

|

||||||

|

last_error = getCurrentExceptionCode();

|

||||||

WriteBufferFromFileDescriptor out(STDERR_FILENO, 4096);

|

WriteBufferFromFileDescriptor out(STDERR_FILENO, 4096);

|

||||||

out << "Cannot load data for command line suggestions: " << getCurrentExceptionMessage(false, true) << "\n";

|

out << "Cannot load data for command line suggestions: " << getCurrentExceptionMessage(false, true) << "\n";

|

||||||

out.next();

|

out.next();

|

||||||

@ -148,6 +153,21 @@ void Suggest::load(ContextPtr context, const ConnectionParameters & connection_p

|

|||||||

});

|

});

|

||||||

}

|

}

|

||||||

|

|

||||||

|

void Suggest::load(IServerConnection & connection,

|

||||||

|

const ConnectionTimeouts & timeouts,

|

||||||

|

Int32 suggestion_limit)

|

||||||

|

{

|

||||||

|

try

|

||||||

|

{

|

||||||

|

fetch(connection, timeouts, getLoadSuggestionQuery(suggestion_limit, true));

|

||||||

|

}

|

||||||

|

catch (...)

|

||||||

|

{

|

||||||

|

std::cerr << "Suggestions loading exception: " << getCurrentExceptionMessage(false, true) << std::endl;

|

||||||

|

last_error = getCurrentExceptionCode();

|

||||||

|

}

|

||||||

|

}

|

||||||

|

|

||||||

void Suggest::fetch(IServerConnection & connection, const ConnectionTimeouts & timeouts, const std::string & query)

|

void Suggest::fetch(IServerConnection & connection, const ConnectionTimeouts & timeouts, const std::string & query)

|

||||||

{

|

{

|

||||||

connection.sendQuery(

|

connection.sendQuery(

|

||||||

@ -176,6 +196,7 @@ void Suggest::fetch(IServerConnection & connection, const ConnectionTimeouts & t

|

|||||||

return;

|

return;

|

||||||

|

|

||||||

case Protocol::Server::EndOfStream:

|

case Protocol::Server::EndOfStream:

|

||||||

|

last_error = ErrorCodes::OK;

|

||||||

return;

|

return;

|

||||||

|

|

||||||

default:

|

default:

|

||||||

|

|||||||

@ -7,6 +7,7 @@

|

|||||||

#include <Client/LocalConnection.h>

|

#include <Client/LocalConnection.h>

|

||||||

#include <Client/LineReader.h>

|

#include <Client/LineReader.h>

|

||||||

#include <IO/ConnectionTimeouts.h>

|

#include <IO/ConnectionTimeouts.h>

|

||||||

|

#include <atomic>

|

||||||

#include <thread>

|

#include <thread>

|

||||||

|

|

||||||

|

|

||||||

@ -28,9 +29,15 @@ public:

|

|||||||

template <typename ConnectionType>

|

template <typename ConnectionType>

|

||||||

void load(ContextPtr context, const ConnectionParameters & connection_parameters, Int32 suggestion_limit);

|

void load(ContextPtr context, const ConnectionParameters & connection_parameters, Int32 suggestion_limit);

|

||||||

|

|

||||||

|

void load(IServerConnection & connection,

|

||||||

|

const ConnectionTimeouts & timeouts,

|

||||||

|

Int32 suggestion_limit);

|

||||||

|

|

||||||

/// Older server versions cannot execute the query loading suggestions.

|

/// Older server versions cannot execute the query loading suggestions.

|

||||||

static constexpr int MIN_SERVER_REVISION = DBMS_MIN_PROTOCOL_VERSION_WITH_VIEW_IF_PERMITTED;

|

static constexpr int MIN_SERVER_REVISION = DBMS_MIN_PROTOCOL_VERSION_WITH_VIEW_IF_PERMITTED;

|

||||||

|

|

||||||

|

int getLastError() const { return last_error.load(); }

|

||||||

|

|

||||||

private:

|

private:

|

||||||

void fetch(IServerConnection & connection, const ConnectionTimeouts & timeouts, const std::string & query);

|

void fetch(IServerConnection & connection, const ConnectionTimeouts & timeouts, const std::string & query);

|

||||||

|

|

||||||

@ -38,6 +45,8 @@ private:

|

|||||||

|

|

||||||

/// Words are fetched asynchronously.

|

/// Words are fetched asynchronously.

|

||||||

std::thread loading_thread;

|

std::thread loading_thread;

|

||||||

|

|

||||||

|

std::atomic<int> last_error { -1 };

|

||||||

};

|

};

|

||||||

|

|

||||||

}

|

}

|

||||||

|

|||||||
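Note on the `Suggest` hunks above: the loader now records its last error code in an atomic member, and the interactive loop checks that code so it can reload suggestions over the main connection when the separate suggestion connection was rejected with `USER_SESSION_LIMIT_EXCEEDED`. The sketch below illustrates that control flow only. `SuggestSketch`, `fallbackIfSessionLimited`, and the numeric error values are hypothetical placeholders, and the background thread from the real code is left out.

```cpp
#include <atomic>
#include <string>
#include <vector>

// Placeholder error codes for illustration; these are NOT ClickHouse's real values.
namespace ErrorCodes
{
    constexpr int OK = 0;
    constexpr int USER_SESSION_LIMIT_EXCEEDED = 1001;
}

// Hypothetical, simplified stand-in for the Suggest class in the diff.
class SuggestSketch
{
public:
    // Simulates the attempt made on a separate connection; `error` is the
    // code that attempt would have reported.
    void loadOnSeparateConnection(int error, const std::vector<std::string> & server_words)
    {
        last_error = error;
        if (error == ErrorCodes::OK)
            words = server_words;
    }

    // Simulates the new overload that reuses an already open connection.
    void loadOnMainConnection(const std::vector<std::string> & server_words)
    {
        words = server_words;
        last_error = ErrorCodes::OK;
    }

    int getLastError() const { return last_error.load(); }
    const std::vector<std::string> & getWords() const { return words; }

private:
    std::vector<std::string> words;
    std::atomic<int> last_error{-1}; // same initial value as in the diff
};

// Mirrors the check added to the interactive loop: fall back to the main
// connection only when the separate one hit the session limit.
bool fallbackIfSessionLimited(SuggestSketch & suggest, const std::vector<std::string> & server_words)
{
    if (suggest.getLastError() != ErrorCodes::USER_SESSION_LIMIT_EXCEEDED)
        return false;
    suggest.loadOnMainConnection(server_words);
    return true;
}
```

The error code is held in a `std::atomic<int>` because, in the diff, it is written by the loading thread and read by the main thread.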

@ -3,23 +3,25 @@

|

|||||||

#include <base/Decimal_fwd.h>

|

#include <base/Decimal_fwd.h>

|

||||||

#include <base/extended_types.h>

|

#include <base/extended_types.h>

|

||||||

|

|

||||||

|

#include <city.h>

|

||||||

|

|

||||||

#include <utility>

|

#include <utility>

|

||||||

|

|

||||||

namespace DB

|

namespace DB

|

||||||

{

|

{

|

||||||

template <std::endian endian, typename T>

|

template <std::endian ToEndian, std::endian FromEndian = std::endian::native, typename T>

|

||||||

requires std::is_integral_v<T>

|

requires std::is_integral_v<T>

|

||||||

inline void transformEndianness(T & value)

|

inline void transformEndianness(T & value)

|

||||||

{

|

{

|

||||||

if constexpr (endian != std::endian::native)

|

if constexpr (ToEndian != FromEndian)

|

||||||

value = std::byteswap(value);

|

value = std::byteswap(value);

|

||||||

}

|

}

|

||||||

|

|

||||||

template <std::endian endian, typename T>

|

template <std::endian ToEndian, std::endian FromEndian = std::endian::native, typename T>

|

||||||

requires is_big_int_v<T>

|

requires is_big_int_v<T>

|

||||||

inline void transformEndianness(T & x)

|

inline void transformEndianness(T & x)

|

||||||

{

|

{

|

||||||

if constexpr (std::endian::native != endian)

|

if constexpr (ToEndian != FromEndian)

|

||||||

{

|

{

|

||||||

auto & items = x.items;

|

auto & items = x.items;

|

||||||

std::transform(std::begin(items), std::end(items), std::begin(items), [](auto & item) { return std::byteswap(item); });

|

std::transform(std::begin(items), std::end(items), std::begin(items), [](auto & item) { return std::byteswap(item); });

|

||||||

@ -27,42 +29,49 @@ inline void transformEndianness(T & x)

|

|||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

template <std::endian endian, typename T>

|

template <std::endian ToEndian, std::endian FromEndian = std::endian::native, typename T>

|

||||||

requires is_decimal<T>

|

requires is_decimal<T>

|

||||||

inline void transformEndianness(T & x)

|

inline void transformEndianness(T & x)

|

||||||

{

|

{

|

||||||

transformEndianness<endian>(x.value);

|

transformEndianness<ToEndian, FromEndian>(x.value);

|

||||||

}

|

}

|

||||||

|

|

||||||

template <std::endian endian, typename T>

|

template <std::endian ToEndian, std::endian FromEndian = std::endian::native, typename T>

|

||||||

requires std::is_floating_point_v<T>

|

requires std::is_floating_point_v<T>

|

||||||

inline void transformEndianness(T & value)

|

inline void transformEndianness(T & value)

|

||||||

{

|

{

|

||||||

if constexpr (std::endian::native != endian)

|

if constexpr (ToEndian != FromEndian)

|

||||||

{

|

{

|

||||||

auto * start = reinterpret_cast<std::byte *>(&value);

|

auto * start = reinterpret_cast<std::byte *>(&value);

|

||||||

std::reverse(start, start + sizeof(T));

|

std::reverse(start, start + sizeof(T));

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

template <std::endian endian, typename T>

|

template <std::endian ToEndian, std::endian FromEndian = std::endian::native, typename T>

|

||||||

requires std::is_scoped_enum_v<T>

|

requires std::is_scoped_enum_v<T>

|

||||||

inline void transformEndianness(T & x)

|

inline void transformEndianness(T & x)

|

||||||

{

|

{

|

||||||

using UnderlyingType = std::underlying_type_t<T>;

|

using UnderlyingType = std::underlying_type_t<T>;

|

||||||

transformEndianness<endian>(reinterpret_cast<UnderlyingType &>(x));

|

transformEndianness<ToEndian, FromEndian>(reinterpret_cast<UnderlyingType &>(x));

|

||||||

}

|

}

|

||||||

|

|

||||||

template <std::endian endian, typename A, typename B>

|

template <std::endian ToEndian, std::endian FromEndian = std::endian::native, typename A, typename B>

|

||||||

inline void transformEndianness(std::pair<A, B> & pair)

|

inline void transformEndianness(std::pair<A, B> & pair)

|

||||||

{

|

{

|

||||||

transformEndianness<endian>(pair.first);

|

transformEndianness<ToEndian, FromEndian>(pair.first);

|

||||||

transformEndianness<endian>(pair.second);

|

transformEndianness<ToEndian, FromEndian>(pair.second);

|

||||||

}

|

}

|

||||||

|

|

||||||

template <std::endian endian, typename T, typename Tag>

|

template <std::endian ToEndian, std::endian FromEndian = std::endian::native, typename T, typename Tag>

|

||||||

inline void transformEndianness(StrongTypedef<T, Tag> & x)

|

inline void transformEndianness(StrongTypedef<T, Tag> & x)

|

||||||

{

|

{

|

||||||

transformEndianness<endian>(x.toUnderType());

|

transformEndianness<ToEndian, FromEndian>(x.toUnderType());

|

||||||

|

}

|

||||||

|

|

||||||

|

template <std::endian ToEndian, std::endian FromEndian = std::endian::native>

|

||||||

|

inline void transformEndianness(CityHash_v1_0_2::uint128 & x)

|

||||||

|

{

|

||||||

|

transformEndianness<ToEndian, FromEndian>(x.low64);

|

||||||

|

transformEndianness<ToEndian, FromEndian>(x.high64);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|||||||
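Note on the `transformEndianness` hunks above: every overload gains a second template parameter, `FromEndian`, defaulting to the native byte order, and the byte swap now happens whenever `ToEndian != FromEndian` rather than whenever the target differs from native. The following is a minimal sketch of that idea for trivially copyable scalars. `Endian`, `NativeEndian`, and `transformEndiannessSketch` are hypothetical names that stand in for `std::endian` and the real function so the example builds as C++17, and byte reversal replaces `std::byteswap`.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical stand-in for std::endian (C++20).
enum class Endian { Little, Big };

// Byte-order detection through GCC/Clang predefined macros; falling back to
// little-endian when the macros are missing is an assumption of this sketch.
#if defined(__BYTE_ORDER__) && defined(__ORDER_BIG_ENDIAN__) && (__BYTE_ORDER__ == __ORDER_BIG_ENDIAN__)
constexpr Endian NativeEndian = Endian::Big;
#else
constexpr Endian NativeEndian = Endian::Little;
#endif

// Converts `value` in place from FromEndian to ToEndian. When both orders are
// equal, the branch is discarded at compile time and nothing happens.
template <Endian ToEndian, Endian FromEndian = NativeEndian, typename T>
void transformEndiannessSketch(T & value)
{
    if constexpr (ToEndian != FromEndian)
    {
        auto * start = reinterpret_cast<unsigned char *>(&value);
        std::reverse(start, start + sizeof(T));
    }
}
```

With both orders explicit, as in the deserialization hunk of the diff (`<std::endian::big, std::endian::little>`), the result no longer depends on the host: `transformEndiannessSketch<Endian::Big, Endian::Little>(v)` turns `0x11223344` into `0x44332211` on any machine.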

@ -152,7 +152,7 @@ void ZooKeeper::init(ZooKeeperArgs args_)

|

|||||||

throw KeeperException(code, "/");

|

throw KeeperException(code, "/");

|

||||||

|

|

||||||

if (code == Coordination::Error::ZNONODE)

|

if (code == Coordination::Error::ZNONODE)

|

||||||

throw KeeperException("ZooKeeper root doesn't exist. You should create root node " + args.chroot + " before start.", Coordination::Error::ZNONODE);

|

throw KeeperException(Coordination::Error::ZNONODE, "ZooKeeper root doesn't exist. You should create root node {} before start.", args.chroot);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -491,7 +491,7 @@ std::string ZooKeeper::get(const std::string & path, Coordination::Stat * stat,

|

|||||||

if (tryGet(path, res, stat, watch, &code))

|

if (tryGet(path, res, stat, watch, &code))

|

||||||

return res;

|

return res;

|

||||||

else

|

else

|

||||||

throw KeeperException("Can't get data for node " + path + ": node doesn't exist", code);

|

throw KeeperException(code, "Can't get data for node '{}': node doesn't exist", path);

|

||||||

}

|

}

|

||||||

|

|

||||||

std::string ZooKeeper::getWatch(const std::string & path, Coordination::Stat * stat, Coordination::WatchCallback watch_callback)

|

std::string ZooKeeper::getWatch(const std::string & path, Coordination::Stat * stat, Coordination::WatchCallback watch_callback)

|

||||||

@ -501,7 +501,7 @@ std::string ZooKeeper::getWatch(const std::string & path, Coordination::Stat * s

|

|||||||

if (tryGetWatch(path, res, stat, watch_callback, &code))

|

if (tryGetWatch(path, res, stat, watch_callback, &code))

|

||||||

return res;

|

return res;

|

||||||

else

|

else

|

||||||

throw KeeperException("Can't get data for node " + path + ": node doesn't exist", code);

|

throw KeeperException(code, "Can't get data for node '{}': node doesn't exist", path);

|

||||||

}

|

}

|

||||||

|

|

||||||

bool ZooKeeper::tryGet(

|

bool ZooKeeper::tryGet(

|

||||||

|

|||||||

@ -213,7 +213,7 @@ void ZooKeeperArgs::initFromKeeperSection(const Poco::Util::AbstractConfiguratio

|

|||||||

};

|

};

|

||||||

}

|

}

|

||||||

else

|

else

|

||||||

throw KeeperException(std::string("Unknown key ") + key + " in config file", Coordination::Error::ZBADARGUMENTS);

|

throw KeeperException(Coordination::Error::ZBADARGUMENTS, "Unknown key {} in config file", key);

|

||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

|

|||||||

@ -10,6 +10,8 @@

|

|||||||

#include <Formats/ProtobufReader.h>

|

#include <Formats/ProtobufReader.h>

|

||||||

#include <Core/Field.h>

|

#include <Core/Field.h>

|

||||||

|

|

||||||

|

#include <ranges>

|

||||||

|

|

||||||

namespace DB

|

namespace DB

|

||||||

{

|

{

|

||||||

|

|

||||||

@ -135,13 +137,25 @@ template <typename T>

|

|||||||

void SerializationNumber<T>::serializeBinaryBulk(const IColumn & column, WriteBuffer & ostr, size_t offset, size_t limit) const

|

void SerializationNumber<T>::serializeBinaryBulk(const IColumn & column, WriteBuffer & ostr, size_t offset, size_t limit) const

|

||||||

{

|

{

|

||||||

const typename ColumnVector<T>::Container & x = typeid_cast<const ColumnVector<T> &>(column).getData();

|

const typename ColumnVector<T>::Container & x = typeid_cast<const ColumnVector<T> &>(column).getData();

|

||||||

|

if (const size_t size = x.size(); limit == 0 || offset + limit > size)

|

||||||

size_t size = x.size();

|

|

||||||

|

|

||||||

if (limit == 0 || offset + limit > size)

|

|

||||||

limit = size - offset;

|

limit = size - offset;

|

||||||

|

|

||||||

if (limit)

|

if (limit == 0)

|

||||||

|

return;

|

||||||

|

|

||||||

|

if constexpr (std::endian::native == std::endian::big && sizeof(T) >= 2)

|

||||||

|

{

|

||||||

|

static constexpr auto to_little_endian = [](auto i)

|

||||||

|

{

|

||||||

|

transformEndianness<std::endian::little>(i);

|

||||||

|

return i;

|

||||||

|

};

|

||||||

|

|

||||||

|

std::ranges::for_each(

|

||||||

|

x | std::views::drop(offset) | std::views::take(limit) | std::views::transform(to_little_endian),

|

||||||

|

[&ostr](const auto & i) { ostr.write(reinterpret_cast<const char *>(&i), sizeof(typename ColumnVector<T>::ValueType)); });

|

||||||

|

}

|

||||||

|

else

|

||||||

ostr.write(reinterpret_cast<const char *>(&x[offset]), sizeof(typename ColumnVector<T>::ValueType) * limit);

|

ostr.write(reinterpret_cast<const char *>(&x[offset]), sizeof(typename ColumnVector<T>::ValueType) * limit);

|

||||||

}

|

}

|

||||||

|

|

||||||

@ -149,10 +163,13 @@ template <typename T>

|

|||||||

void SerializationNumber<T>::deserializeBinaryBulk(IColumn & column, ReadBuffer & istr, size_t limit, double /*avg_value_size_hint*/) const

|

void SerializationNumber<T>::deserializeBinaryBulk(IColumn & column, ReadBuffer & istr, size_t limit, double /*avg_value_size_hint*/) const

|

||||||

{

|

{

|

||||||

typename ColumnVector<T>::Container & x = typeid_cast<ColumnVector<T> &>(column).getData();

|

typename ColumnVector<T>::Container & x = typeid_cast<ColumnVector<T> &>(column).getData();

|

||||||

size_t initial_size = x.size();

|

const size_t initial_size = x.size();

|

||||||

x.resize(initial_size + limit);

|

x.resize(initial_size + limit);

|

||||||

size_t size = istr.readBig(reinterpret_cast<char*>(&x[initial_size]), sizeof(typename ColumnVector<T>::ValueType) * limit);

|

const size_t size = istr.readBig(reinterpret_cast<char*>(&x[initial_size]), sizeof(typename ColumnVector<T>::ValueType) * limit);

|

||||||

x.resize(initial_size + size / sizeof(typename ColumnVector<T>::ValueType));

|

x.resize(initial_size + size / sizeof(typename ColumnVector<T>::ValueType));

|

||||||

|

|

||||||

|

if constexpr (std::endian::native == std::endian::big && sizeof(T) >= 2)

|

||||||

|

std::ranges::for_each(x | std::views::drop(initial_size), [](auto & i) { transformEndianness<std::endian::big, std::endian::little>(i); });

|

||||||

}

|

}

|

||||||

|

|

||||||

template class SerializationNumber<UInt8>;

|

template class SerializationNumber<UInt8>;

|

||||||

|

|||||||
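Note on the `SerializationNumber` hunks above: the bulk serializer first clamps `limit` to the available range, returns early when nothing is left, and on big-endian hosts converts each value to little-endian before writing. The sketch below reproduces the clamping and the little-endian output with plain loops instead of the `std::ranges` pipeline used in the diff. `serializeBulkLittleEndian` is a hypothetical name, a `std::string` stands in for `WriteBuffer`, and the bytes are emitted one at a time on every host, whereas the diff writes the whole range in a single call on little-endian hosts.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <type_traits>
#include <vector>

// Appends up to `limit` values of `x`, starting at `offset`, to `out`, each
// encoded as sizeof(T) little-endian bytes. limit == 0 means "to the end".
template <typename T>
void serializeBulkLittleEndian(const std::vector<T> & x, std::string & out, std::size_t offset, std::size_t limit)
{
    static_assert(std::is_integral_v<T>, "sketch covers integral types only");

    const std::size_t size = x.size();
    if (offset > size)
        return; // extra guard added for the sketch; not present in the diff

    // Same clamping as in the diff.
    if (limit == 0 || offset + limit > size)
        limit = size - offset;

    if (limit == 0)
        return;

    for (std::size_t i = offset; i < offset + limit; ++i)
    {
        const auto v = static_cast<std::uint64_t>(x[i]);
        // Least significant byte first, independent of the host byte order.
        for (std::size_t b = 0; b < sizeof(T); ++b)
            out.push_back(static_cast<char>((v >> (8 * b)) & 0xFF));
    }
}
```

For a `std::vector<std::uint16_t>` holding `0x0102, 0x0304, 0x0506`, calling the function with `offset = 1` and `limit = 0` appends the bytes `04 03 06 05`.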

@ -101,6 +101,7 @@ void registerInputFormatJSONAsObject(FormatFactory & factory);

|

|||||||

void registerInputFormatLineAsString(FormatFactory & factory);

|

void registerInputFormatLineAsString(FormatFactory & factory);

|

||||||

void registerInputFormatMySQLDump(FormatFactory & factory);

|

void registerInputFormatMySQLDump(FormatFactory & factory);

|

||||||

void registerInputFormatParquetMetadata(FormatFactory & factory);

|

void registerInputFormatParquetMetadata(FormatFactory & factory);

|

||||||

|

void registerInputFormatOne(FormatFactory & factory);

|

||||||

|

|

||||||

#if USE_HIVE

|

#if USE_HIVE

|

||||||

void registerInputFormatHiveText(FormatFactory & factory);

|

void registerInputFormatHiveText(FormatFactory & factory);

|

||||||

@ -142,6 +143,7 @@ void registerTemplateSchemaReader(FormatFactory & factory);

|

|||||||

void registerMySQLSchemaReader(FormatFactory & factory);

|

void registerMySQLSchemaReader(FormatFactory & factory);

|

||||||

void registerBSONEachRowSchemaReader(FormatFactory & factory);

|

void registerBSONEachRowSchemaReader(FormatFactory & factory);

|

||||||

void registerParquetMetadataSchemaReader(FormatFactory & factory);

|

void registerParquetMetadataSchemaReader(FormatFactory & factory);

|

||||||

|

void registerOneSchemaReader(FormatFactory & factory);

|

||||||

|

|

||||||

void registerFileExtensions(FormatFactory & factory);

|

void registerFileExtensions(FormatFactory & factory);

|

||||||

|

|

||||||

@ -243,6 +245,7 @@ void registerFormats()

|

|||||||

registerInputFormatMySQLDump(factory);

|

registerInputFormatMySQLDump(factory);

|

||||||

|

|

||||||

registerInputFormatParquetMetadata(factory);

|

registerInputFormatParquetMetadata(factory);

|

||||||

|

registerInputFormatOne(factory);

|

||||||

|

|

||||||

registerNonTrivialPrefixAndSuffixCheckerJSONEachRow(factory);

|

registerNonTrivialPrefixAndSuffixCheckerJSONEachRow(factory);

|

||||||

registerNonTrivialPrefixAndSuffixCheckerJSONAsString(factory);

|

registerNonTrivialPrefixAndSuffixCheckerJSONAsString(factory);

|

||||||

@ -279,6 +282,7 @@ void registerFormats()

|

|||||||

registerMySQLSchemaReader(factory);

|

registerMySQLSchemaReader(factory);

|

||||||

registerBSONEachRowSchemaReader(factory);

|

registerBSONEachRowSchemaReader(factory);

|

||||||

registerParquetMetadataSchemaReader(factory);

|

registerParquetMetadataSchemaReader(factory);

|

||||||

|

registerOneSchemaReader(factory);

|

||||||

}

|

}

|

||||||

|

|

||||||

}

|

}

|

||||||

|

|||||||

@ -1374,8 +1374,8 @@ public:

|

|||||||

|

|

||||||

if constexpr (std::is_same_v<ToType, UInt128>) /// backward-compatible

|

if constexpr (std::is_same_v<ToType, UInt128>) /// backward-compatible

|

||||||

{

|

{

|

||||||

if (std::endian::native == std::endian::big)

|

if constexpr (std::endian::native == std::endian::big)

|

||||||

std::ranges::for_each(col_to->getData(), transformEndianness<std::endian::little, ToType>);

|

std::ranges::for_each(col_to->getData(), transformEndianness<std::endian::little, std::endian::native, ToType>);

|

||||||

|

|

||||||

auto col_to_fixed_string = ColumnFixedString::create(sizeof(UInt128));

|

auto col_to_fixed_string = ColumnFixedString::create(sizeof(UInt128));

|

||||||

const auto & data = col_to->getData();

|

const auto & data = col_to->getData();

|

||||||

|

|||||||

@ -188,7 +188,7 @@ Client::Client(

|

|||||||

}

|

}

|

||||||

}

|

}

|

||||||

|

|

||||||

LOG_TRACE(log, "API mode: {}", toString(api_mode));

|

LOG_TRACE(log, "API mode of the S3 client: {}", api_mode);

|

||||||

|

|

||||||

detect_region = provider_type == ProviderType::AWS && explicit_region == Aws::Region::AWS_GLOBAL;

|

detect_region = provider_type == ProviderType::AWS && explicit_region == Aws::Region::AWS_GLOBAL;

|

||||||

|

|

||||||

|

|||||||

@ -60,9 +60,6 @@ public:

|

|||||||

/// (When there is a local replica with big delay).

|

/// (When there is a local replica with big delay).

|

||||||

bool lazy = false;

|

bool lazy = false;

|

||||||

time_t local_delay = 0;

|

time_t local_delay = 0;

|

||||||

|

|

||||||

/// Set only if parallel reading from replicas is used.

|

|

||||||

std::shared_ptr<ParallelReplicasReadingCoordinator> coordinator;

|

|

||||||

};

|

};

|

||||||

|

|

||||||

using Shards = std::vector<Shard>;

|